Want to run the Qwen1.5-MoE-A2.7B model with MLX? This article walks you through installing the mlx-lm package, loading the model, and generating text with it.

Step-by-Step Guide to Setup

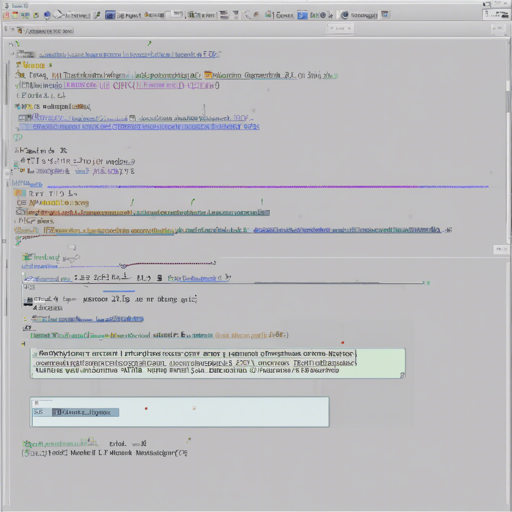

- Install MLX: First, make sure the mlx-lm package is installed in your Python environment. Use the command below to install it via pip:

```
pip install mlx-lm
```

- Load the Model: Next, import the load and generate functions from mlx_lm and load the Qwen1.5-MoE-A2.7B model:

```python
from mlx_lm import load, generate

model, tokenizer = load('mlx-community/Qwen1.5-MoE-A2.7B-4bit')
```

- Generate Text: Now, you can create a text prompt and generate a response with the model. Here’s how you do it:

```python
response = generate(model, tokenizer, prompt='Write a story about Einstein', verbose=True)
```

Understanding the Code through Analogy

Think of using the Qwen1.5-MoE-A2.7B model as preparing a gourmet meal. The installation of MLX is akin to gathering all the ingredients and kitchen tools you need before you begin cooking. Once everything is set, loading the model is like preheating your oven to make sure it’s ready when you are. Finally, generating a response with the model is similar to putting your ingredients into the oven and waiting for the delicious results. Just as with cooking, the preparation leads to a fantastic outcome!
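To tie the three steps together, here is a minimal sketch of how they might be combined into one reusable script. The `build_prompt` and `run` helpers are hypothetical names introduced only for illustration, and the `max_tokens` keyword is an assumption that may depend on your mlx-lm version. The import sits inside `run` so the file can still be imported on a machine where mlx-lm is not installed.

```python
MODEL_ID = "mlx-community/Qwen1.5-MoE-A2.7B-4bit"  # model name used in this article


def build_prompt(topic: str) -> str:
    """Hypothetical helper: turn a topic into a plain-text prompt."""
    return f"Write a story about {topic.strip()}"


def run(topic: str, max_tokens: int = 200) -> str:
    """Load the model and generate text for the given topic."""
    # Imported lazily so this file can be imported without mlx-lm installed.
    from mlx_lm import load, generate

    # The model weights are downloaded the first time this runs.
    model, tokenizer = load(MODEL_ID)
    return generate(
        model,
        tokenizer,
        prompt=build_prompt(topic),
        max_tokens=max_tokens,  # assumed keyword; check your mlx-lm version
        verbose=True,  # print the text as it is generated
    )
```

On a machine with mlx-lm installed, calling `run("Einstein")` should reproduce the example from the steps above, with the response length capped by `max_tokens`.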

Troubleshooting Common Issues

If you run into any issues while setting up or using the Qwen model, here are a few troubleshooting tips:

- Module Not Found Error: Make sure the mlx-lm package installed successfully, and check that you are running Python from the same environment you installed it into.

- Model Not Loading: Double-check the model name to ensure it matches the one provided (mlx-community/Qwen1.5-MoE-A2.7B-4bit).

- Error in Generating Response: Ensure your prompt is well-defined. If it’s too vague, the model might struggle to produce meaningful text.
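The first two checks in the list above can be partly automated. The sketch below uses only the Python standard library. The `diagnose` and `model_id_looks_valid` helpers are hypothetical names, and the `owner/name` shape check is a rough assumption about how model names are written, not a guarantee that the model exists.

```python
import importlib.util
import platform
import sys


def diagnose(package: str = "mlx_lm") -> dict:
    """Collect basic facts that explain most import failures."""
    return {
        "python": sys.executable,  # which interpreter is actually running
        "installed": importlib.util.find_spec(package) is not None,
        "system": platform.system(),
        "machine": platform.machine(),
    }


def model_id_looks_valid(model_id: str) -> bool:
    """Rough check that a model name has the 'owner/name' shape."""
    owner, sep, name = model_id.partition("/")
    has_both_parts = bool(owner) and sep == "/" and bool(name)
    return has_both_parts and "/" not in name and model_id == model_id.strip()


for key, value in diagnose().items():
    print(f"{key}: {value}")
```

If `installed` is reported as false, the interpreter shown under `python` is probably not the one pip installed mlx-lm into. The `system` and `machine` values are included because MLX was designed for Apple silicon.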


Conclusion

With mlx-lm installed, loading the Qwen1.5-MoE-A2.7B model and generating text takes only a few lines of Python. Follow the steps above, then experiment with your own prompts.
