The rinna/youri-7b-instruction model is an instruction-tuned language model for working with Japanese text, for example translating it into English. This blog article walks you through using the model and offers troubleshooting tips along the way.

Overview

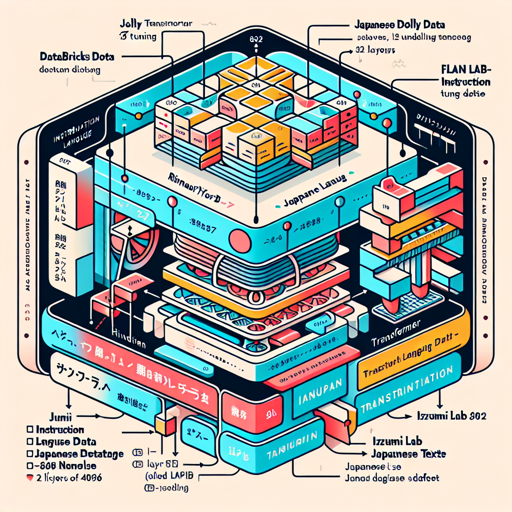

This language model is an instruction-tuned version of rinna/youri-7b. It uses a transformer architecture with 32 layers and a hidden size of 4096, and it has been fine-tuned on several datasets to improve how well it follows instructions.
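To get a feel for what those two numbers imply, here is a rough back-of-the-envelope parameter count. The layer count and hidden size come from the description above; the vocabulary size (32000), feed-forward size (11008), untied embeddings, and absence of bias terms are assumptions taken from the published Llama 2 7B configuration, so treat the result as an estimate rather than an official figure.

```python
# Rough parameter count for a Llama-2-7B-shaped decoder.
# From the article: 32 layers, hidden size 4096.
# Assumed (not stated in the article): vocabulary size 32000,
# feed-forward (intermediate) size 11008, untied input/output
# embeddings, and no bias terms.

def count_parameters(num_layers=32, hidden_size=4096,
                     intermediate_size=11008, vocab_size=32000):
    embeddings = vocab_size * hidden_size       # input token embeddings
    lm_head = vocab_size * hidden_size          # output projection
    attention = 4 * hidden_size * hidden_size   # Q, K, V and output projections
    mlp = 3 * hidden_size * intermediate_size   # gate, up and down projections
    norms = 2 * hidden_size                     # two RMSNorm weights per layer
    per_layer = attention + mlp + norms
    final_norm = hidden_size                    # norm applied before the output head
    return embeddings + lm_head + num_layers * per_layer + final_norm

total = count_parameters()
print(f"{total:,} parameters (~{total / 1e9:.2f}B)")
```

Under these assumptions the count comes out at roughly 6.7 billion, which is where the "7B" in the model name comes from.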

Fine-tuning the Model

- Databricks Dolly data

- Japanese Databricks Dolly data

- FLAN Instruction Tuning data and its Japanese translation

- Izumi lab LLM Japanese dataset

Selected sections of various other corpora are also included, with care taken to avoid data leakage.

How to Use the Model

To get started, make sure the torch and transformers libraries are installed. The following script loads the model and asks it to translate a Japanese passage into English:

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and model from the Hugging Face Hub.
tokenizer = AutoTokenizer.from_pretrained("rinna/youri-7b-instruction")
model = AutoModelForCausalLM.from_pretrained("rinna/youri-7b-instruction")

# Move the model to the GPU when one is available.
if torch.cuda.is_available():
    model = model.to('cuda')

instruction = "次の日本語を英語に翻訳してください。"
# Named input_text so it does not shadow Python's built-in input().
input_text = "大規模言語モデル(だいきぼげんごモデル、英: large language model、LLM)は、多数のパラメータ(数千万から数十億)を持つ人工ニューラルネットワークで構成されるコンピュータ言語モデルで、膨大なラベルなしテキストを使用して自己教師あり学習または半教師あり学習によって訓練が行われる。"

# Each section of the prompt sits on its own line.
prompt = f"""
以下は、タスクを説明する指示と、文脈のある入力の組み合わせです。要求を適切に満たす応答を書きなさい。

### 指示:
{instruction}

### 入力:
{input_text}

### 応答:
"""

token_ids = tokenizer.encode(prompt, add_special_tokens=False, return_tensors='pt')

with torch.no_grad():
    output_ids = model.generate(
        token_ids.to(model.device),
        max_new_tokens=200,
        do_sample=True,
        temperature=0.5,
        pad_token_id=tokenizer.pad_token_id,
        bos_token_id=tokenizer.bos_token_id,
        eos_token_id=tokenizer.eos_token_id,
    )

# The decoded text contains the prompt followed by the generated response.
output = tokenizer.decode(output_ids.tolist()[0])
print(output)
```

This code builds a prompt from an instruction and an input passage, tokenizes it, and asks the model to generate a response. Think of it as a trip to a well-organized library: you gather the books you need (the instruction and the input), put them in your backpack (tokenization), and then head to the reading room (the model) to interpret the texts (generate output).
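If you plan to send more than one request, it can help to wrap the prompt layout in a small helper and to strip the prompt back out of the decoded text. The sketch below is one way to do that. The function names `build_prompt` and `extract_response` are hypothetical and not part of transformers or the model itself, and the layout assumes an Alpaca-style template with a leading newline and blank lines between sections. The `</s>` end-of-sequence string is the Llama 2 default and is passed as a parameter in case your tokenizer differs.

```python
# Hypothetical helpers for illustration; these names are not part of
# transformers or of the model's own code.

SYSTEM_TEXT = (
    "以下は、タスクを説明する指示と、文脈のある入力の組み合わせです。"
    "要求を適切に満たす応答を書きなさい。"
)
RESPONSE_MARKER = "### 応答:"


def build_prompt(instruction: str, input_text: str) -> str:
    """Assemble the instruction / input / response prompt.

    Assumption: the prompt starts with a newline and the sections are
    separated by blank lines.
    """
    return (
        f"\n{SYSTEM_TEXT}\n\n"
        f"### 指示:\n{instruction}\n\n"
        f"### 入力:\n{input_text}\n\n"
        f"{RESPONSE_MARKER}\n"
    )


def extract_response(decoded: str, eos_token: str = "</s>") -> str:
    """Return the text after the response marker, without the EOS token.

    Returns an empty string when the marker is not present.
    """
    _, _, answer = decoded.partition(RESPONSE_MARKER)
    return answer.replace(eos_token, "").strip()


prompt = build_prompt("次の日本語を英語に翻訳してください。", "こんにちは。")

# Stand-in for tokenizer.decode(...): the prompt followed by a reply.
fake_output = prompt + "Hello.</s>"
print(extract_response(fake_output))
```

With a real model you would pass the string returned by `tokenizer.decode` to `extract_response` instead of `fake_output`.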

Tokenization

The model uses the original Llama 2 tokenizer.

Troubleshooting

If you encounter issues while running the model, consider the following troubleshooting tips:

- Ensure that you have installed the transformers library correctly.

- Check whether your system supports CUDA if you're trying to run it on a GPU.

- Review the input format as improper formatting could lead to errors.
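The first two checks can be automated with a few lines of Python. This is a minimal sketch: `check_environment` is a hypothetical helper name, and it only confirms that the packages can be found and whether torch reports a usable CUDA device. It does not verify versions or GPU memory.

```python
import importlib.util


def check_environment(packages=("torch", "transformers")):
    """Report which packages can be found and whether CUDA is visible."""
    report = {
        name: importlib.util.find_spec(name) is not None
        for name in packages
    }
    report["cuda"] = False
    if report.get("torch"):
        try:
            import torch
            report["cuda"] = bool(torch.cuda.is_available())
        except Exception:
            # A broken torch install counts as "no CUDA" for this check.
            report["cuda"] = False
    return report


report = check_environment()
for name, ok in report.items():
    print(f"{name}: {'available' if ok else 'not available'}")
```

If transformers shows as not available, reinstall it. If cuda shows as not available, the script from the usage section will still run, but on the CPU.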


Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.