Welcome to our guide on using SpeechTokenizer, a tool designed for speech large language models! Whether you’re a developer, researcher, or just curious, this article walks you through installing, using, and troubleshooting it.

What is SpeechTokenizer?

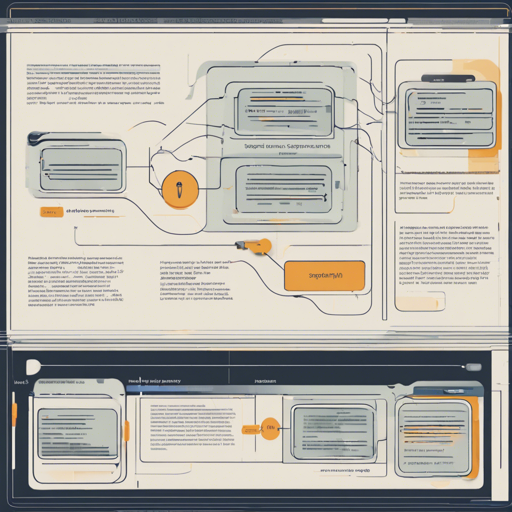

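SpeechTokenizer is a unified speech tokenizer for speech large language models. It uses an encoder-decoder architecture with residual vector quantization (RVQ) and disentangles different aspects of speech information hierarchically across the RVQ layers. The first layer yields semantic tokens that capture the content. The remaining layers yield acoustic tokens that add supplementary acoustic detail. This split is designed to improve the performance of speech large language models built on these tokens.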
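RVQ is what gives the tokens their layered structure. Each quantizer layer encodes the residual error left by the layers before it, so the first layer captures the coarsest information and later layers add finer detail. Below is a minimal NumPy sketch of generic RVQ with random codebooks. It is not SpeechTokenizer's implementation, which learns its codebooks and trains the first layer against a semantic teacher. The helper names `rvq_encode` and `rvq_decode` are hypothetical.

```python
import numpy as np


def rvq_encode(x, codebooks):
    """Quantize frames x of shape (T, D) with residual vector quantization.

    codebooks: list of n_q arrays, each of shape (K, D).
    Returns integer codes of shape (n_q, T), one row per quantizer layer.
    """
    residual = x.copy()
    codes = []
    for cb in codebooks:
        # Squared distance from every residual frame to every codeword: (T, K)
        dists = ((residual[:, None, :] - cb[None, :, :]) ** 2).sum(axis=-1)
        idx = dists.argmin(axis=1)     # nearest codeword for each frame
        codes.append(idx)
        residual = residual - cb[idx]  # the next layer quantizes what is left over
    return np.stack(codes)


def rvq_decode(codes, codebooks):
    """Reconstruct frames by summing the chosen codeword of each layer."""
    return sum(cb[idx] for cb, idx in zip(codebooks, codes))


rng = np.random.default_rng(0)
T, D, K, n_q = 50, 8, 16, 4  # illustrative sizes
# Random codebooks with shrinking scale, standing in for learned ones
codebooks = [rng.normal(size=(K, D)) * 0.5 ** q for q in range(n_q)]
for cb in codebooks:
    cb[0] = 0.0  # a zero codeword guarantees no layer can increase the error

x = rng.normal(size=(T, D))
codes = rvq_encode(x, codebooks)  # (n_q, T)

# Mean squared reconstruction error when decoding with the first n layers
errors = [float(np.mean((x - rvq_decode(codes[:n], codebooks[:n])) ** 2))
          for n in range(1, n_q + 1)]
print(codes.shape, errors)
```

The reconstruction is a sum over layers, so any prefix of the layers (the first n rows of `codes`) can be decoded on its own. Adding layers can only refine the result. This mirrors how SpeechTokenizer can decode from just its first few RVQ layers.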

Installation

Before you can dive into using SpeechTokenizer, you need Python 3.8 or later and a reasonably recent version of PyTorch. Once those are in place, install SpeechTokenizer in one of two ways:

- From PyPI:

```bash
pip install -U speechtokenizer
```

- Or clone the repository and install it locally:

```bash
git clone https://github.com/ZhangXInFD/SpeechTokenizer.git
cd SpeechTokenizer
pip install .
```

Usage

Once you’ve installed the SpeechTokenizer, you can start using it effectively by following these steps:

1. Model Storage

This guide uses the speechtokenizer_hubert_avg model. During training, it uses the average of the representations across all HuBERT layers as its semantic teacher. To load it, you need its config file and its checkpoint file.
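Here is a short sketch of the idea behind a layer-averaged semantic teacher. Suppose you already have per-layer HuBERT hidden states for an utterance, each of shape (T, D). The teacher target is their element-wise mean. The sketch then scores a hypothetical student representation with frame-wise cosine similarity. The student stands in for a projection of the first RVQ layer's output. The random arrays, sizes, function names, and cosine score are all illustrative assumptions. They are not the exact distillation loss used in SpeechTokenizer's training code.

```python
import numpy as np


def average_layer_target(hidden_states):
    """Average a list of per-layer representations, each (T, D), into one (T, D) target."""
    return np.mean(np.stack(hidden_states, axis=0), axis=0)


def mean_cosine_similarity(student, teacher, eps=1e-8):
    """Frame-wise cosine similarity between two (T, D) arrays, averaged over frames."""
    num = (student * teacher).sum(axis=-1)
    den = np.linalg.norm(student, axis=-1) * np.linalg.norm(teacher, axis=-1) + eps
    return float(np.mean(num / den))


rng = np.random.default_rng(0)
n_layers, T, D = 12, 100, 768  # illustrative sizes (HuBERT Base has 12 layers of width 768)
hidden_states = [rng.normal(size=(T, D)) for _ in range(n_layers)]  # random stand-ins

teacher = average_layer_target(hidden_states)      # (T, D) semantic target
student = teacher + 0.1 * rng.normal(size=(T, D))  # hypothetical student close to the target
print(teacher.shape, mean_cosine_similarity(student, teacher))
```

Averaging over all layers blends what the different HuBERT layers encode into a single target. That is the design choice the speechtokenizer_hubert_avg name refers to.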

2. Load the Model

- Let’s start by loading the model:

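```python
from speechtokenizer import SpeechTokenizer

# Paths to the downloaded config and checkpoint files (replace with your own)
config_path = 'path/config.json'
ckpt_path = 'path/SpeechTokenizer.pt'

# Build the model from its config and load the trained weights
model = SpeechTokenizer.load_from_checkpoint(config_path, ckpt_path)
model.eval()  # switch to inference mode
```

3. Extracting Discrete Representations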

To get the discrete representations from an audio clip, follow these steps:

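```python
import torch
import torchaudio

SPEECH_FILE_PATH = 'path/speech.wav'  # replace with your own audio file

# Load and pre-process speech waveform
wav, sr = torchaudio.load(SPEECH_FILE_PATH)  # wav: (channels, samples)

# The model expects mono input, so keep only the first channel
if wav.shape[0] > 1:
    wav = wav[:1, :]

# Resample to the rate the model was trained on
if sr != model.sample_rate:
    wav = torchaudio.functional.resample(wav, sr, model.sample_rate)

wav = wav.unsqueeze(0)  # add a batch dimension: (1, 1, samples)

# Extract discrete codes from SpeechTokenizer
with torch.no_grad():
    codes = model.encode(wav)  # codes: (n_q, B, T)

semantic_tokens = codes[0, :, :]   # first RVQ layer: (B, T)
acoustic_tokens = codes[1:, :, :]  # remaining RVQ layers: (n_q - 1, B, T)
```

4. Decoding Discrete Representations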

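To decode the extracted codes back into a waveform, use any of the following patterns:

```python
# Example layer indices; choose your own (0 <= i <= j < n_q)
i, j = 0, 3

with torch.no_grad():
    # Decode using the first i + 1 RVQ layers
    wav = model.decode(codes[:(i + 1)])  # wav: (B, 1, T)

    # Decode using RVQ layers i..j; st=i gives the index of the first layer in the slice
    wav = model.decode(codes[i:(j + 1)], st=i)

    # Concatenate semantic and acoustic tokens along the layer axis, then decode.
    # Here both come from codes; any source works as long as the shapes line up.
    semantic_tokens = codes[:1]  # keep the layer axis: (1, B, T)
    acoustic_tokens = codes[1:]  # (n_q - 1, B, T)
    wav = model.decode(torch.cat([semantic_tokens, acoustic_tokens], dim=0))
```

Understanding the Code: An Analogy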

Think of the SpeechTokenizer process like a chef preparing a gourmet dish. The chef (the model) works with a set of ingredients (the discrete codes). These divide into primary items (semantic tokens, which carry the content) and supplementary items (acoustic tokens, which add details such as the speaker's timbre). When cooked (decoded), the ingredients combine into a finished meal (the reconstructed audio). The primary items alone already convey the content, and the supplementary items make the result richer and more faithful to the original.
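In tensor terms, the "ingredients" are just rows of the codes array returned by model.encode, shaped (n_q, B, T). The NumPy sketch below shows which slices select semantic and acoustic tokens and how to put them back together. A random integer array stands in for real codes, and the sizes and the token range are made up. NumPy indexing behaves the same way as PyTorch indexing here.

```python
import numpy as np

rng = np.random.default_rng(0)
n_q, B, T = 8, 2, 50  # made-up sizes: quantizer layers, batch, frames
codes = rng.integers(0, 1024, size=(n_q, B, T))  # stand-in for model.encode(wav)

semantic_row = codes[0]      # (B, T): the layer axis is dropped
semantic_tokens = codes[:1]  # (1, B, T): the layer axis is kept
acoustic_tokens = codes[1:]  # (n_q - 1, B, T)

# Concatenating along the layer axis restores the full (n_q, B, T) codes,
# which is the layout model.decode expects
recombined = np.concatenate([semantic_tokens, acoustic_tokens], axis=0)

# Prefixes codes[:k] select the first k layers, as in model.decode(codes[:(i + 1)])
prefix_shapes = [codes[:k].shape for k in range(1, n_q + 1)]

print(semantic_row.shape, semantic_tokens.shape, recombined.shape)
# → (2, 50) (1, 2, 50) (8, 2, 50)
```

Concatenating semantic_row directly with acoustic_tokens would fail, because the two arrays have different numbers of dimensions. That is why codes[:1] is the safer slice whenever semantic tokens are recombined.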

Troubleshooting

If you encounter issues during installation or usage, here are some troubleshooting tips:

- Ensure that your Python version is 3.8 or later, as the project requires.

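- Make sure your PyTorch installation is reasonably recent and matches your system (a CPU-only build or one built for your CUDA version). Also check that torchaudio, which the examples use, matches your PyTorch version.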

- If you’re encountering errors while loading the model, verify that the paths to the config and checkpoint files are correct.

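- If the decoded audio sounds wrong (for example, sped up, slowed down, or pitch-shifted), check two things. The input should have been resampled to model.sample_rate, and you should save or play the output at that same rate.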

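- When decoding, check that the codes tensor has shape (n_q, B, T). In particular, slice semantic tokens as codes[:1] rather than codes[0] before concatenating them with acoustic tokens, since codes[0] drops the layer dimension.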
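Several of these checks can be automated. The sketch below uses only the Python standard library. It reports whether the Python version is 3.8 or later, whether torch, torchaudio, and speechtokenizer can be imported, and whether the config and checkpoint paths exist. The function name check_setup and the example paths are hypothetical; substitute your own.

```python
import importlib.util
import os
import sys


def check_setup(config_path, ckpt_path):
    """Return a dict of simple pass/fail checks for a SpeechTokenizer setup."""
    report = {"python>=3.8": sys.version_info >= (3, 8)}
    # find_spec returns None when a top-level package cannot be found
    for pkg in ("torch", "torchaudio", "speechtokenizer"):
        report[f"{pkg} installed"] = importlib.util.find_spec(pkg) is not None
    # Missing or mistyped paths are a common cause of model-loading errors
    report["config found"] = os.path.isfile(config_path)
    report["checkpoint found"] = os.path.isfile(ckpt_path)
    return report


if __name__ == "__main__":
    results = check_setup("path/config.json", "path/SpeechTokenizer.pt")
    for name, ok in results.items():
        print(f"{'OK  ' if ok else 'FAIL'} {name}")
```

This does not replace actually loading the model. It only narrows down which of the common problems above you are hitting.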

For more insights, updates, or to collaborate on AI development projects, stay connected with **[fxis.ai](https://fxis.ai)**.

Conclusion

With the SpeechTokenizer, you’re now equipped to extract and manipulate speech features effectively.