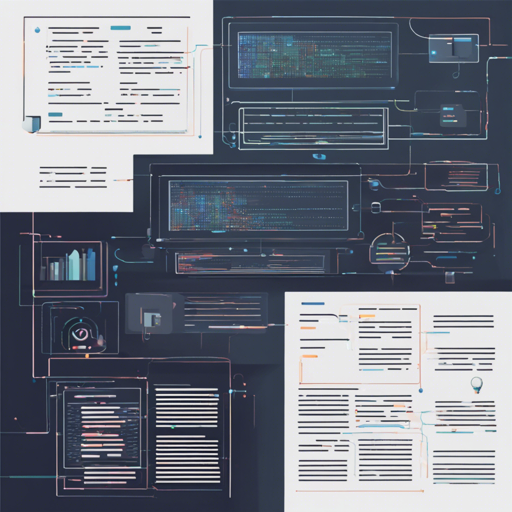

This guide covers the UniXcoder-base model for code representation. It walks through installing and using this cross-modal pre-trained model, developed by Microsoft, and shows how to apply it to tasks such as code search, completion, and summarization.

Model Details

UniXcoder is a pre-trained model that uses multimodal data to improve code understanding. It draws on both code comments and abstract syntax trees (ASTs) when learning code representations. The essential details are:

- Model type: Feature Engineering

- Language: English (NLP)

- License: Apache-2.0

- Parent Model: RoBERTa

- Related Paper: Associated Paper

Getting Started

First, install the necessary dependencies:

pip install torch

pip install transformers

Quick Tour of UniXcoder

To use UniXcoder, download the unixcoder.py helper script and load the model onto your device. The leading ! in the first command is Jupyter/Colab notebook syntax; from a regular shell, run wget without it.

!wget https://raw.githubusercontent.com/microsoft/CodeBERT/master/UniXcoder/unixcoder.py

import torch

from unixcoder import UniXcoder

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = UniXcoder("microsoft/unixcoder-base")

model.to(device)

Here’s an analogy to help you understand this process better: Think of UniXcoder as a chef in a high-tech kitchen. The first step involves gathering all the necessary ingredients (dependencies) and tools (code) required to whip up a delicious dish (in this case, code representation). Once you have everything, you can start cooking!

Different Modes of Operation

UniXcoder supports three modes of operation:

1. Encoder-only Mode

This mode is ideal for tasks like code search. You can use this mode for encoding a function:

# Encode a function

func = "def f(a,b): return max(a,b)"

tokens_ids = model.tokenize([func], max_length=512, mode="<encoder-only>")

source_ids = torch.tensor(tokens_ids).to(device)

tokens_embeddings, max_func_embedding = model(source_ids)

In our kitchen analogy, this represents measuring out the ingredients you’ll need for a specific dish before cooking.
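Code search with encoder-only embeddings usually comes down to comparing vectors. The following is a minimal sketch of that comparison step, assuming each embedding has already been converted to a plain list of floats (for instance with the tensor's `.tolist()` method). The helper names `cosine_similarity` and `rank_candidates` are hypothetical, and the three-dimensional vectors are made-up stand-ins for real embeddings.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen for this sketch: zero vectors match nothing
    return dot / (norm_a * norm_b)


def rank_candidates(
    query: Sequence[float], candidates: Dict[str, Sequence[float]]
) -> List[Tuple[str, float]]:
    """Sort candidate names by similarity to the query, best match first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real embeddings (made-up values).
query_vec = [1.0, 0.0, 1.0]
candidate_vecs = {
    "max_func": [0.9, 0.1, 0.8],
    "min_func": [-1.0, 0.2, -0.7],
}
ranking = rank_candidates(query_vec, candidate_vecs)
print(ranking[0][0])  # name of the closest candidate
```

For large candidate sets, the same computation is typically done in batch on tensors rather than in pure Python; the list-based version here is only meant to make the logic explicit.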

2. Decoder-only Mode

In this mode, you can complete code snippets. For example:

context = "def f(data,file_path):"

tokens_ids = model.tokenize([context], max_length=512, mode="<decoder-only>")

source_ids = torch.tensor(tokens_ids).to(device)

prediction_ids = model.generate(source_ids, decoder_only=True, beam_size=3, max_length=128)

predictions = model.decode(prediction_ids)

print(context + predictions[0][0])

This is akin to the chef adding final touches to a dish for presentation.
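With `beam_size=3`, the decoded result holds several candidates per input, and the example above prints only the first. The sketch below shows one way to inspect all of them, assuming `predictions` is a list (one entry per input) of lists of candidate strings. The helper `build_completions` is hypothetical, and the candidate strings are placeholders rather than real model output.

```python
from typing import List


def build_completions(context: str, predictions: List[List[str]]) -> List[str]:
    """Prepend the prompt to every beam candidate generated for the first input."""
    return [context + candidate for candidate in predictions[0]]


context = "def f(data,file_path):"
# Placeholder strings standing in for decoded model output (not real predictions).
predictions = [["\n    pass", "\n    return data", "\n    print(file_path)"]]

for i, completion in enumerate(build_completions(context, predictions)):
    print(f"--- candidate {i} ---")
    print(completion)
```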

3. Encoder-Decoder Mode

This mode supports tasks such as function name prediction and API recommendation. The span to be predicted is marked with a mask token:

context = "def <mask0>(data, file_path):"

tokens_ids = model.tokenize([context], max_length=512, mode="<encoder-decoder>")

source_ids = torch.tensor(tokens_ids).to(device)

prediction_ids = model.generate(source_ids, decoder_only=False, beam_size=3, max_length=128)

predictions = model.decode(prediction_ids)

print(predictions[0])

Here, it’s like presenting the finished dish and receiving feedback on its taste, allowing adjustments for better outcomes.
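The upstream UniXcoder examples mark the span to predict with a `<mask0>` token, and decoded candidates may still carry that token. A small cleanup pass, sketched below, strips it before display. The helper name `clean_mask_predictions` is hypothetical, and the raw strings are placeholders rather than real model output.

```python
from typing import List


def clean_mask_predictions(candidates: List[str], mask_token: str = "<mask0>") -> List[str]:
    """Remove the mask token and surrounding whitespace from each candidate."""
    return [candidate.replace(mask_token, "").strip() for candidate in candidates]


# Placeholder strings standing in for predictions[0] (not real model output).
raw_candidates = ["<mask0>write_json", "<mask0> dump_data ", "<mask0>save"]
cleaned = clean_mask_predictions(raw_candidates)
print(cleaned)
```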

Troubleshooting

If you encounter any issues while implementing UniXcoder, here are some troubleshooting tips:

- Ensure all dependencies are correctly installed; re-run the installation commands if necessary.

- Check your device compatibility. If you are having issues with CUDA, ensure that your GPU drivers are up to date.

- Make sure that the model is correctly referenced and that you’re using the right version. Verify this by checking the model’s official repository.

- For further assistance, consult community forums or the [documentation from Hugging Face](https://huggingface.co/docs).
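The first two checks in the list above can be partly automated. The sketch below reports which packages are importable and, if PyTorch is present, whether CUDA is visible to it. The function name `environment_report` and the shape of the returned dictionary are choices made for this example, not part of any library.

```python
import importlib.util
import sys
from typing import Dict, Sequence


def environment_report(packages: Sequence[str] = ("torch", "transformers")) -> Dict[str, str]:
    """Collect the Python version, package availability, and CUDA visibility."""
    report = {"python": sys.version.split()[0]}
    for name in packages:
        found = importlib.util.find_spec(name) is not None
        report[name] = "installed" if found else "missing"
    if report.get("torch") == "installed":
        import torch  # imported lazily so the check also runs without PyTorch

        report["cuda_available"] = str(torch.cuda.is_available())
    return report


for key, value in environment_report().items():
    print(f"{key}: {value}")
```

A "missing" entry points back to the installation commands, while `cuda_available: False` on a machine with a GPU suggests a driver problem or a CPU-only PyTorch build.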


Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.