Welcome to the world of DeepSeek Coder! This family of code language models applies machine learning to code completion and chat-style coding assistance. Let’s look at how you can use it in your own programming work.

1. An Introduction to DeepSeek Coder

DeepSeek Coder is a series of code language models, each trained from scratch on 2 trillion tokens. The training data is 87% code and 13% natural language in English and Chinese. The models come in sizes ranging from 1.3B to 33B parameters, so both beginners and seasoned developers can pick one that fits their hardware and task.
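To make the data mix concrete, the short calculation below splits the 2 trillion training tokens by the stated percentages. It is plain arithmetic on the figures quoted above, not something taken from the DeepSeek codebase, and the names `split_tokens`, `TOTAL_TOKENS`, and so on are illustrative only.

```python
# Figures quoted in the paragraph above; all names here are illustrative.
TOTAL_TOKENS = 2_000_000_000_000  # 2 trillion training tokens
CODE_PERCENT = 87
NATURAL_LANGUAGE_PERCENT = 13


def split_tokens(total, code_percent, nl_percent):
    """Return (code_tokens, natural_language_tokens) using integer arithmetic."""
    if code_percent + nl_percent != 100:
        raise ValueError("percentages must sum to 100")
    code_tokens = total * code_percent // 100
    return code_tokens, total - code_tokens


code_tokens, nl_tokens = split_tokens(
    TOTAL_TOKENS, CODE_PERCENT, NATURAL_LANGUAGE_PERCENT
)
print(f"code: {code_tokens / 1e12:.2f}T tokens")
print(f"natural language: {nl_tokens / 1e12:.2f}T tokens")
```

Under these figures the split works out to roughly 1.74 trillion code tokens and 0.26 trillion natural-language tokens.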

2. Model Summary

This guide focuses on deepseek-coder-1.3b-instruct, a 1.3B-parameter model that is initialized from deepseek-coder-1.3b-base and fine-tuned on 2 billion tokens of instruction data so that it can follow coding requests.

- Home Page: DeepSeek

- Repository: deepseek-ai/deepseek-coder

- Chat With DeepSeek Coder: DeepSeek-Coder

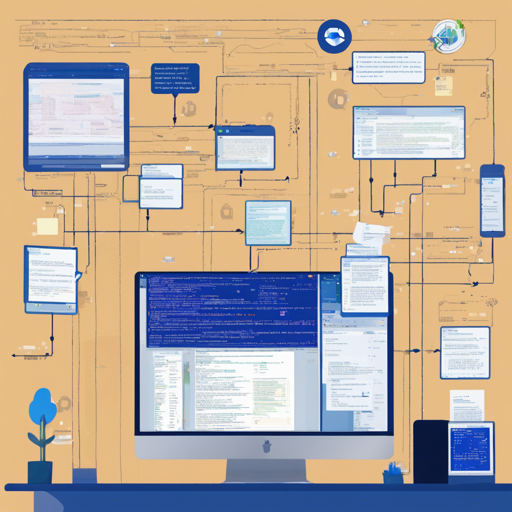

3. How to Use DeepSeek Coder

Now, let’s see how to use this model in your own projects. The Python example below runs chat-style inference with the Hugging Face transformers library. It assumes PyTorch is installed and a CUDA-capable GPU is available.

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load the tokenizer and the model in bfloat16, then move the model to the GPU.
tokenizer = AutoTokenizer.from_pretrained("deepseek-ai/deepseek-coder-1.3b-instruct", trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained("deepseek-ai/deepseek-coder-1.3b-instruct", trust_remote_code=True, torch_dtype=torch.bfloat16).cuda()

# Chat history: a list of dicts, each with a role and its content.
messages = [
    {'role': 'user', 'content': "write a quick sort algorithm in python."}
]

# Render the chat template and append the prompt that starts the model's reply.
inputs = tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt").to(model.device)

# do_sample=False selects greedy decoding, so top_k and top_p have no effect here.
outputs = model.generate(inputs, max_new_tokens=512, do_sample=False, top_k=50, top_p=0.95, num_return_sequences=1, eos_token_id=tokenizer.eos_token_id)

# Decode only the newly generated tokens, skipping the prompt.
print(tokenizer.decode(outputs[0][len(inputs[0]):], skip_special_tokens=True))

4. Explanation with an Analogy

Imagine you’re in a busy restaurant (your coding environment) where the server is your AI model. You place an order (a request for code assistance), and, drawing on prior dining experience (the 2 trillion tokens of training), the server brings you one of the best meals available (a code snippet). Just as a good server can handle various cuisines, DeepSeek Coder can handle many programming languages and tasks, aiming to serve a suitable dish for each request.

5. Troubleshooting Tips

If you run into problems while using DeepSeek Coder, try the following:

- Issue with Model Download: Ensure your internet connection is stable. If the download was interrupted, try downloading the model again.

- Performance Issues: Check that your machine has enough RAM and GPU memory for the model size you chose. The example above calls .cuda(), so it expects a CUDA-capable GPU. Restarting the Python process can also free memory held by a previous run.

- Tokenization Errors: Verify that messages is a list of dictionaries, each with a 'role' and a 'content' key, and check for syntax errors such as missing quotes or brackets.
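The last two checks can be sketched as small pre-flight helpers that run before the model is loaded. This is a minimal sketch under stated assumptions, not part of DeepSeek Coder or transformers: `check_messages` and `estimate_weight_gib` are hypothetical names, the accepted roles are an assumption about typical chat templates, and the memory figure counts only the weights at 2 bytes per parameter (bfloat16, as in the example above), ignoring activations and other runtime overhead.

```python
# Hypothetical pre-flight helpers; not part of transformers or DeepSeek Coder.

ALLOWED_ROLES = ("system", "user", "assistant")  # assumption: common chat roles
BYTES_PER_BF16_PARAM = 2  # bfloat16 stores each parameter in 2 bytes


def check_messages(messages):
    """Return a list of problems found in a chat `messages` list (empty if none)."""
    if not isinstance(messages, list) or not messages:
        return ["messages must be a non-empty list of dicts"]
    problems = []
    for index, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"messages[{index}] is not a dict")
            continue
        role = message.get("role")
        content = message.get("content")
        if role not in ALLOWED_ROLES:
            problems.append(f"messages[{index}] has an unexpected role: {role!r}")
        if not isinstance(content, str) or not content.strip():
            problems.append(f"messages[{index}] needs a non-empty string 'content'")
    return problems


def estimate_weight_gib(num_parameters, bytes_per_parameter=BYTES_PER_BF16_PARAM):
    """Rough lower bound on the memory needed for the weights alone, in GiB."""
    return num_parameters * bytes_per_parameter / 2**30


messages = [{"role": "user", "content": "write a quick sort algorithm in python."}]
print(check_messages(messages))  # an empty list means no problems were found
print(f"1.3B parameters: ~{estimate_weight_gib(1.3e9):.1f} GiB of weights")
print(f"33B parameters: ~{estimate_weight_gib(33e9):.1f} GiB of weights")
```

For the nominal 1.3B and 33B sizes this gives roughly 2.4 GiB and 61.5 GiB for the weights alone, so treat the numbers as a floor when deciding whether a model will fit on your GPU.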

6. License Information

The DeepSeek Coder code repository is licensed under the MIT License. Use of the models themselves is subject to the Model License, which permits commercial use. For details, see LICENSE-MODEL in the repository.

7. Final Thoughts

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.