Models that combine vision and language capabilities can work with images and text together, which opens up many new applications. One such model, InternVL-Chat-ViT-6B, pairs a large vision encoder with a language model to support multimodal dialogue. This article explains how to run the model and offers troubleshooting tips.

What is InternVL?

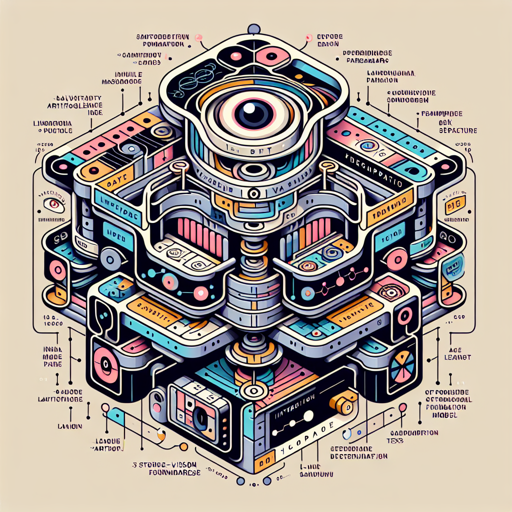

InternVL scales the Vision Transformer (ViT) architecture up to 6 billion parameters and aligns it with a large language model. It was trained on publicly available image-text pairs drawn from datasets including LAION-en, LAION-multi, and COYO. The 6 billion figure refers to the vision encoder; the full model has 14 billion parameters, which made it the largest open-source vision-language foundation model at the time of its release. It reports state-of-the-art results on 32 benchmarks covering tasks such as visual perception and multimodal dialogue.

How to Run InternVL?

To kickstart your journey with InternVL, follow these steps:

- Visit the project on GitHub.

- Refer to the comprehensive README document for installation guidelines and usage details.

- Check the updated documentation that accompanies the original instructions, since most procedures rely on it.

Understanding the Core Model Details

InternVL-Chat isn’t just any chatbot; it is built by fine-tuning LLaMA/Vicuna on multimodal instruction-following data. Think of it like assembling a puzzle: each dataset is a piece, and together the pieces form a coherent picture. The model not only processes language but also supports rich visual interactions, making it a valuable tool for both researchers and hobbyists.

Data and Training Insights

The model is trained on the following data:

- 558K filtered image-text pairs from LAION, CC, and SBU.

- 158K GPT-generated multimodal instruction-following samples.

- A mix of academic-task-oriented VQA data totaling 450K.

- 40K ShareGPT data for enhanced learning.
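
To get a feel for the relative weight of each source, the figures listed above can be tallied in a few lines of Python. This is only a minimal sketch: the dictionary keys are illustrative labels chosen here rather than official dataset identifiers, the counts are the rounded values from the list, and it assumes the four sources do not overlap.

```python
# Rounded sample counts as listed above (in thousands of samples).
# The keys are illustrative labels, not official dataset identifiers.
DATA_MIX_K = {
    "filtered image-text pairs": 558,
    "GPT-generated instruction data": 158,
    "academic VQA mixture": 450,
    "ShareGPT": 40,
}


def summarize(mix):
    """Return the total count and each source's share of that total."""
    total = sum(mix.values())
    shares = {name: count / total for name, count in mix.items()}
    return total, shares


total_k, shares = summarize(DATA_MIX_K)

if __name__ == "__main__":
    print(f"Total: {total_k}K samples")
    for name, share in shares.items():
        print(f"  {name}: {share:.1%}")
```

Under these assumptions the mix comes to roughly 1.2 million samples, with the image-text pairs and the VQA mixture making up the bulk of it.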

Intended Use and Audience

The primary users of InternVL-Chat include researchers and enthusiasts focused on computer vision, natural language processing, machine learning, and AI. Its capabilities extend beyond mere research; it serves as an exploration tool for innovative dialogue systems.

Troubleshooting Tips

If you encounter any issues while using InternVL-Chat, consider the following steps:

- Ensure that you are using compatible Python versions and dependencies as stated in the README document.

- If the model doesn’t run as expected, check for error messages and consult the GitHub issues page for solutions.

- If the problem persists, ask the community on GitHub for advice.

- For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
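
The first troubleshooting step, checking the Python version and dependencies, can be partly automated. The sketch below uses only the standard library to report the interpreter version and the installed versions of a few packages; the output is also handy to paste into a GitHub issue. The package list and the minimum Python version are placeholders assumed for illustration, so take the real requirements from the README.

```python
import platform
import sys
from importlib import metadata

# Hypothetical values for illustration only; replace them with the
# requirements stated in the project's README.
MIN_PYTHON = (3, 8)
PACKAGES = ["torch", "transformers"]


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def environment_report(packages=PACKAGES, min_python=MIN_PYTHON):
    """Collect interpreter and package details into a plain dict."""
    return {
        "python": platform.python_version(),
        "python_ok": sys.version_info[:2] >= min_python,
        "platform": platform.platform(),
        "packages": {name: installed_version(name) for name in packages},
    }


if __name__ == "__main__":
    report = environment_report()
    print(f"Python {report['python']} (meets minimum: {report['python_ok']})")
    print(f"Platform: {report['platform']}")
    for name, version in report["packages"].items():
        print(f"  {name}: {version or 'not installed'}")
```

A package reported as not installed, or a version that differs from the one the README asks for, is a good first thing to fix before digging into error messages.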

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.