Are you ready to explore the world of model quantization using llama.cpp? Today, we’ll look at quantized versions of the DeepSeek-Coder-V2-Lite-Instruct model: what quantization does, which file to pick, and how to download it. Buckle up as we make this process as straightforward as possible!

Understanding Quantization

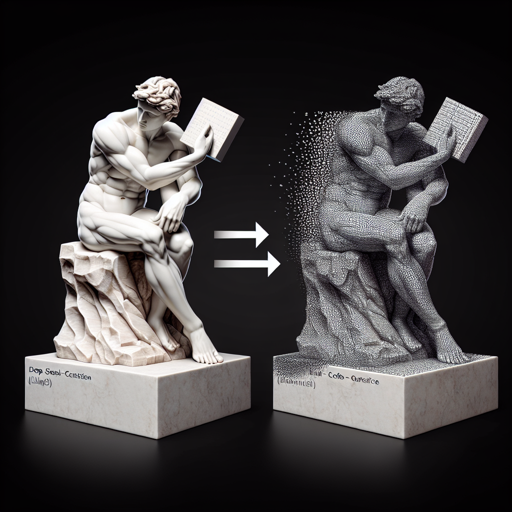

Quantization reduces the precision of the weights and activations in a neural network, thereby allowing the model to run faster and consume less memory. Think of it as converting a beautifully crafted, heavy marble sculpture into a lightweight, yet still strikingly beautiful stone replica. While the details might be slightly less defined, the essence remains intact!
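To make the idea concrete, here is a minimal sketch of one simple scheme, absmax 8-bit quantization, written in plain Python. It is an illustration only and not llama.cpp's actual implementation; the function names and the sample weights are made up for this example.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale (absmax scheme)."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to show the rounding effect.
weights = [0.124, -0.531, 0.987, -1.27, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)          # [12, -53, 99, -127, 0]
print(restored)   # close to the originals, but not identical
print(max_error)  # never more than scale / 2
```

Each weight now needs one small integer instead of a 16- or 32-bit float, at the cost of a rounding error of at most half the scale. That trade of detail for weight is the marble-to-replica exchange described above.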

Setting Up Your Environment

Before working with the quantized model, make sure you have the necessary tools. You can use llama.cpp release b3166 for this task.

Prompt Format

The prompt format is crucial for interacting with the model. Please follow the template below:

<|begin▁of▁sentence|>{system_prompt}User: {prompt}Assistant: <|end▁of▁sentence|>Assistant:

Downloading Model Files

Now, let’s look at how to download the quantized files. Below are several options for the DeepSeek-Coder-V2-Lite-Instruct model:

| Filename | Quant type | File Size | Description |
|---|---|---|---|
| DeepSeek-Coder-V2-Lite-Instruct-Q8_0_L.gguf | Q8_0_L | 17.09GB | Experimental, uses f16 for embed and output weights. |
| DeepSeek-Coder-V2-Lite-Instruct-Q8_0.gguf | Q8_0 | 16.70GB | Extremely high quality, generally unneeded but max available quant. |
| DeepSeek-Coder-V2-Lite-Instruct-Q6_K_L.gguf | Q6_K_L | 14.56GB | Experimental, recommended for very high quality. |
| DeepSeek-Coder-V2-Lite-Instruct-Q6_K.gguf | Q6_K | 14.06GB | Recommended high-quality quant. |
| DeepSeek-Coder-V2-Lite-Instruct-Q5_K_L.gguf | Q5_K_L | 12.37GB | Experimental, feedback appreciated. |

Using huggingface-cli for Downloads

If you prefer command-line operations, here’s how you can leverage the huggingface-cli:

pip install -U "huggingface_hub[cli]"

Now, download the specific file you wish to target by running:

huggingface-cli download bartowski/DeepSeek-Coder-V2-Lite-Instruct-GGUF --include "DeepSeek-Coder-V2-Lite-Instruct-Q4_K_M.gguf" --local-dir ./

Which File Should You Choose?

Choosing the right file is essential for optimal performance. Here’s a quick guide:

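- If you want the model to run as fast as possible, pick a quant whose file size is 1-2GB smaller than your GPU’s VRAM, so the whole model fits on the GPU.

- For the highest quality, pick a quant that’s 1-2GB smaller than the sum of your system RAM and GPU VRAM. Expect it to run slower, since part of the model will sit in system RAM.

- If you’d rather keep things simple, select a K-quant, which follows the naming pattern ‘QX_K_X’ (for example, Q4_K_M).

- If you’re aiming for better performance at a given file size, consider the I-quants, which follow the ‘IQX_X’ naming pattern.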
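The sizing rule in the list above can be sketched as a small helper. The file sizes are copied from the table in this article, which lists only some of the available files. The function name `pick_quant` and the default 2GB headroom are assumptions made for illustration.

```python
# File sizes in GB, copied from the table in this article (not the full file list).
QUANT_SIZES_GB = {
    "Q8_0_L": 17.09,
    "Q8_0": 16.70,
    "Q6_K_L": 14.56,
    "Q6_K": 14.06,
    "Q5_K_L": 12.37,
}


def pick_quant(memory_gb, headroom_gb=2.0):
    """Return the largest listed quant that leaves `headroom_gb` free, or None.

    Pass your GPU's VRAM for the speed-focused choice, or RAM + VRAM
    for the quality-focused choice.
    """
    budget = memory_gb - headroom_gb
    fitting = {name: size for name, size in QUANT_SIZES_GB.items() if size <= budget}
    if not fitting:
        return None  # nothing in this table fits; look at smaller quants
    return max(fitting, key=fitting.get)


print(pick_quant(16))  # Q5_K_L  (budget 14GB; Q6_K at 14.06GB just misses)
print(pick_quant(24))  # Q8_0_L  (everything fits, so the largest is returned)
print(pick_quant(8))   # None
```

The helper looks only at file size. It does not know that the `_L` variants are marked experimental, so treat its answer as a starting point and check the description column before downloading.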

Troubleshooting

If you encounter any issues during the process, here are some common troubleshooting tips:

- Ensure that your system meets the necessary RAM/VRAM requirements for the chosen quant model.

- Double-check that you’ve installed all dependencies correctly, including huggingface-cli.

- If a download fails, try again, ensuring your internet connection is stable.
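A quick sanity check can catch a truncated or failed download before you try to load it. The sketch below relies on the fact that GGUF files begin with the four ASCII bytes `GGUF`. The function name `check_gguf`, the size tolerance, and the assumption that the table's sizes are decimal gigabytes are all illustrative choices.

```python
import os


def check_gguf(path, expected_gb=None, tolerance_gb=0.1):
    """Return a list of problems found; an empty list means the basic checks passed."""
    problems = []

    # A valid GGUF file starts with the magic bytes b"GGUF".
    with open(path, "rb") as f:
        magic = f.read(4)
    if magic != b"GGUF":
        problems.append("file does not start with the GGUF magic bytes")

    # Optionally compare the size on disk with the size listed for the quant.
    # Assumption: listed sizes are decimal GB (1e9 bytes); loosen the
    # tolerance if they turn out to be binary gigabytes.
    if expected_gb is not None:
        size_gb = os.path.getsize(path) / 1e9
        if abs(size_gb - expected_gb) > tolerance_gb:
            problems.append(
                f"size is {size_gb:.2f}GB, expected about {expected_gb}GB"
            )

    return problems
```

For example, `check_gguf("DeepSeek-Coder-V2-Lite-Instruct-Q8_0.gguf", expected_gb=16.70)` should return an empty list for a complete download. A non-empty list suggests the file is worth downloading again.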


Conclusion

By understanding the principles of quantization and following this guide, you can pick a quantized build of the DeepSeek-Coder-V2-Lite-Instruct model that fits your hardware and run it with llama.cpp. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.