Octopus-V2 is a project from Nexa AI that uses an on-device language model for function calling, designed specifically for Android APIs. In this article, we'll walk through how to set it up, run inference, and troubleshoot common issues.

What is Octopus-V2?

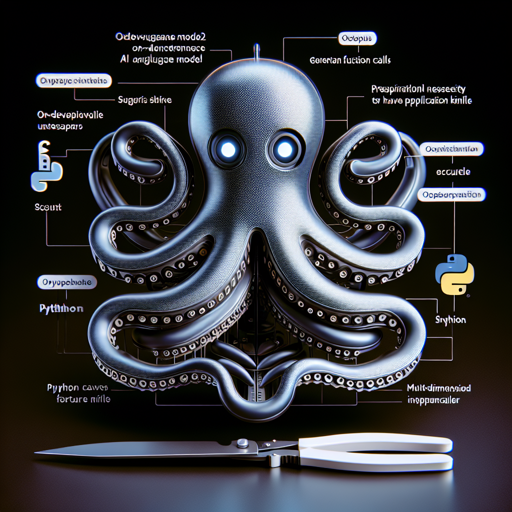

Octopus-V2-2B is an open-source language model with 2 billion parameters. It is built to generate function calls efficiently and accurately, especially for mobile applications. Think of it as a Swiss Army knife for developers: compact, yet packed with tools that make building intelligent applications simpler and faster.

Getting Started with Octopus-V2

To start using Octopus-V2, you need Python and the libraries listed below. Follow these steps to set up and run the model on a GPU:

- Install the required libraries:

```shell
# accelerate is required by device_map="auto" in the snippet below
pip install transformers torch accelerate
```

- Use the following Python code snippet to run inference:

```python
from transformers import AutoTokenizer, GemmaForCausalLM
import torch
import time


def inference(input_text):
    """Generate a function call for the prompt and measure latency in seconds."""
    start_time = time.time()
    # Tokenize the prompt and move the tensors to the model's device.
    input_ids = tokenizer(input_text, return_tensors="pt").to(model.device)
    input_length = input_ids["input_ids"].shape[1]
    # Greedy decoding; max_length caps prompt plus generated tokens.
    outputs = model.generate(
        input_ids=input_ids["input_ids"],
        max_length=1024,
        do_sample=False,
    )
    # Drop the prompt tokens so only the newly generated text is decoded.
    generated_sequence = outputs[:, input_length:].tolist()
    res = tokenizer.decode(generated_sequence[0])
    end_time = time.time()
    return {"output": res, "latency": end_time - start_time}


# Load the tokenizer and model weights from the Hugging Face Hub.
model_id = "NexaAIDev/Octopus-v2"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = GemmaForCausalLM.from_pretrained(
    model_id, torch_dtype=torch.bfloat16, device_map="auto"
)

input_text = "Take a selfie for me with front camera"
nexa_query = f"Below is the query from the users, please call the correct function and generate the parameters to call the function.\n\nQuery: {input_text} \n\nResponse:"
print("nexa model result:\n", inference(nexa_query))
```

Understanding the Code with an Analogy

Imagine Octopus-V2 as a highly trained chef in a kitchen (the device running the model). The ingredients you hand over (input_text) determine which dish (function call) gets prepared. The chef first preps the ingredients (tokenizing them into input_ids) and then cooks (inference) by following a recipe (the model.generate call). The finished dish is plated in a particular style (the decoded output), and the chef works quickly so everything is served on time (latency). With the right tools in the kitchen (libraries and frameworks), the chef can perform at their best.
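To make the mapping in the analogy concrete, here is a minimal sketch that separates prompt construction and timing from the model itself. The prompt template is copied from the inference snippet in this article. The names `build_nexa_query`, `timed_inference`, and `fake_generate` are hypothetical and introduced only for illustration, and `fake_generate` is a stand-in that returns a placeholder string so the example runs without downloading any weights.

```python
import time

# Prompt template copied from the inference snippet in this article.
NEXA_TEMPLATE = (
    "Below is the query from the users, please call the correct function "
    "and generate the parameters to call the function."
    "\n\nQuery: {query} \n\nResponse:"
)


def build_nexa_query(user_text):
    """Wrap a plain-language request in the prompt template."""
    user_text = user_text.strip()
    if not user_text:
        raise ValueError("user_text must not be empty")
    return NEXA_TEMPLATE.format(query=user_text)


def timed_inference(prompt, generate_fn):
    """Call generate_fn(prompt) and return its output with wall-clock latency."""
    start = time.perf_counter()
    output = generate_fn(prompt)
    return {"output": output, "latency": time.perf_counter() - start}


def fake_generate(prompt):
    """Hypothetical stand-in for the real model; not actual model output."""
    return "<placeholder function call>"


if __name__ == "__main__":
    query = build_nexa_query("Take a selfie for me with front camera")
    print(timed_inference(query, fake_generate))
```

To use this with the real model, one option is to pass a function that tokenizes the prompt, calls model.generate, and decodes the result in place of `fake_generate`.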

Troubleshooting Common Issues

As with any innovative technology, users may encounter issues during implementation. Here are some common troubleshooting tips:

- Installation issues: Ensure that you have the correct versions of Python and the necessary libraries. Sometimes, updating libraries can resolve conflicts.

- Model not loading: Check your internet connection and ensure that the model ID used in the code (NexaAIDev/Octopus-v2) is spelled correctly.

- Slow performance: Ensure your device meets the hardware requirements. Running the model on a high-performance GPU can vastly improve inference speed.

- Inaccurate or failed outputs: Make sure your input query is clear, includes all parameters needed for the function call, and is wrapped in the prompt format used in the code (ending with "Response:").
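The installation and performance tips above can be checked from Python before loading any weights. The sketch below reports the installed versions of the libraries this guide installs and whether PyTorch can see a CUDA GPU. The helper names (`installed_versions`, `cuda_available`, `check_environment`) are hypothetical, and the default package list is an assumption based on the install step in this guide.

```python
import sys
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def cuda_available():
    """Return True if PyTorch is installed and reports a usable CUDA device."""
    try:
        import torch
    except ImportError:
        return False
    return bool(torch.cuda.is_available())


def check_environment(packages=("transformers", "torch")):
    """Collect basic diagnostics: Python version, package versions, GPU."""
    return {
        "python": ".".join(str(part) for part in sys.version_info[:3]),
        "packages": installed_versions(packages),
        "cuda": cuda_available(),
    }


if __name__ == "__main__":
    print(check_environment())
```

A package reported as None points to an installation problem, and a False value for cuda suggests the model would fall back to the CPU, which is one likely cause of slow inference.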


Conclusion

With Octopus-V2-2B, developers have a remarkable tool to implement advanced AI functionalities in mobile applications. Following the steps outlined in this guide will help you harness its power effectively.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.