The all-MiniLM-L12-v2 model is a compact model for computing sentence similarity. Published as part of the Sentence Transformers library, it represents sentences and short paragraphs as dense vectors, so they can be compared by semantic meaning rather than by surface wording. In this blog post, we’ll walk you through how to use the model, troubleshoot common issues, and review the datasets it was trained on.

Understanding the Model

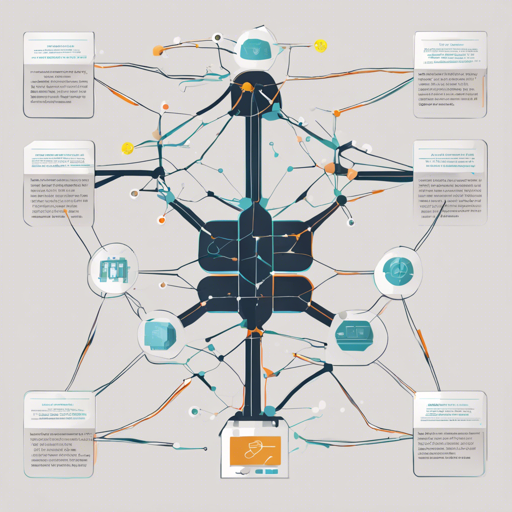

Think of the all-MiniLM-L12-v2 model as a skilled translator who can discern subtle meanings in phrases. Just as a translator converts sentences from one language to another while preserving their intended message, the model converts sentences into numerical representations (embeddings) that reflect their semantic content. Each sentence is mapped to a point in a 384-dimensional vector space, and sentences with similar meanings land close together. The similarity of two or more sentences can then be measured by comparing their vectors, typically with cosine similarity.
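
One common way to measure how close two embeddings are is cosine similarity, which compares the direction of the vectors and ignores their length. The sketch below computes it with NumPy on made-up three-dimensional vectors that stand in for real embeddings. The helper name `cosine_similarity_matrix` is ours for illustration and is not part of any library.

```python
import numpy as np


def cosine_similarity_matrix(vectors: np.ndarray) -> np.ndarray:
    """Return the pairwise cosine similarity of the row vectors."""
    # Scale every row to unit length, then take all pairwise dot products.
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    unit = vectors / norms
    return unit @ unit.T


# Hypothetical toy "embeddings"; these values are invented for the example.
toy_embeddings = np.array([
    [1.0, 0.0, 0.0],  # sentence A
    [2.0, 0.0, 0.0],  # sentence B: same direction as A, different length
    [0.0, 3.0, 0.0],  # sentence C: orthogonal to A and B
])

similarity = cosine_similarity_matrix(toy_embeddings)
print(np.round(similarity, 2))
```

Sentences A and B point in the same direction, so their similarity is 1 even though the vectors differ in length, while C is orthogonal to both and scores 0.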

Using the Model

Here’s how you can get started with the all-MiniLM-L12-v2 model:

- Step 1: Install the required libraries, Sentence Transformers and scikit-learn, for example with `pip install -U sentence-transformers scikit-learn`.

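- Step 2: Load the model into your Python environment, encode your sentences, and compute their pairwise cosine similarity:

```python
from sentence_transformers import SentenceTransformer
from sklearn.metrics.pairwise import cosine_similarity

# Load the pretrained model (downloaded on first use)
model = SentenceTransformer('all-MiniLM-L12-v2')

# Encode the sentences into one embedding vector per sentence
sentences = ["This is a sentence.", "This is another sentence."]
embeddings = model.encode(sentences)

# Pairwise cosine similarity: similarity[i][j] compares sentence i with sentence j
similarity = cosine_similarity(embeddings)
```

Troubleshooting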

If you encounter any issues while using the all-MiniLM-L12-v2 model, here are some troubleshooting tips:

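- Issue 1: Model not loading – Confirm that `sentence-transformers` is installed in the active environment and that the machine can download the model weights on first use, then restart your environment and try again.

- Issue 2: Inaccurate similarity results – Check that each input is a clean, complete sentence or short paragraph, and reword ambiguous phrasing. Keep in mind that text longer than the model's maximum sequence length (`model.max_seq_length`) is truncated before encoding.

- Issue 3: Memory errors – Encode your sentences in smaller batches so that each call fits within your available memory.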
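
For the memory issue, one possible approach is to encode the sentences a chunk at a time and stack the results. The sketch below is a minimal illustration under that assumption: `encode_in_chunks` and `fake_encode` are hypothetical names introduced here, and the stand-in encoder only exists so the example runs without downloading the model.

```python
from typing import Callable, Sequence

import numpy as np


def encode_in_chunks(
    encode_fn: Callable[[Sequence[str]], np.ndarray],
    sentences: Sequence[str],
    chunk_size: int = 256,
) -> np.ndarray:
    """Encode sentences chunk by chunk and stack the results row-wise."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    parts = []
    for start in range(0, len(sentences), chunk_size):
        chunk = list(sentences[start:start + chunk_size])
        parts.append(np.asarray(encode_fn(chunk)))
    if not parts:
        # Nothing to encode: return an empty array instead of failing in vstack.
        return np.empty((0, 0))
    return np.vstack(parts)


# Stand-in encoder (hypothetical): returns a 2-dimensional vector per sentence.
def fake_encode(batch: Sequence[str]) -> np.ndarray:
    return np.array([[float(len(s)), 1.0] for s in batch])


vectors = encode_in_chunks(fake_encode, ["a", "bb", "ccc", "dddd", "eeeee"], chunk_size=2)
print(vectors.shape)  # (5, 2)
```

With the real model, the call would look like `encode_in_chunks(model.encode, sentences)`. Note that `model.encode` also accepts a `batch_size` argument, so lowering that value may be enough on its own before reaching for a wrapper like this.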


Datasets Utilized by the Model

The model was fine-tuned on more than one billion sentence pairs drawn from a variety of datasets, which broadens the range of topics and phrasings it can compare. These datasets include:

- s2orc

- StackExchange XML (flax-sentence-embeddings/stackexchange_xml)

- MS MARCO

- GOOAQ

- Yahoo Answers Topics

- Code Search Net

- Search QA

- ELI5

- SNLI

- Multi-NLI

- WikiHow

- Natural Questions

- Trivia QA

- Flickr30k Captions

- Simple Wiki

- QQP

- SPECTER

- PAQ Pairs

- WikiAnswers
