The Erebus Neural Samir-7B model is a useful tool for anyone getting started with AI. Because it is available in several quantized formats, you can pick a version that suits your hardware, and understanding how those versions differ will help you use the model effectively and efficiently. This guide walks you through downloading and using the model, troubleshooting common issues, and getting more out of your AI development work.

Understanding the Model and Its Quantized Variants

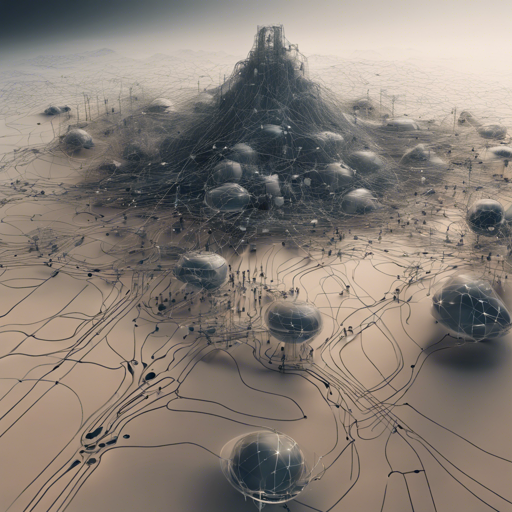

The Erebus Neural Samir-7B model is available in several quantized formats, which makes it flexible for different applications and hardware. Quantization stores the model’s weights at lower numerical precision, shrinking the file and its memory footprint at some cost in output quality. Think of it as packing suitcases (models) for a journey (your projects): each suitcase can be packed smaller or larger, more loosely or more carefully, just as each quant variant has its own file size and quality. The key terms are explained below:

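- GGUF: The binary file format used by llama.cpp and compatible tools to store a model’s weights together with its metadata. All the quantized files for this model are GGUF files, labeled by quantization type, for example Q2_K or IQ3_S; each type trades file size against quality differently.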

- IQ-quants: Quantization types whose names start with "IQ" (such as IQ3_S). They are often preferable to non-IQ quants of a similar size, mainly because they tend to preserve more quality.
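To make GGUF a little more concrete: every GGUF file begins with a small fixed-size header, so reading it is a quick way to confirm that a download really is a GGUF model and not, say, a saved error page. The sketch below uses only Python’s standard library and assumes the little-endian layout of GGUF version 2 and later (the magic bytes `GGUF`, a 32-bit version, then 64-bit tensor and metadata counts). `read_gguf_header` and `GGUFHeader` are hypothetical names for illustration, not part of any library.

```python
import struct
from typing import NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(path: str) -> GGUFHeader:
    """Read the fixed-size header at the start of a GGUF file.

    Assumes the little-endian layout of GGUF version 2 and later:
    4-byte magic b"GGUF", uint32 version, then two uint64 counts.
    """
    with open(path, "rb") as f:
        head = f.read(24)  # 4 (magic) + 4 (version) + 8 + 8 (counts)
    if len(head) < 24:
        raise ValueError(f"{path}: file too short to be a GGUF model")
    if head[:4] != b"GGUF":
        # Often an HTML error page or an incomplete download saved as .gguf.
        raise ValueError(f"{path}: missing GGUF magic bytes, got {head[:4]!r}")
    version = struct.unpack("<I", head[4:8])[0]
    if version < 2:
        # This sketch only handles the version 2+ layout with 64-bit counts.
        raise ValueError(f"{path}: GGUF version {version} is not handled here")
    tensor_count, kv_count = struct.unpack("<QQ", head[8:24])
    return GGUFHeader(version, tensor_count, kv_count)
```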

When choosing a quant, decide what you prioritize: a smaller file or higher quality. Smaller quants need less memory and load faster, but they usually lose more quality.
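Because quantization stores each weight in fewer bits, a rough file-size estimate is parameters × bits per weight ÷ 8. The sketch below applies that formula to two simple llama.cpp block formats whose layout is easy to count, Q4_0 and Q8_0 (each block holds 32 weights plus one 16-bit scale). K-quants and IQ-quants use more elaborate block structures, so for those, check the actual file sizes on the download page. The parameter count of 7e9 is a nominal assumption, and real files come out somewhat larger because of metadata and tensors kept at higher precision.

```python
def block_bits_per_weight(weights_per_block: int, bytes_per_block: int) -> float:
    """Bits per weight for a block-quantized format."""
    return bytes_per_block * 8 / weights_per_block


def estimate_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough file size in GB (10**9 bytes), ignoring metadata and
    any tensors stored at higher precision."""
    return n_params * bits_per_weight / 8 / 1e9


# Q4_0 block: 32 weights at 4 bits (16 bytes) + one fp16 scale (2 bytes).
Q4_0_BPW = block_bits_per_weight(32, 16 + 2)
# Q8_0 block: 32 weights at 8 bits (32 bytes) + one fp16 scale (2 bytes).
Q8_0_BPW = block_bits_per_weight(32, 32 + 2)

N_PARAMS = 7e9  # nominal "7B"; the exact count is model-specific (assumption)

for name, bpw in [("F16", 16.0), ("Q8_0", Q8_0_BPW), ("Q4_0", Q4_0_BPW)]:
    size = estimate_size_gb(N_PARAMS, bpw)
    print(f"{name}: {bpw:.2f} bits/weight, ~{size:.1f} GB")
```

With the nominal 7e9 parameters this prints about 14.0 GB for F16, 7.4 GB for Q8_0 (8.5 bits per weight), and 3.9 GB for Q4_0 (4.5 bits per weight), which shows why lower-bit quants are so much easier to fit on consumer hardware.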

Getting Started with Erebus Neural Samir-7B

To effectively utilize the model, follow these steps:

- Download the Model: Follow the quant links provided in the README and download the file for the variant you want, for example Q2_K or IQ3_XS.

- Refer to the Documentation: If you’re unsure how to work with GGUF files, see the detailed instructions in one of TheBloke’s READMEs.

- Experiment and Test: Once the file is downloaded, load it with a GGUF-compatible runtime such as llama.cpp and start testing the model with your own data.
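The steps above can be sketched in Python with two widely used packages: `huggingface_hub` for downloading and `llama-cpp-python` for loading GGUF files. This is one possible setup, not a workflow prescribed by the README. The helper names are hypothetical, the `<model>.<QUANT>.gguf` filename pattern is an assumption (check the actual file list), and the repository id is deliberately left for you to take from the README’s links. The third-party imports sit inside the functions so the file-picking helper works even without those packages installed.

```python
import re
from typing import Iterable, Optional


def pick_gguf(filenames: Iterable[str], quant: str) -> Optional[str]:
    """Return the first .gguf filename whose quant tag matches `quant`.

    Assumes names like "<model>.<QUANT>.gguf"; the match is exact on the
    tag, so "Q2_K" does not pick "Q2_K_S".
    """
    pattern = re.compile(rf"\.{re.escape(quant)}\.gguf$", re.IGNORECASE)
    for name in filenames:
        if pattern.search(name):
            return name
    return None


def download_quant(repo_id: str, filename: str) -> str:
    """Download one file from the Hugging Face Hub; return its local path."""
    from huggingface_hub import hf_hub_download  # pip install huggingface_hub

    return hf_hub_download(repo_id=repo_id, filename=filename)


def generate(model_path: str, prompt: str, max_tokens: int = 128) -> str:
    """Load a GGUF file with llama-cpp-python and run one completion."""
    from llama_cpp import Llama  # pip install llama-cpp-python

    llm = Llama(model_path=model_path, n_ctx=2048)
    result = llm(prompt, max_tokens=max_tokens)
    return result["choices"][0]["text"]
```

For example, you could pass the output of `huggingface_hub.list_repo_files(repo_id)` to `pick_gguf(files, "IQ3_XS")`, download the result with `download_quant`, and then call `generate(path, "Hello")` to check that the model responds.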

Troubleshooting Common Issues

While working with the Erebus Neural Samir-7B model, you might encounter some challenges. Here are some common troubleshooting steps:

- Model Loading Errors: If a GGUF file fails to load, make sure you have enough free memory for the whole file plus some overhead. A model can take more memory than expected, just as a suitcase can weigh more than you thought.

- Performance Issues: If responses are slow, try a smaller quant. IQ variants are worth considering here, since at a similar file size they generally keep more quality than other quants, although they can run slower on some hardware.

- Missing Files: If a quantized variant you need has not appeared about a week after the others, it probably wasn’t planned. You can request it by opening a Community Discussion.
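For the memory and speed issues above, a simple precaution is to compare each quant’s file size with the memory you have before loading anything. The sketch below picks the largest quant that fits with a safety margin. The function name and the 20% headroom (meant to cover the context cache and runtime buffers) are assumptions for illustration, not measured figures; the sizes you pass in should come from the download page or from `os.path.getsize` on files you already have.

```python
from typing import Dict, Optional


def largest_fitting_quant(
    sizes_bytes: Dict[str, int],
    available_bytes: int,
    headroom: float = 0.2,
) -> Optional[str]:
    """Pick the largest quant whose file fits in memory with some headroom.

    `headroom` reserves an extra fraction of each file's size for runtime
    overhead; 0.2 is an assumed rule of thumb. Returns None when even the
    smallest file does not fit.
    """
    fitting = {
        name: size
        for name, size in sizes_bytes.items()
        if size * (1 + headroom) <= available_bytes
    }
    if not fitting:
        return None
    # Among the quants that fit, prefer the biggest file (usually best quality).
    return max(fitting, key=fitting.get)
```

If it returns `None`, even the smallest quant is too large for that memory budget, and you may need a machine with more memory or a shorter context length.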

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

With the Erebus Neural Samir-7B model, you’re well equipped to tackle a variety of AI tasks. By understanding how the quantized variants differ and following the steps outlined above, you can put this model to work in your projects. Remember, don’t hesitate to reach out for help or clarification as you navigate this exciting field!
