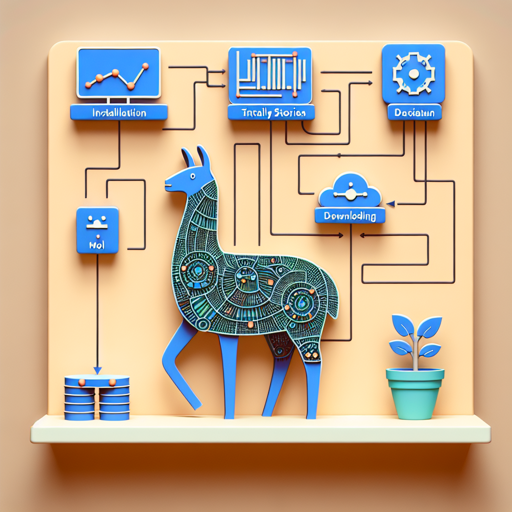

In this blog post, we look at a series of small models that use the Llama 2 architecture and were trained on the TinyStories dataset. The models are intended for use with the llama2.c project. Let’s take a step-by-step approach to working with them.

Getting Started with Llama 2

To begin your journey with the Llama 2 architecture, follow these steps:

- Installation: Install the tools you need for the task at hand. Inference with llama2.c needs only a C compiler, while training or exporting models relies on the project’s Python scripts and PyTorch.

- Download the Model: Fetch the model files from the repository that hosts them.

- Load the Model: Load the model files into your environment. In llama2.c this is handled by the project’s own code, which reads the checkpoint directly; llama2.c is a standalone project rather than a library you import.

- Test the Model: Run the model on sample prompts in the style of the TinyStories dataset and check the quality and speed of the output.

- Integration: Integrate the model into your projects, tweaking and optimizing it according to your specific requirements.
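
Before loading a downloaded checkpoint, it can help to confirm that the file looks like what you expect. The sketch below assumes the original (legacy) llama2.c export format, in which the file begins with seven little-endian 32-bit integers describing the model. Newer export versions may use a different header, so verify the layout against the version of the code you are running. `ModelConfig`, `parse_header`, and `read_header` are names made up for this example, and the sample values are illustrative only.

```python
import struct
from dataclasses import dataclass

# Assumption: the legacy llama2.c header is seven little-endian int32 values.
HEADER_FORMAT = "<7i"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 28 bytes


@dataclass
class ModelConfig:
    dim: int                 # transformer embedding dimension
    hidden_dim: int          # feed-forward hidden dimension
    n_layers: int
    n_heads: int             # number of query heads
    n_kv_heads: int          # number of key/value heads
    vocab_size: int
    seq_len: int             # maximum sequence length
    shared_classifier: bool  # True if output weights reuse the embedding


def parse_header(raw: bytes) -> ModelConfig:
    """Decode the first bytes of a checkpoint into a ModelConfig."""
    if len(raw) < HEADER_SIZE:
        raise ValueError("data is too short to contain a header")
    (dim, hidden_dim, n_layers, n_heads,
     n_kv_heads, vocab_size, seq_len) = struct.unpack(
        HEADER_FORMAT, raw[:HEADER_SIZE])
    # Assumption: in the legacy format a negative vocab_size marks a model
    # whose output classifier has its own (unshared) weights.
    shared = vocab_size > 0
    vocab_size = abs(vocab_size)
    fields = (dim, hidden_dim, n_layers, n_heads,
              n_kv_heads, vocab_size, seq_len)
    if min(fields) <= 0 or dim % n_heads != 0:
        raise ValueError("header values are implausible; wrong file format?")
    return ModelConfig(dim, hidden_dim, n_layers, n_heads,
                       n_kv_heads, vocab_size, seq_len, shared)


def read_header(path: str) -> ModelConfig:
    """Read and decode the header of a checkpoint file on disk."""
    with open(path, "rb") as f:
        return parse_header(f.read(HEADER_SIZE))


# Demonstration on an in-memory header with made-up values.
example = struct.pack(HEADER_FORMAT, 288, 768, 6, 6, 6, 32000, 256)
print(parse_header(example))
```

With a real file you would call `read_header` on the path of the checkpoint you downloaded. A `ValueError` here usually points to an incomplete download or a file in a different format.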

Code Explanation: An Analogy

Imagine you’re a chef preparing a gourmet meal. You gather your ingredients (the Llama 2 model files and the TinyStories dataset), each with its own flavor and texture, and choose cooking methods (functions) to combine them. Just as a recipe tells a chef how to put the ingredients together, the code tells the program how to load the model and turn inputs into the outputs you want.

Troubleshooting Common Issues

As with any project, you might encounter some bumps along the way. Here are a few troubleshooting ideas:

- Model Not Loading: If the model fails to load, ensure that all dependencies are correctly installed and compatible with your environment, and confirm that the model file downloaded completely and is in the format your code expects.

- Unexpected Outputs: If the outputs are not what you expect, double-check your input data, for example the prompt text and the tokenizer files you are using.

- Memory Issues: Running out of RAM is a common problem, especially with larger models or datasets. Consider using a smaller model, reducing the amount of data held in memory at once, or moving to hardware with more memory.
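
To judge whether a model will fit in RAM before you run it, a back-of-the-envelope estimate is often enough. The sketch below assumes float32 values and a standard Llama-style layer layout (attention projections without biases, a gated feed-forward block with three weight matrices, and two RMSNorm vectors per layer). It counts only the weights and the key/value cache, and ignores activation buffers and runtime overhead. The function names and the example configuration are illustrative assumptions, not figures from a specific released model.

```python
def count_parameters(dim, hidden_dim, n_layers, n_heads, n_kv_heads,
                     vocab_size, shared_classifier=True):
    """Approximate parameter count for a Llama-style transformer."""
    head_size = dim // n_heads
    kv_dim = head_size * n_kv_heads          # width of the key/value projections
    embedding = vocab_size * dim             # token embedding table
    attention = 2 * dim * dim + 2 * dim * kv_dim   # wq, wo + wk, wv
    feed_forward = 3 * dim * hidden_dim      # gate, up, and down projections
    norms = 2 * dim                          # two RMSNorm vectors per layer
    total = embedding + n_layers * (attention + feed_forward + norms)
    total += dim                             # final RMSNorm
    if not shared_classifier:
        total += vocab_size * dim            # separate output projection
    return total


def estimate_memory_bytes(dim, hidden_dim, n_layers, n_heads, n_kv_heads,
                          vocab_size, seq_len, shared_classifier=True,
                          bytes_per_value=4):
    """Rough RAM needed for the weights plus the key/value cache."""
    params = count_parameters(dim, hidden_dim, n_layers, n_heads,
                              n_kv_heads, vocab_size, shared_classifier)
    kv_dim = (dim // n_heads) * n_kv_heads
    # One key vector and one value vector per layer and per position.
    kv_cache_values = 2 * n_layers * seq_len * kv_dim
    return (params + kv_cache_values) * bytes_per_value


# Hypothetical small configuration, chosen only for illustration.
example = dict(dim=288, hidden_dim=768, n_layers=6, n_heads=6,
               n_kv_heads=6, vocab_size=32000)
params = count_parameters(**example)
total_bytes = estimate_memory_bytes(seq_len=256, **example)
print(f"parameters: {params:,}")
print(f"estimated memory: {total_bytes / 2**20:.1f} MiB")
```

If the estimate is close to or above your available RAM, a smaller model or a shorter maximum sequence length is the simplest fix.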

A Future with Llama 2

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.