MPT-7B is a decoder-style transformer developed by MosaicML and pretrained from scratch on 1 trillion tokens of English text and code. Its architecture is modified for efficient training and inference, as described below. In this article, we walk you through the steps to load and run MPT-7B with the Hugging Face transformers library, along with some troubleshooting tips for a smooth experience.

Understanding the MPT-7B Model

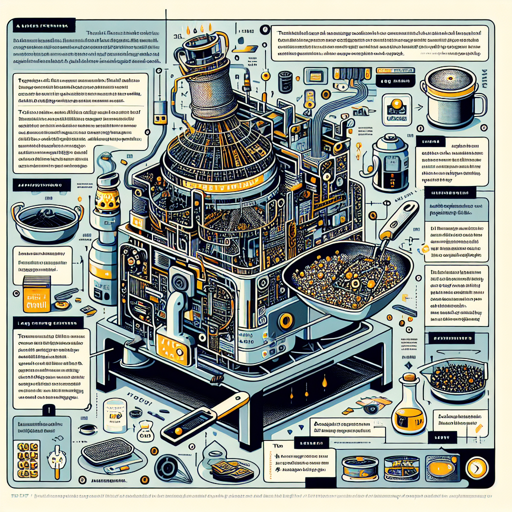

Imagine trying to cook a gourmet dish with complicated ingredients and an outdated recipe. Now picture a modern kitchen with the right cookware and a streamlined recipe: that is what MPT-7B aims to offer for large-scale language tasks. Traditional language models often struggle with scalability and long inputs, and MPT-7B addresses both with a few targeted architectural changes.

Key Features:

- Trained on 1 trillion tokens of English text and code; MosaicML reports that its quality matches LLaMA-7B.

- Uses ALiBi (Attention with Linear Biases) instead of positional embeddings, so it is not tied to a fixed maximum input length and can handle long inputs.

- Optimized for fast training and inference through FlashAttention, and can be served with NVIDIA FasterTransformer as well as standard Hugging Face pipelines.

With these modifications, MPT-7B stands out as a robust tool for both training and inference, suitable for various applications.
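To make the ALiBi idea concrete, here is a minimal pure-Python sketch of how the attention biases are computed, following the scheme described in the ALiBi paper: each head gets a slope from a geometric sequence, and the bias added to an attention score is proportional to the distance between query and key. This is an illustration only, not MosaicML's implementation; the function names alibi_slopes and alibi_bias are hypothetical, and the sketch assumes the number of heads is a power of two.

```python
import math


def alibi_slopes(n_heads: int) -> list[float]:
    """Per-head slopes, assuming n_heads is a power of two.

    The slopes form a geometric sequence: 2^(-8/n), 2^(-16/n), ..., 2^(-8).
    """
    if n_heads <= 0 or n_heads & (n_heads - 1):
        raise ValueError("this sketch assumes n_heads is a power of two")
    return [2 ** (-8 * i / n_heads) for i in range(1, n_heads + 1)]


def alibi_bias(n_heads: int, seq_len: int) -> list[list[list[float]]]:
    """Causal ALiBi bias with shape [n_heads][seq_len][seq_len].

    bias[h][q][k] = -slope_h * (q - k), written as slope_h * (k - q),
    for keys at or before the query; future keys get -inf, like a causal mask.
    """
    slopes = alibi_slopes(n_heads)
    return [
        [
            [s * (k - q) if k <= q else -math.inf for k in range(seq_len)]
            for q in range(seq_len)
        ]
        for s in slopes
    ]


if __name__ == "__main__":
    # The bias depends only on distances, so any seq_len works:
    # there is no learned position table to run past.
    bias = alibi_bias(n_heads=8, seq_len=4)
    print(alibi_slopes(8)[:2])  # [0.5, 0.25]
    print(bias[0][3])  # [-1.5, -1.0, -0.5, 0.0]
```

Because no position table is learned, the MPT-7B model card shows raising the context length at load time by setting config.max_seq_len (for example to 4096) on the AutoConfig before calling from_pretrained. How well the model extrapolates past its training length is worth testing for your own inputs.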

How to Get Started with MPT-7B

To harness the power of MPT-7B, follow these steps:

- Install Necessary Packages: Make sure the transformers library from Hugging Face and PyTorch (torch) are installed.

- Load the Model:

Here’s a snippet of code to load the MPT-7B model:

import transformers

# Load model with trust_remote_code
model = transformers.AutoModelForCausalLM.from_pretrained(
    "mosaicml/mpt-7b",
    trust_remote_code=True,
)

- Utilizing Advanced Options:

For faster inference on a GPU, you can adjust the model configuration before loading it:

import torch
import transformers

config = transformers.AutoConfig.from_pretrained("mosaicml/mpt-7b", trust_remote_code=True)
config.attn_config['attn_impl'] = 'triton'  # Triton FlashAttention kernel; needs a GPU and the triton dependency
config.init_device = 'cuda:0'  # Fast initialization on GPU

model = transformers.AutoModelForCausalLM.from_pretrained(
    "mosaicml/mpt-7b",
    config=config,
    torch_dtype=torch.bfloat16,  # load the weights in bfloat16
    trust_remote_code=True,
)

- Generate Text: Finally, use the text-generation pipeline as follows:

import torch
from transformers import pipeline

# Set up the pipeline (MPT-7B uses the EleutherAI/gpt-neox-20b tokenizer)
pipe = pipeline("text-generation", model=model, tokenizer="EleutherAI/gpt-neox-20b", device=0)

# Generate text under bfloat16 autocast
with torch.autocast("cuda", dtype=torch.bfloat16):
    print(pipe("Here is a recipe for vegan banana bread:", max_new_tokens=100, do_sample=True, use_cache=True))
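Passing torch_dtype=torch.bfloat16 when loading matters mostly for memory. As a rough back-of-the-envelope check, weight memory is the parameter count times the bytes per parameter. The sketch below assumes about 6.7 billion parameters for MPT-7B (the figure listed on its model card) and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The helper name weight_memory_gb is hypothetical.

```python
# Bytes needed to store one parameter in each dtype.
BYTES_PER_PARAM = {
    "float32": 4,
    "float16": 2,
    "bfloat16": 2,
}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    n_params = 6.7e9  # assumed MPT-7B parameter count
    for dtype in ("float32", "bfloat16"):
        print(f"{dtype}: ~{weight_memory_gb(n_params, dtype):.1f} GB")
```

Under these assumptions, bfloat16 weights take roughly 13.4 GB versus roughly 26.8 GB in float32, which is why halving the bytes per parameter is usually the first lever to pull.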

Troubleshooting Tips

If you encounter issues while using the MPT-7B model, consider the following troubleshooting ideas:

- Check Dependencies: Ensure all required libraries, like transformers and torch, are installed and updated.

- GPU Configuration: Verify that PyTorch can see your GPU (torch.cuda.is_available() should return True) and, if you use the bfloat16 settings above, that the GPU supports bfloat16.

- Memory Issues: If you run into out-of-memory errors, load the weights in bfloat16, reduce the batch size or max_new_tokens, or, when fine-tuning, enable gradient checkpointing.

- Trust Remote Code: Remember to set trust_remote_code=True when loading the model; MPT uses a custom architecture whose modeling code is downloaded from the Hugging Face Hub rather than shipped with transformers.
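The dependency and GPU checks in the list above can be scripted. The sketch below uses only the Python standard library plus an optional torch import: it reports the installed versions of transformers and torch and, if PyTorch is present, whether CUDA and bfloat16 are available. The function name check_environment is hypothetical; treat it as a starting point rather than a complete diagnostic.

```python
import importlib.metadata
import importlib.util


def check_environment() -> dict:
    """Collect package versions and basic GPU facts relevant to running MPT-7B."""
    report = {}
    for pkg in ("transformers", "torch"):
        try:
            report[pkg] = importlib.metadata.version(pkg)
        except importlib.metadata.PackageNotFoundError:
            report[pkg] = None  # package is not installed

    report["cuda_available"] = False
    report["bf16_supported"] = False
    # Import torch only if it is installed, so the check itself never crashes.
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["cuda_available"] = torch.cuda.is_available()
        if report["cuda_available"]:
            report["bf16_supported"] = torch.cuda.is_bf16_supported()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```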


Conclusion

The MPT-7B model presents an exciting opportunity for developers and researchers in the field of language processing. Its efficient architecture and support for long inputs make it a strong option for many applications. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.