If you’re diving into the world of natural language processing, you might have heard of the NorT5 language models. Recently, the official release of the NorT5 language models has generated buzz, especially with its comprehensive paper, NorBench — A Benchmark for Norwegian Language Models. Let’s explore how you can effectively use these powerful models in your own projects!

What is NorT5?

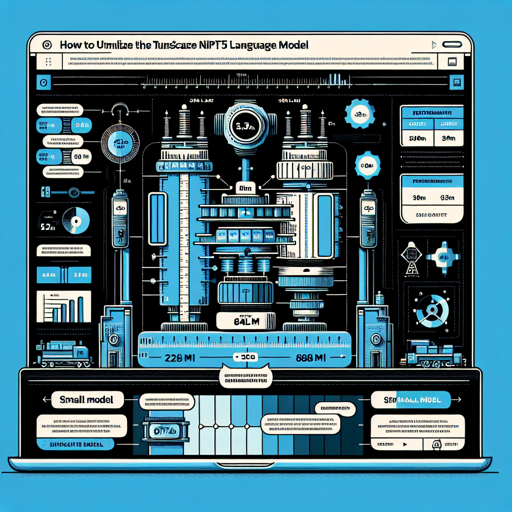

NorT5 is a family of encoder-decoder (T5-style) transformer models designed specifically for processing Norwegian text. The family comes in several sizes, from a small model with 32 million parameters to a large one with a whopping 808 million parameters. Each size offers a different balance of performance and resource consumption.
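
To make the resource side of that trade-off a bit more concrete, here is a rough back-of-the-envelope sketch. It assumes the weights are stored as 32-bit floats (4 bytes per parameter) and counts only the weights, not activations or framework overhead. The helper name weight_memory_mb is made up for this illustration.

```python
# Rough weight-memory estimate; ignores activations and framework overhead.
BYTES_PER_PARAM_FP32 = 4  # assumption: weights stored as 32-bit floats


def weight_memory_mb(num_params: int, bytes_per_param: int = BYTES_PER_PARAM_FP32) -> float:
    """Return the approximate size of the weights in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


smallest = weight_memory_mb(32_000_000)   # smallest NorT5 model
largest = weight_memory_mb(808_000_000)   # largest NorT5 model
print(f"smallest: ~{smallest:.0f} MB, largest: ~{largest:.0f} MB")
```

Under these assumptions the smallest model needs roughly 128 MB for its weights and the largest roughly 3.2 GB, so the choice of size matters on modest hardware.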

Different Sizes of NorT5 Models

- NorT5 xs: 32 million parameters
- NorT5 small: 88 million parameters
- NorT5 base: 228 million parameters
- NorT5 large: 808 million parameters

How to Use NorT5

Using the NorT5 model requires a few key steps. You can imagine it like planting a tree. First, you need to prepare the soil (the environment), then you plant the seed (load the model), and finally, you nurture it (run your operations) to see it grow (get outputs).

import torch

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained('ltg/nort5-large')

model = AutoModelForSeq2SeqLM.from_pretrained('ltg/nort5-large', trust_remote_code=True)

# MASKED LANGUAGE MODELING

sentence = 'Brukseksempel: Elektrisk oppvarming. Definisjonen på ordet oppvarming er[MASK_0].'

encoding = tokenizer(sentence)

input_tensor = torch.tensor([encoding.input_ids])

output_tensor = model.generate(input_tensor, decoder_start_token_id=7, eos_token_id=8)

result = tokenizer.decode(output_tensor.squeeze(), skip_special_tokens=True)

# This should output: 'å varme opp'

Understanding the Code

In the code snippet above, think of import torch and from transformers import … as unpacking your toolbox before you start building. You’re pulling in libraries that provide you tools to interact with the NorT5 model.

The line tokenizer = AutoTokenizer.from_pretrained('ltg/nort5-large') is akin to choosing the right gardening tools. Here the tokenizer splits your input text into tokens and maps them to the integer IDs the model expects, much like how garden tools help you prepare soil for planting.

Once you feed the model input (the data), it produces an output based on its learning, just like a tree yielding fruit based on how well it has been nurtured. If all goes well, your expected output for the sample sentence should be: ‘å varme opp’.
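
If you want to reuse the masked language modeling steps, they can be wrapped in a small helper. Treat this as a sketch rather than the official API: fill_mask is a hypothetical name, it assumes the tokenizer accepts return_tensors="pt" like other Hugging Face tokenizers, and the token IDs 7 and 8 are simply copied from the snippet above.

```python
def fill_mask(model, tokenizer, sentence):
    """Generate the text the model predicts for [MASK_0] in `sentence`.

    `model` and `tokenizer` are the objects loaded with from_pretrained above.
    """
    # Ask the tokenizer for PyTorch tensors directly (a batch of one sequence).
    encoding = tokenizer(sentence, return_tensors="pt")
    output = model.generate(
        encoding.input_ids,
        decoder_start_token_id=7,  # value taken from the original example
        eos_token_id=8,            # value taken from the original example
    )
    # generate() returns a batch; decode its only sequence.
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

Calling fill_mask(model, tokenizer, sentence) with the sample sentence should then give the same result as running the steps one by one.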

Example Usage for Prefix Language Modeling

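Now let’s say you also want to explore prefix language modeling, where the model continues a text prefix that you provide. This typically requires a model that has been finetuned for that objective: you can finetune NorT5 yourself or, according to the model card, use one of the nort5-{size}-lm variants. Imagine this as training your tree to yield a specific type of fruit!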

# PREFIX LANGUAGE MODELING

sentence = 'Brukseksempel: Elektrisk oppvarming. Definisjonen på ordet oppvarming er (Wikipedia)'

encoding = tokenizer(sentence)

input_tensor = torch.tensor([encoding.input_ids])

output_tensor = model.generate(input_tensor, max_new_tokens=50, num_beams=4, do_sample=False)

result = tokenizer.decode(output_tensor.squeeze())

# The output should start with: '[BOS]ˈoppvarming ...'
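
Because decode is called here without skip_special_tokens, the result keeps the beginning-of-sequence marker. If you only want the generated text, one option is to strip it yourself. The sketch below assumes the marker appears literally as '[BOS]' at the start of the string, as in the expected output above; strip_bos is a made-up helper name.

```python
BOS_MARKER = "[BOS]"  # literal marker seen in the expected output above


def strip_bos(text: str) -> str:
    """Remove a leading '[BOS]' marker from decoded text, if present."""
    if text.startswith(BOS_MARKER):
        return text[len(BOS_MARKER):]
    return text


print(strip_bos("[BOS]ˈoppvarming"))  # prints: ˈoppvarming
```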

Troubleshooting Tips

If you encounter any issues while using NorT5, consider the following troubleshooting steps:

- Ensure your environment meets the necessary dependencies, especially the transformers library.

- Check that you have the correct model name and available configurations.

- If you’re experiencing slow performance, consider using smaller models like NorT5 xs or small.

- Make sure you are running with the correct arguments set, such as trust_remote_code=True.
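
For the first tip, a quick way to see whether the required packages are present is to ask Python whether it can find them. This is a minimal sketch using only the standard library; missing_packages is a hypothetical helper, and it checks only that the packages exist, not that their versions are compatible.

```python
import importlib.util


def missing_packages(names):
    """Return the packages from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["torch", "transformers"])
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("torch and transformers are installed.")
```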

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.