ProSparse-LLaMA-2-13B applies ProSparse, a method for increasing intrinsic activation sparsity in large language models (LLMs), to LLaMA-2-13B. This guide explains how the method works, how the model is evaluated, and how to troubleshoot issues you may encounter. Let’s dive in!

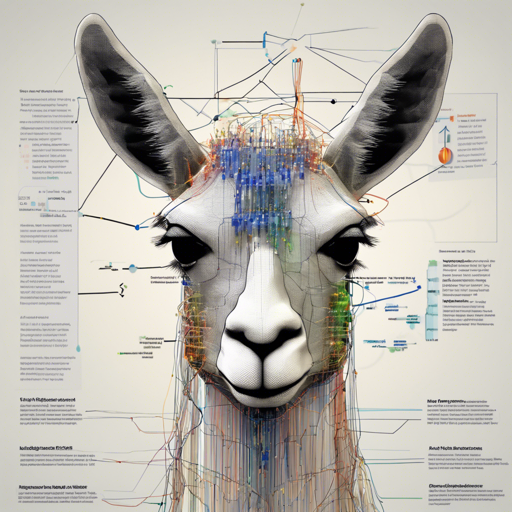

Understanding the ProSparse-LLaMA-2-13B Model

The ProSparse approach uses activation sparsity to accelerate inference in large language models. Imagine a busy highway where a few lanes carry nearly all the traffic while the rest sit almost empty. ProSparse pushes the weakly contributing “lanes” (activation outputs that add little to the result) to exactly zero, so the computation tied to them can be skipped at inference time. It reports an average sparsity of up to 89.32% on LLaMA2 models while keeping performance comparable to the original models.
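
To make the sparsity figure concrete, here is a minimal sketch of how activation sparsity can be measured: the share of activation outputs that are exactly zero. The helper names and the sample values are illustrative assumptions, not part of the ProSparse code base.

```python
def relu(x: float) -> float:
    """Standard ReLU: negative inputs become zero."""
    return x if x > 0.0 else 0.0


def activation_sparsity(activations: list[float]) -> float:
    """Fraction of activation values that are exactly zero."""
    if not activations:
        raise ValueError("activations must be non-empty")
    zeros = sum(1 for a in activations if a == 0.0)
    return zeros / len(activations)


# Hypothetical pre-activation values for eight FFN neurons.
pre_activations = [-1.2, 0.4, -0.3, -2.0, 0.0, 1.5, -0.7, -0.1]
hidden = [relu(x) for x in pre_activations]

sparsity = activation_sparsity(hidden)
print(f"sparsity = {sparsity:.2%}")  # prints "sparsity = 75.00%"
```

Six of the eight values are zero after ReLU, so only two neurons would need to take part in the following matrix multiplication.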

Getting Started with ProSparse-LLaMA-2-13B

ProSparse produces the model in three training steps:

- Activation Function Substitution: Replace the original activation function in the feed-forward networks (FFNs) with ReLU and continue training.

- Progressive Sparsity Regularization: Jointly optimize the prediction loss and an L1 regularization loss, increasing the regularization strength gradually so that sparsity rises progressively.

- Activation Threshold Shifting: Replace ReLU with FATReLU, a ReLU variant with a positive threshold, to prune weakly contributing elements and further boost the model’s sparsity.
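
The last two steps can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' training code: the stage boundaries, regularization factors, threshold value, and prediction loss below are all made-up numbers, and the step-wise schedule is only one simple way to increase the factor over time.

```python
def fat_relu(x: float, threshold: float) -> float:
    """ReLU with a positive threshold: values below it are set to zero."""
    return x if x >= threshold else 0.0


def l1_penalty(activations: list[float], factor: float) -> float:
    """L1 regularization term: factor times the mean absolute activation."""
    return factor * sum(abs(a) for a in activations) / len(activations)


def staged_factor(step: int, stage_ends: list[int], stage_factors: list[float]) -> float:
    """Return the regularization factor of the stage that contains `step`.

    Hypothetical step-wise schedule: the factor grows from stage to stage.
    """
    for end, factor in zip(stage_ends, stage_factors):
        if step < end:
            return factor
    return stage_factors[-1]


# Progressive sparsity regularization: total loss = prediction loss + L1 term.
activations = [0.0, 0.02, 0.9, 0.0, 0.005, 1.4]
prediction_loss = 2.0  # placeholder value for illustration
for step in (0, 150, 400):
    factor = staged_factor(step, stage_ends=[100, 300], stage_factors=[1e-5, 5e-5, 1e-4])
    total = prediction_loss + l1_penalty(activations, factor)
    print(f"step {step}: factor={factor:g}, total loss={total:.6f}")

# Activation threshold shifting: small positive activations are pruned as well.
shifted = [fat_relu(a, threshold=0.01) for a in activations]
print(shifted)  # prints [0.0, 0.02, 0.9, 0.0, 0.0, 1.4]
```

In this toy input, plain ReLU would keep the value 0.005, while the thresholded variant zeroes it, which is how the final step raises sparsity beyond what ReLU alone provides.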

Training was carried out on 32 A100 GPUs across these stages.

Evaluation Metrics

The model is evaluated on several benchmark categories, including:

- Code Generation Performance (e.g., HumanEval)

- Commonsense Reasoning (e.g., HellaSwag)

- Reading Comprehension Accuracy (e.g., BoolQ)

- Other Benchmarks (e.g., GSM8K)

Simplified Steps for Fine-Tuning and Evaluation

Follow these steps to run the evaluation accurately:

- Adapt your evaluation framework to the specifics of ProSparse-LLaMA, keeping in mind any necessary adjustments such as the handling of cls tokens.

- Execute evaluation with the UltraEval framework for reliable results, as other frameworks may lead to discrepancies.
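
If you do adapt another framework, one adjustment mentioned above concerns cls tokens. The sketch below assumes that the adjustment amounts to making sure every tokenized prompt starts with that token; both this interpretation and the token id `1` are assumptions, so check your tokenizer and framework before relying on them. The helper name `ensure_leading_token` is hypothetical.

```python
def ensure_leading_token(token_ids: list[int], leading_id: int) -> list[int]:
    """Return a copy of token_ids that starts with leading_id exactly once."""
    if token_ids and token_ids[0] == leading_id:
        return list(token_ids)
    return [leading_id] + list(token_ids)


CLS_ID = 1  # assumed id of the leading token; verify against your tokenizer

prompt_ids = [15043, 3186]  # hypothetical token ids for a prompt
print(ensure_leading_token(prompt_ids, CLS_ID))  # prints [1, 15043, 3186]
```

Applying the helper to a sequence that already starts with the token leaves it unchanged, so it is safe to call regardless of the framework's defaults.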

Troubleshooting Common Issues

Here are some common issues you may encounter along with their solutions:

- Inconsistent Results: These can arise from using an incompatible evaluation framework. Use UltraEval for consistent performance metrics.

- Activation Predictor Errors: Ensure activation predictors are accurately implemented, as they play a vital role in inference speed and accuracy.

- General Setup Challenges: Double-check your environment settings and necessary components such as environment variables and file replacements.
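
One way to sanity-check an activation predictor is to compare its predictions with the activations the model actually produces. The sketch below is a generic check, not the project's tooling: it computes the share of truly active neurons that the predictor marked active (recall) and the sparsity implied by the predictions. All names and sample values are illustrative assumptions.

```python
def activation_recall(actual: list[float], predicted_active: list[bool]) -> float:
    """Share of truly active (non-zero) neurons that were predicted active."""
    if len(actual) != len(predicted_active):
        raise ValueError("inputs must have the same length")
    active = [i for i, a in enumerate(actual) if a != 0.0]
    if not active:
        return 1.0  # nothing to miss
    hits = sum(1 for i in active if predicted_active[i])
    return hits / len(active)


def predicted_sparsity(predicted_active: list[bool]) -> float:
    """Share of neurons the predictor expects to be inactive."""
    if not predicted_active:
        raise ValueError("predicted_active must be non-empty")
    return 1.0 - sum(predicted_active) / len(predicted_active)


# Hypothetical activations of eight neurons and the predictor's guesses.
actual = [0.0, 0.7, 0.0, 1.2, 0.0, 0.3, 0.0, 0.0]
predicted = [False, True, False, True, True, False, False, False]

print(f"recall = {activation_recall(actual, predicted):.3f}")
print(f"predicted sparsity = {predicted_sparsity(predicted):.3f}")
```

Low recall means the predictor skips neurons that matter, which tends to hurt accuracy; low predicted sparsity means little computation is skipped, which limits the speedup.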

Final Thoughts

By adopting the ProSparse methodology in LLaMA-2-13B, you’re not just following the trend; you’re setting the stage for faster, more efficient language models. As you continue exploring this technology, remember that with the right approaches and troubleshooting strategies, you’ll pave your way to success.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.