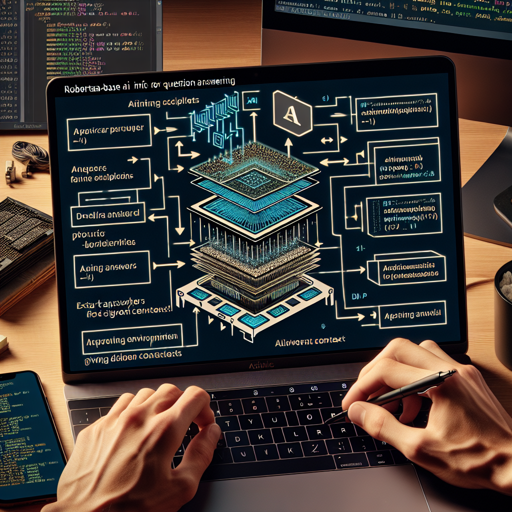

If you’re diving into the realm of natural language processing (NLP) and looking to harness the capabilities of the roberta-base model for Question Answering, you’re in the right place! This guide will walk you through the steps to effectively implement this model and troubleshoot any potential hiccups along the way.

Understanding the roberta-base Model

The deepset/roberta-base-squad2 checkpoint is the roberta-base language model fine-tuned on SQuAD 2.0, a dataset of question-answer pairs that also contains unanswerable questions. It performs extractive question answering: instead of generating new text, it selects the span of the given context that best answers the question, and it can also signal that no such span exists.
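To make "extractive" more concrete: a QA head of this kind outputs a start score and an end score for every token, and the answer is the span whose combined score is highest. The sketch below is a simplified, hypothetical version of that decoding step in plain Python. It assumes token 0 is the special start token whose score stands for "no answer" (the SQuAD 2.0 convention), and it leaves out details such as masking question tokens and mapping token positions back to characters.

```python
from typing import List, Optional, Tuple


def best_span(
    start_logits: List[float],
    end_logits: List[float],
    max_answer_len: int = 15,
) -> Tuple[Optional[Tuple[int, int]], float]:
    """Return the best (start, end) token span, or None if the null answer wins.

    Simplified sketch: index 0 is assumed to be the special start token,
    whose start + end score represents "no answer in this context".
    """
    null_score = start_logits[0] + end_logits[0]
    best: Optional[Tuple[int, int]] = None
    best_score = float("-inf")
    n = len(start_logits)
    for i in range(1, n):
        # Only consider spans that end at or after the start and are not too long.
        for j in range(i, min(n, i + max_answer_len)):
            score = start_logits[i] + end_logits[j]
            if score > best_score:
                best, best_score = (i, j), score
    # SQuAD 2.0 style: prefer "no answer" when it outscores every real span.
    if best is None or null_score > best_score:
        return None, null_score
    return best, best_score
```

In a real setup the logits would come from the model's `start_logits` and `end_logits` outputs, and a fast tokenizer called with `return_offsets_mapping=True` would map the winning token span back to character positions in the context.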

Setting Up Your Environment

- Install the necessary libraries:

```shell
pip install transformers torch
pip install haystack-ai  # optional: only needed if you build Haystack pipelines
```

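- Ensure your Python version is supported by the transformers release you install (early releases accepted 3.6; current releases need a newer interpreter, so check the installation docs).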

- Plan your hardware: the model was trained on 4x Tesla V100 GPUs, but inference needs far less. A single GPU, or even a CPU for occasional queries, is enough.
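Before loading the model, a quick sanity check can confirm the setup above. This is a hypothetical helper using only the standard library plus an optional PyTorch CUDA query; the default minimum Python version is a placeholder assumption, so adjust it to whatever your transformers release requires.

```python
import importlib.util
import sys
from typing import Dict, Sequence, Tuple


def check_environment(
    min_python: Tuple[int, int] = (3, 9),  # placeholder: check the transformers docs
    packages: Sequence[str] = ("transformers", "torch"),
) -> Dict[str, bool]:
    """Report whether Python is recent enough and which packages are importable."""
    report = {"python_ok": sys.version_info[:2] >= tuple(min_python)}
    for name in packages:
        # find_spec returns None when a top-level package is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    # Only query CUDA when PyTorch was checked and is installed.
    report["cuda"] = False
    if report.get("torch"):
        import torch

        report["cuda"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    print(check_environment())
```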

How to Implement the Model

Let’s break down the implementation process with some easy steps. Think of this task like building your dream sandwich using a special recipe.

Step 1: Building Your Sandwich (Loading the Model)

To get the perfect sandwich started, you need to collect the right ingredients. In this case, that means loading the model:

```python
from transformers import pipeline

model_name = "deepset/roberta-base-squad2"

# Load the question-answering pipeline; the tokenizer comes from the same checkpoint
nlp = pipeline('question-answering', model=model_name, tokenizer=model_name)
```

Step 2: Preparing the Ingredients (Context and Questions)

Just like preparing fresh veggies and meats for your sandwich, you need to prepare your context and question:

```python
QA_input = {
    'question': 'Why is model conversion important?',
    'context': 'The option to convert models between FARM and transformers gives freedom to the user and let people easily switch between frameworks.'
}
```

Step 3: Serving Your Sandwich (Getting Predictions)

Now that your sandwich is prepped, it’s time to take a bite—get your predictions!

```python
res = nlp(QA_input)

# res is a dict with 'score', 'start', 'end' and 'answer' keys
print(res)
```

Performance Metrics

Once your sandwich is made, you'll want to know how it turned out. Likewise, a question-answering model is evaluated with metrics such as:

- Exact Match: the percentage of predicted answers that match the ground truth exactly, after normalizing case, punctuation, and articles.

- F1 Score: the harmonic mean of precision and recall over the words shared by the predicted and ground-truth answers, which gives partial credit when the two overlap.
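Both metrics are easy to compute yourself. The sketch below follows the normalization used by the official SQuAD evaluation script (lowercase, drop punctuation and the articles "a", "an", "the", collapse whitespace); the function names are hypothetical, and an empty string stands for an "unanswerable" answer as in SQuAD 2.0.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, strip punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, ground_truth: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(ground_truth))


def f1_score(prediction: str, ground_truth: str) -> float:
    """Token-level F1 between a predicted and a ground-truth answer."""
    pred_tokens = normalize_answer(prediction).split()
    truth_tokens = normalize_answer(ground_truth).split()
    # Empty answer = "unanswerable": full credit only if both are empty.
    if not pred_tokens or not truth_tokens:
        return float(pred_tokens == truth_tokens)
    common = Counter(pred_tokens) & Counter(truth_tokens)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(pred_tokens)
    recall = num_same / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)
```

For example, `f1_score("freedom to the user", "gives freedom to the user")` gives partial credit (precision 1.0, recall 0.75), whereas `exact_match` scores the same pair as 0.0. Dataset-level scores are the averages of these per-question values.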

Troubleshooting

If you run into issues, here are a few tips to consider:

- Make sure you have enough memory and compute. The model has roughly 125 million parameters: a CPU handles occasional queries, while a GPU helps with large batches or long documents.

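- If you encounter errors when loading the model, double-check the model name and configuration, and make sure you can reach the Hugging Face Hub (or already have the model cached locally). The name must be specified exactly as "deepset/roberta-base-squad2".

- If the predictions are not what you expect, review your context input. The model is extractive, so the answer must appear verbatim in the context; a vague or unrelated context leads to wrong answers or low confidence scores.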
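A small check on the pipeline's output can catch the last two problems automatically. This is a hypothetical helper: it relies on the `score`, `start`, `end` and `answer` keys the question-answering pipeline returns, treats an empty answer as "unanswerable" (what the pipeline returns when called with `handle_impossible_answer=True` and the null answer wins), and uses an arbitrary `min_score` threshold you should tune for your own data.

```python
from typing import Any, Dict, Optional


def check_prediction(
    res: Dict[str, Any], context: str, min_score: float = 0.1
) -> Optional[str]:
    """Return a warning for a suspicious QA pipeline result, or None if it looks fine.

    min_score is an arbitrary, hypothetical threshold.
    """
    answer = res.get("answer", "")
    if answer == "":
        return "no answer: the model judged the question unanswerable from this context"
    # Extractive answers should be exactly the context slice [start:end].
    if context[res["start"]:res["end"]] != answer:
        return "answer does not match the context span; was a different context passed?"
    if res["score"] < min_score:
        return f"low confidence ({res['score']:.2f}); consider a clearer or more relevant context"
    return None
```

With the objects from the steps above, usage might look like `check_prediction(nlp(QA_input, handle_impossible_answer=True), QA_input['context'])`.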

For more insights, updates, or to collaborate on AI development projects, stay connected with **fxis.ai**.

Using a Distilled Model

If inference speed is crucial, consider a distilled version. deepset/tinyroberta-squad2 runs at roughly twice the speed of roberta-base-squad2 while maintaining comparable prediction quality.
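Because the actual speedup depends on your hardware, batch size, and input length, it is worth measuring on your own setup. The helper below is a hypothetical, minimal timing sketch: it runs a few warm-up calls and then returns the median wall-clock time per call.

```python
import time
from statistics import median
from typing import Any, Callable


def time_calls(
    fn: Callable[[Any], Any], example: Any, repeats: int = 20, warmup: int = 2
) -> float:
    """Return the median wall-clock seconds per call of fn(example)."""
    # Warm-up calls absorb one-off costs such as lazy initialization.
    for _ in range(warmup):
        fn(example)
    timings = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        fn(example)
        timings.append(time.perf_counter() - t0)
    return median(timings)
```

To compare the two models, build one pipeline per checkpoint with `pipeline('question-answering', model=...)`, call `time_calls` on each with the same `QA_input`, and divide the two medians. The median is used instead of the mean so that a single slow call does not skew the result.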

Conclusion

And there you have it! With these simple steps, you’re well-equipped to implement the roberta-base model for question answering. Whether you’re refining your application or exploring NLP capabilities, this powerful tool will assist you in providing meaningful responses to queries.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.