Welcome to UPSNet! This guide walks you through the steps needed to set up and use UPSNet for panoptic segmentation tasks. We aim to keep the process as straightforward as possible, whether you’re a veteran in machine learning or just getting started.

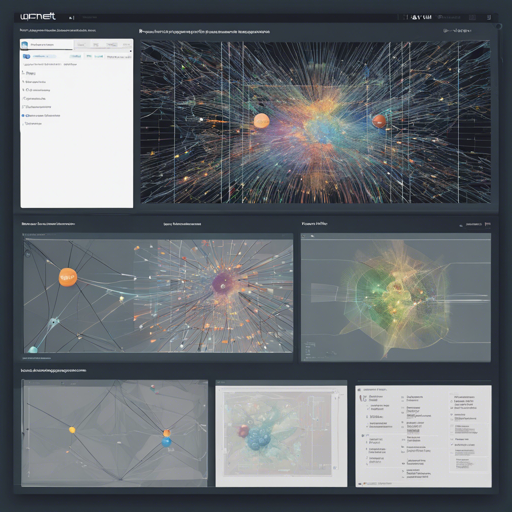

Introduction to UPSNet

UPSNet (Unified Panoptic Segmentation Network), introduced in a CVPR 2019 paper, is a neural network designed for panoptic segmentation, a task that combines semantic segmentation (assigning a class label to every pixel) with instance segmentation (detecting and delineating individual objects). As a result, the architecture can both categorize every region of an image and distinguish between individual instances of the same object class.

Requirements for UPSNet

Before you dive in, ensure you have the appropriate setup:

Software Requirements

- Python 3.6 (also compatible with 2.7)

- PyTorch 0.4.1 or later (1.0 recommended)

- Anaconda3, which includes many common packages

Hardware Requirements

- 4 to 16 GPUs, each with at least 11 GB of memory, for training
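These requirements can be checked before you install anything. The script below is a minimal sketch in POSIX sh, not part of the UPSNet repository: it assumes that python on your PATH is the interpreter you will use for UPSNet and that nvidia-smi is installed, and the helper names (version_ge, check_torch, check_gpus) are hypothetical. The 11000 MiB threshold is also an assumption, chosen because nvidia-smi can report an 11 GB card as slightly less than 11 × 1024 MiB.

```sh
#!/bin/sh
# Hypothetical pre-flight check for the UPSNet requirements listed above.
# Thresholds follow the guide: PyTorch >= 0.4.1 and at least 4 GPUs with ~11 GB each.

MIN_TORCH="0.4.1"
MIN_GPU_MEM_MIB=11000   # assumption: lets 11 GB cards pass despite reported rounding
MIN_GPUS=4

# version_ge A B: succeed if dotted version A >= B, comparing fields numerically.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, ".")
    nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      if (x[i] + 0 > y[i] + 0) exit 0
      if (x[i] + 0 < y[i] + 0) exit 1
    }
    exit 0
  }'
}

# check_torch: report the installed PyTorch version and compare it with MIN_TORCH.
check_torch() {
  if ! torch_version=$(python -c 'import torch; print(torch.__version__)' 2>/dev/null); then
    echo "WARN: PyTorch is not importable from 'python'"
    return 1
  fi
  torch_version=${torch_version%%+*}   # drop a local build suffix such as +cu90
  if version_ge "$torch_version" "$MIN_TORCH"; then
    echo "OK: PyTorch $torch_version"
  else
    echo "WARN: PyTorch $torch_version is older than $MIN_TORCH"
    return 1
  fi
}

# check_gpus: count GPUs with enough memory; succeed if there are at least MIN_GPUS.
check_gpus() {
  if ! command -v nvidia-smi >/dev/null 2>&1; then
    echo "WARN: nvidia-smi not found; cannot inspect GPUs"
    return 1
  fi
  nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits |
    awk -v min="$MIN_GPU_MEM_MIB" -v need="$MIN_GPUS" '
      { n++; if ($1 + 0 >= min) big++ }
      END {
        printf "GPUs found: %d, with >= %d MiB: %d\n", n, min, big
        exit (big >= need ? 0 : 1)
      }'
}

# Report-only: a failed check prints a hint instead of stopping the script.
check_torch || echo "-> see Software Requirements"
check_gpus || echo "-> see Hardware Requirements"
```

Running the script prints one line per check; a warning points you back to the matching requirement rather than aborting, so you see every problem in a single pass.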

Installation Steps

Follow these steps to get your UPSNet environment up and running:

- Clone the UPSNet repository to your local machine.

- Run init.sh to build the essential C++/CUDA modules and download the pretrained model.

- For Cityscapes:

  - Ensure the Cityscapes dataset is downloaded and the TrainIds label images are available at $CITYSCAPES_ROOT.

  - Create a soft link under the UPSNet root directory by running:

    ```
    ln -s $CITYSCAPES_ROOT data/cityscapes
    ```

  - Run init_cityscapes.sh to prepare the Cityscapes dataset for UPSNet.

- For COCO:

  - Ensure the COCO dataset, including annotations and images, is downloaded to $COCO_ROOT.

  - Create a soft link under the UPSNet root directory by running:

    ```
    ln -s $COCO_ROOT data/coco
    ```

  - Run init_coco.sh to prepare the COCO dataset for UPSNet.

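The dataset steps above can be combined into one script. This is a sketch under stated assumptions: it runs from the UPSNet root, expects CITYSCAPES_ROOT and/or COCO_ROOT to be exported, and uses hypothetical helpers (link_dataset, prepare_datasets) that refuse to overwrite an existing data/cityscapes or data/coco that is not already a soft link. Invoking the init scripts with bash is also an assumption about how they are meant to be run.

```sh
#!/bin/sh
# Sketch of the Cityscapes/COCO preparation steps; run it from the UPSNet root.
# link_dataset and prepare_datasets are hypothetical helpers, not UPSNet scripts.

# link_dataset SRC DEST: create the soft link DEST -> SRC after basic sanity checks.
link_dataset() {
  if [ ! -d "$1" ]; then
    echo "ERROR: dataset directory '$1' does not exist" >&2
    return 1
  fi
  if [ -L "$2" ]; then
    echo "$2 already links to $(readlink "$2"); leaving it unchanged"
    return 0
  fi
  if [ -e "$2" ]; then
    echo "ERROR: $2 exists and is not a soft link" >&2
    return 1
  fi
  mkdir -p "$(dirname "$2")"   # make sure data/ exists before linking into it
  ln -s "$1" "$2"
  echo "linked $2 -> $1"
}

# prepare_datasets: link each dataset whose root variable is set, then run its init script.
prepare_datasets() {
  if [ -n "${CITYSCAPES_ROOT:-}" ]; then
    link_dataset "$CITYSCAPES_ROOT" data/cityscapes && bash init_cityscapes.sh
  fi
  if [ -n "${COCO_ROOT:-}" ]; then
    link_dataset "$COCO_ROOT" data/coco && bash init_coco.sh
  fi
}

prepare_datasets
```

For example, export CITYSCAPES_ROOT=/path/to/cityscapes and run the script from the UPSNet root; any dataset whose variable is unset is skipped, and rerunning the script leaves existing links untouched.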

Training and Testing UPSNet

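Once your setup is complete, you can start training UPSNet. Here $EXP is the name of an experiment configuration file in upsnet/experiments (for example, upsnet_resnet50_cityscapes_16gpu):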

```
python upsnet/upsnet_end2end_train.py --cfg upsnet/experiments/$EXP.yaml
```

To test the trained model:

```
python upsnet/upsnet_end2end_test.py --cfg upsnet/experiments/$EXP.yaml
```

Using Model Weights

The model weights used to reproduce results in the UPSNet paper are available. Follow these steps:

- Run download_weights.sh to get the trained model weights for Cityscapes and COCO.

- For testing on Cityscapes:

  ```
  python upsnet/upsnet_end2end_test.py --cfg upsnet/experiments/upsnet_resnet50_cityscapes_16gpu.yaml --weight_path ./model/upsnet_resnet_50_cityscapes_12000.pth
  python upsnet/upsnet_end2end_test.py --cfg upsnet/experiments/upsnet_resnet101_cityscapes_w_coco_16gpu.yaml --weight_path ./model/upsnet_resnet_101_cityscapes_w_coco_3000.pth
  ```

- For testing on COCO:

  ```
  python upsnet/upsnet_end2end_test.py --cfg upsnet/experiments/upsnet_resnet50_coco_16gpu.yaml --weight_path model/upsnet_resnet_50_coco_90000.pth
  python upsnet/upsnet_end2end_test.py --cfg upsnet/experiments/upsnet_resnet101_dcn_coco_3x_16gpu.yaml --weight_path model/upsnet_resnet_101_dcn_coco_270000.pth
  ```
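Because each released model pairs an experiment configuration with a weight file, evaluating all of them is a simple loop. The sketch below assumes it runs from the UPSNet root and that download_weights.sh places the files under model/, as the COCO commands above indicate; the helper functions are hypothetical. The experiment names are the same values you would pass as $EXP in the training and testing commands.

```sh
#!/bin/sh
# Sketch: evaluate the released UPSNet weights in one pass from the UPSNet root.
# Set DRY_RUN=1 to print the commands instead of running them.
# Helper names are hypothetical; the weight location model/ is an assumption.

# print_train_cmd EXP: the training command for a given $EXP.
print_train_cmd() {
  echo "python upsnet/upsnet_end2end_train.py --cfg upsnet/experiments/$1.yaml"
}

# print_test_cmd EXP WEIGHT: the test command for an experiment/weight pair.
print_test_cmd() {
  echo "python upsnet/upsnet_end2end_test.py --cfg upsnet/experiments/$1.yaml --weight_path $2"
}

# evaluate_all: test every released model whose weight file is present.
evaluate_all() {
  while read -r exp weight; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      print_test_cmd "$exp" "$weight"
    elif [ ! -f "$weight" ]; then
      echo "skip $exp: $weight not found (run download_weights.sh first)" >&2
    else
      # </dev/null keeps python from consuming the list fed to the loop
      python upsnet/upsnet_end2end_test.py --cfg "upsnet/experiments/$exp.yaml" \
        --weight_path "$weight" </dev/null
    fi
  done <<EOF
upsnet_resnet50_cityscapes_16gpu model/upsnet_resnet_50_cityscapes_12000.pth
upsnet_resnet101_cityscapes_w_coco_16gpu model/upsnet_resnet_101_cityscapes_w_coco_3000.pth
upsnet_resnet50_coco_16gpu model/upsnet_resnet_50_coco_90000.pth
upsnet_resnet101_dcn_coco_3x_16gpu model/upsnet_resnet_101_dcn_coco_270000.pth
EOF
}

evaluate_all
```

Running it once with DRY_RUN=1 lets you review the four commands first; models whose weight file is missing are skipped with a message instead of stopping the loop.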

Troubleshooting Common Issues

Even the best systems encounter bumps in the road. Here are some common issues and how to resolve them:

- Error: Out of Memory – If your GPU runs out of memory, try reducing the batch size in your configuration file.

- Error: CUDA Not Found – Ensure that the installed CUDA version is compatible with your PyTorch version. Using Anaconda can simplify package management.

- Error: Dataset Not Found – Double-check that $CITYSCAPES_ROOT and $COCO_ROOT point to your datasets and that the soft links data/cityscapes and data/coco exist under the UPSNet root directory.
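A quick diagnostic can narrow down which of these errors you are hitting. The script below is an illustrative sketch, not an UPSNet tool: it reports the CUDA version PyTorch was built with (torch.version.cuda) alongside the local nvcc, checks that data/cityscapes and data/coco are soft links whose targets exist, and, if EXP is set, lists the lines of the experiment config that mention "batch". That last check searches broadly because the exact batch size key in UPSNet's YAML files is not covered in this guide; the helper names are hypothetical.

```sh
#!/bin/sh
# Hypothetical read-only diagnostic for the errors above; run it from the UPSNet root.

# check_link PATH: succeed if PATH is a soft link whose target exists (Dataset Not Found).
check_link() {
  if [ ! -L "$1" ]; then
    echo "MISSING: $1 is not a soft link"
    return 1
  elif [ ! -e "$1" ]; then
    echo "BROKEN: $1 -> $(readlink "$1") (target does not exist)"
    return 1
  fi
  echo "OK: $1 -> $(readlink "$1")"
}

# check_cuda: show PyTorch's CUDA build and the local CUDA toolkit (CUDA Not Found).
check_cuda() {
  python -c 'import torch; print("PyTorch %s, built with CUDA %s, CUDA available: %s" % (torch.__version__, torch.version.cuda, torch.cuda.is_available()))' \
    || echo "WARN: could not import torch with 'python'"
  if command -v nvcc >/dev/null 2>&1; then
    nvcc --version
  else
    echo "WARN: nvcc not on PATH; building the C++/CUDA modules needs the CUDA toolkit"
  fi
}

# show_batch_settings CFG: list config lines mentioning "batch" (Out of Memory).
show_batch_settings() {
  grep -n -i 'batch' "$1" || echo "no lines mentioning 'batch' in $1"
}

check_cuda
check_link data/cityscapes || true
check_link data/coco || true
if [ -n "${EXP:-}" ]; then
  show_batch_settings "upsnet/experiments/$EXP.yaml"
fi
```

A BROKEN line usually means $CITYSCAPES_ROOT or $COCO_ROOT pointed somewhere else when the link was created; removing the link and recreating it with the correct path resolves it.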

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that advancements such as UPSNet are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team continually explores new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.