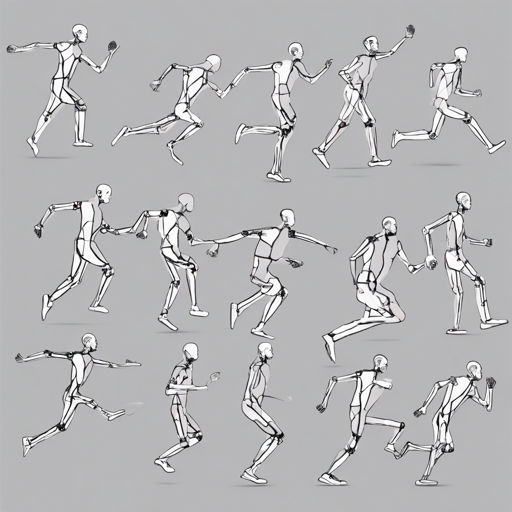

Have you ever wondered how computers recognize human actions and poses? Human action classification through pose estimation has become an active area of applied artificial intelligence. In this blog, we’ll walk through the process and get you started on building your own project using an OpenPose implementation built on TensorFlow.

Getting Started: Dependencies

Before diving into the coding world, you’ll need to ensure you have the right tools to work with. Here are the prerequisites:

- Python 3

- TensorFlow (preferably the GPU build of version 1.13.0, for better performance)

- OpenCV 3

- TensorFlow Slim

- Sliding Window (available at GitHub)
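
A quick way to see which of these packages are already installed is to try importing each one and report its version. The snippet below is a minimal sketch, not part of the project; the helper name `check_modules` is hypothetical, and the import names you pass in (for example `tensorflow`, `cv2` for OpenCV, and `slidingwindow` for the Sliding Window package) are assumptions you should confirm against your own environment.

```python
import importlib


def check_modules(names):
    """Return {module name: version string, "unknown", or None if not importable}."""
    report = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            # The module is missing (or one of its own imports is missing).
            report[name] = None
        else:
            # Not every module exposes __version__, so fall back to "unknown".
            report[name] = getattr(module, "__version__", "unknown")
    return report
```

Calling `check_modules(["tensorflow", "cv2", "slidingwindow"])` returns a dictionary in which any entry set to `None` still needs to be installed.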

Downloading the Project

To get started, clone the project to your local environment:

$ git clone https://github.com/dronefreak/human-action-classification.git

$ cd human-action-classification

Before running any scripts, confirm that the package versions installed in your environment are compatible with the dependencies listed above.

Pose Estimation and Action Classification

You’re now ready to perform pose estimation and action classification. You can process a single image or utilize your webcam for real-time analysis. Here’s how:

For a Single Image

$ python3 run_image.py --image=1.jpg

Remember to change the address variable in the script so that it matches the paths on your own machine. If it still points to a location from someone else’s setup, the script may not find the files it needs.
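
If you have a whole folder of images, one option is to call the same script once per file. The sketch below only builds on the `--image` flag shown above; the helper names `build_commands` and `run_all` are hypothetical, and whether the script accepts paths outside the project folder depends on how it combines `--image` with the address variable, so treat that as an assumption to verify.

```python
import subprocess
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_commands(image_dir, script="run_image.py"):
    """Return one argv list per image file found directly inside image_dir."""
    images = sorted(
        p for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    # sys.executable reuses the interpreter that is running this helper.
    return [[sys.executable, script, f"--image={p}"] for p in images]


def run_all(image_dir):
    """Run the script on every image, stopping at the first failure."""
    for argv in build_commands(image_dir):
        subprocess.run(argv, check=True)
```

Run it from the project root, for example with `run_all("samples")`, where `samples` is a placeholder folder name.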

For Webcam Input

$ python3 run_webcam.py

During tests, inference on a single image took about 1.423 seconds on average. Expect this figure to vary with your hardware, particularly with whether a GPU is available.
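
To get a comparable number on your own machine, you can time repeated calls to whatever function performs inference. This is a generic timing sketch, not the method used for the figure above; `average_seconds` is a hypothetical helper, and `fn` stands in for your own inference call.

```python
import time


def average_seconds(fn, runs=5, warmup=1):
    """Average wall-clock seconds per call of fn() over `runs` timed calls."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        # Untimed calls: the first call often includes one-off setup cost.
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs
```

Keeping the warm-up calls out of the measurement matters here because the first pass through a TensorFlow graph is usually slower than later ones.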

Training on Custom Datasets

For those looking to classify specific poses or scenes, you will need to set up a properly structured dataset. Here’s a recommended directory structure:

training
├── README.md
├── Action-1
│   ├── file011.jpg
│   └── file012.jpg
└── Action-2
    ├── file021.jpg
    └── file022.jpg
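
Before training, it can help to confirm that every action folder actually contains images. The following is a small sketch under the assumption that each subfolder of `training` is one class and that images use common extensions; `summarize_dataset` is a hypothetical helper, not part of the project.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def summarize_dataset(root):
    """Map each class folder under root to the number of image files it holds."""
    counts = {}
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip top-level files such as README.md
        counts[class_dir.name] = sum(
            1 for p in class_dir.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts
```

For the layout shown above, `summarize_dataset("training")` returns `{"Action-1": 2, "Action-2": 2}`. A folder that reports zero images is worth fixing before you start a training run.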

Once your dataset is organized, you can begin training with the following command:

$ python3 scripts/retrain.py --model_dir=tf_files/retrained_graph.pb --output_labels=tf_files/retrained_labels.txt --image_dir=training

Make sure to explore the options available in the retrain script. You can tweak parameters like learning rate and training steps as needed.

Common Errors and Troubleshooting

If you encounter issues such as “No module named _pafprocess”, you will need to build the C++ library for pafprocess. Here’s how you can do it:

$ cd tf_pose/pafprocess

$ swig -python -c++ pafprocess.i

$ python3 setup.py build_ext --inplace
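
After the build finishes, you can check that a compiled extension file was actually produced. This sketch assumes the in-place build writes a file whose name starts with `_pafprocess` and ends in `.so` (Linux/macOS) or `.pyd` (Windows) into `tf_pose/pafprocess`; `find_built_extension` is a hypothetical helper.

```python
from pathlib import Path


def find_built_extension(pafprocess_dir="tf_pose/pafprocess"):
    """Return compiled _pafprocess files found in pafprocess_dir (may be empty)."""
    folder = Path(pafprocess_dir)
    if not folder.is_dir():
        return []
    return sorted(
        p for p in folder.glob("_pafprocess*")
        if p.suffix in {".so", ".pyd"}
    )
```

An empty list suggests the build did not complete, or that it ran with a different Python interpreter than the one you use to run the scripts.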

For further assistance, consider exploring online resources or communities dedicated to AI projects. For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

In Summary: An Analogy

Think of human action classification and pose estimation as teaching a child to recognize different activities. Initially, you show them pictures of kids sitting or standing, and they learn to classify these actions. If you introduce various scenes, like playgrounds or classrooms, the child learns to correlate the actions with their surroundings. Just like that, by training the models on provided datasets, we enable machines to understand human behavior in context.