Welcome to the world of image search powered by OpenAI’s CLIP (Contrastive Language-Image Pre-Training) model! This technology bridges the gap between images and text, allowing you to retrieve images with either a text query or an image query. In this post, we’ll walk through setting up your own image search engine, from downloading a dataset to running a search app. Let’s dive in!

What is CLIP?

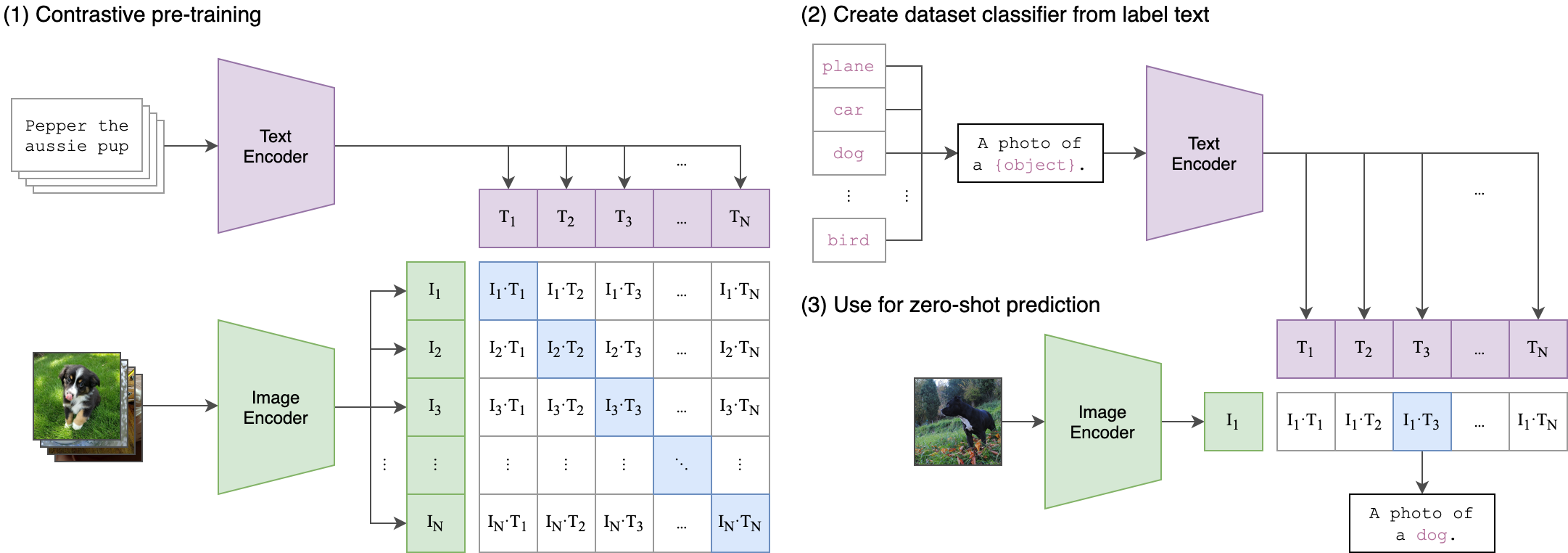

CLIP is a neural network trained on a diverse set of image and text pairs. It maps both images and text into the same latent space, so the similarity between a piece of text and an image (or between two images) can be measured by comparing their vectors. Imagine a library where pictures and books are shelved side by side according to topic; that’s how CLIP organizes images and text.
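To make the shared latent space concrete, here is a minimal sketch of ranking images against a text query by cosine similarity. The three-dimensional vectors are toy values made up for illustration, not real CLIP output (real embeddings have hundreds of dimensions), and `rank_images` is a hypothetical helper rather than part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_images(query_vec, image_vecs):
    """Return image names sorted from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), name)
              for name, vec in image_vecs.items()]
    return [name for _, name in sorted(scored, reverse=True)]

# Toy vectors standing in for real CLIP embeddings (hypothetical values).
image_embeddings = {
    "dogs.jpg": [0.9, 0.1, 0.0],
    "beach.jpg": [0.1, 0.9, 0.2],
    "city.jpg": [0.0, 0.2, 0.9],
}
# Pretend this vector came from encoding the text query "two dogs".
text_embedding = [0.8, 0.2, 0.1]

ranking = rank_images(text_embedding, image_embeddings)
print(ranking)
```

Because text and images live in the same space, the same ranking function works unchanged when the query vector comes from an image instead of a sentence.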

Setting Up Your Image Search Engine

Follow these steps to create your own image search engine using the CLIP model:

Step 1: Install Dependencies

pip install -e . --no-cache-dir

Step 2: Download the Unsplash Dataset

Use the command below to download the Unsplash dataset, which contains a treasure trove of images.

python scripts/download_unsplash.py --image_width=480 --threads_count=32

This script extracts the image metadata and downloads the images. Some downloads may fail; if issues arise, refer to the known issues.
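If you want to re-fetch failed images yourself, a retry loop with exponential backoff is one common approach. The sketch below is an illustration only: `download_with_retries` and `fetch_bytes` are hypothetical helpers, and nothing here describes how `download_unsplash.py` handles failures internally.

```python
import time
from urllib.request import urlopen

def fetch_bytes(url):
    """Download a URL and return its raw bytes (raises OSError on network failure)."""
    with urlopen(url, timeout=10) as response:
        return response.read()

def download_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it succeeds or max_attempts is reached.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts.
    Returns the fetched value, or None if every attempt raised OSError.
    """
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except OSError:
            # urllib's URLError is a subclass of OSError, so it is caught here.
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return None
```

A call such as `download_with_retries(fetch_bytes, image_url)` would then return the image bytes, or `None` once the attempts are exhausted, so failed URLs can be collected and reported at the end.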

Step 3: Create the Index and Upload Image Feature Vectors to Elasticsearch

The next step is to process the images through the pretrained CLIP model and upload the resulting feature vectors to Elasticsearch. Run the following command:

python scripts/ingest_data.py

Step 4: Build Docker Image for AWS Lambda

Now, it’s time to build your Docker image to deploy on AWS Lambda.

docker build --build-arg AWS_ACCESS_KEY_ID=YOUR_AWS_ACCESS_KEY_ID \
    --build-arg AWS_SECRET_ACCESS_KEY=YOUR_AWS_SECRET_ACCESS_KEY \
    --tag clip-image-search \
    --file server/Dockerfile .

Next, run the Docker container with the following command:

docker run -p 9000:8080 -it --rm clip-image-search

Step 5: Test the Container

Once the container is running, you can test it using a POST request:

curl -XPOST http://localhost:9000/2015-03-31/functions/function/invocations -d '{"query": "two dogs", "input_type": "text"}'

Step 6: Run Streamlit App

Finally, to interact with your image search engine, run the Streamlit app:

streamlit run streamlit_app.py

Possible Improvements

- Consider using a dimensionality reduction technique like PCA to reduce storage costs and speed up queries.

- To scale to billions of images, think about binarizing the features.
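As a rough illustration of the binarization idea, the sketch below keeps only the sign of each feature component and compares the resulting bit codes by Hamming distance. Sign thresholding is just one simple binarization scheme, chosen here as an assumption; the feature values are made up, and `binarize` and `hamming_distance` are hypothetical helpers.

```python
def binarize(vector):
    """Pack the sign of each component into an int: bit i is 1 when vector[i] > 0."""
    bits = 0
    for i, value in enumerate(vector):
        if value > 0:
            bits |= 1 << i
    return bits

def hamming_distance(a, b):
    """Number of bit positions where the two codes differ."""
    return bin(a ^ b).count("1")

# Toy 4-dimensional features standing in for real image vectors (hypothetical values).
features = {
    "dogs.jpg": [0.4, -0.2, 0.7, -0.1],
    "beach.jpg": [-0.3, 0.5, -0.6, 0.2],
}
codes = {name: binarize(vec) for name, vec in features.items()}
query_code = binarize([0.5, -0.1, 0.3, -0.4])

# The nearest image is the one whose code differs from the query in the fewest bits.
nearest = min(codes, key=lambda name: hamming_distance(query_code, codes[name]))
print(nearest)
```

The appeal is storage and speed: a 512-dimensional float32 vector, for example, takes 2048 bytes, while its 512-bit code takes 64 bytes, and Hamming distance reduces to an XOR plus a bit count. The trade-off is some loss in ranking accuracy.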

Troubleshooting

If you encounter any issues during the setup or execution of your image search engine, here are a few troubleshooting ideas:

- Ensure that your AWS credentials are correctly set.

- Check for any error messages during the image download process; retry if necessary.

- Consult the logs generated by Docker to identify any container-related issues.
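When debugging the container, it can help to send the test request from Python so that the status code and response body are easy to inspect. The sketch below mirrors the curl command from Step 5 using only the standard library. The URL and payload come from that command; `build_request` and `invoke` are hypothetical helper names, and the response format is an assumption, so the code falls back to raw text if the body is not JSON.

```python
import json
from urllib.request import Request, urlopen

# Endpoint exposed by the `docker run -p 9000:8080` command in Step 4.
INVOKE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"

def build_request(query, input_type="text", url=INVOKE_URL):
    """Build a POST request carrying the same JSON payload as the curl command."""
    body = json.dumps({"query": query, "input_type": input_type}).encode("utf-8")
    return Request(url, data=body,
                   headers={"Content-Type": "application/json"},
                   method="POST")

def invoke(query, input_type="text", timeout=30):
    """Send the request and return (status, parsed JSON or raw text)."""
    with urlopen(build_request(query, input_type), timeout=timeout) as response:
        raw = response.read().decode("utf-8")
        try:
            return response.status, json.loads(raw)
        except json.JSONDecodeError:
            # The response format is not documented here, so keep the raw text.
            return response.status, raw
```

Calling `status, result = invoke("two dogs")` while the container is running should return the search response. A connection error at this point usually means the container is not running or the port mapping differs from `9000:8080`.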

Conclusion

Now that you’ve set up your image search engine using CLIP, you can explore the fascinating world of image retrieval! Use it to search for images with text or other images as queries and unlock the potential of powerful AI technology. Happy searching!