Welcome to Nomic-BERT-2048! This BERT model supports a maximum sequence length of 2048 tokens and was pretrained on Wikipedia and BookCorpus. If you want to use it in your machine learning projects, you’re in the right place. This guide walks through loading the tokenizer and model, running masked language modeling, and setting the model up for sequence classification.

Understanding Nomic-BERT-2048

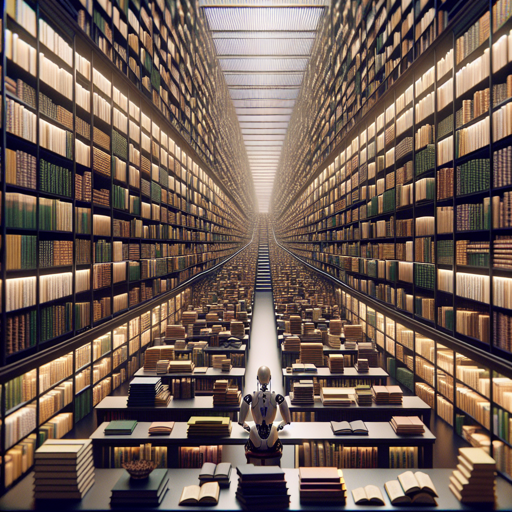

Think of Nomic-BERT-2048 as a skilled librarian with a large library (the training data) who can also keep a much longer passage in view at once (2048 tokens). The original BERT is limited to 512 tokens per input, while Nomic-BERT-2048 can take in sequences four times as long. This longer context helps on language tasks where the relevant information is spread across a long document.

Pretraining Data

Nomic-BERT was trained on Wikipedia and BookCorpus.

During pretraining, documents are tokenized and packed into sequences of 2048 tokens. If a document is shorter than 2048 tokens, further documents are appended until the sequence is full. If a document is longer than 2048 tokens, it is split across multiple sequences.
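The packing behaviour described above can be illustrated with a small sketch. This is not the actual pretraining code: the function name `pack_documents` is hypothetical, documents are represented as plain lists of token IDs, and the handling of separator tokens and of the final partial sequence is an assumption, since the source does not specify either.

```python
from typing import Iterable, List


def pack_documents(docs: Iterable[List[int]], max_len: int = 2048) -> List[List[int]]:
    """Concatenate tokenized documents and cut the stream into max_len chunks.

    Short documents end up sharing a sequence with the documents that follow;
    long documents are split across several sequences.
    """
    packed: List[List[int]] = []
    buffer: List[int] = []
    for doc in docs:
        buffer.extend(doc)
        # Emit as many full sequences as the buffer currently holds.
        while len(buffer) >= max_len:
            packed.append(buffer[:max_len])
            buffer = buffer[max_len:]
    if buffer:
        # Assumption: keep the trailing partial sequence instead of dropping it.
        packed.append(buffer)
    return packed


# Toy example with max_len=4 instead of 2048 so the result is easy to read.
docs = [[1, 2, 3], [4, 5], [6, 7, 8, 9, 10, 11, 12]]
print(pack_documents(docs, max_len=4))
```

In the toy example, the first two short documents are merged into one sequence and the long third document is spread over the remaining ones.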

Using Nomic-BERT-2048

Ready to use this impressive model? Here’s how to get started!

Step 1: Importing Required Libraries

Import the classes you need from the Hugging Face Transformers library:

from transformers import AutoModelForMaskedLM, AutoConfig, AutoTokenizer, pipeline

Step 2: Tokenizing and Configuring the Model

You can load the tokenizer and configuration like this:

tokenizer = AutoTokenizer.from_pretrained('bert-base-uncased') # `nomic-bert-2048` uses the standard BERT tokenizer

config = AutoConfig.from_pretrained('nomic-ai/nomic-bert-2048', trust_remote_code=True) # config needs to be passed in

Step 3: Loading the Pretrained Model

Now, load the model in the following manner:

model = AutoModelForMaskedLM.from_pretrained('nomic-ai/nomic-bert-2048', config=config, trust_remote_code=True)

Step 4: Performing Masked Language Modeling

To utilize this model for masked language modeling, you can use:

classifier = pipeline('fill-mask', model=model, tokenizer=tokenizer, device="cpu")

print(classifier("I [MASK] to the store yesterday."))

Step 5: Fine-tuning for Sequence Classification

If you wish to fine-tune it for a sequence classification task, here’s how:

from transformers import AutoConfig, AutoModelForSequenceClassification

model_path = "nomic-ai/nomic-bert-2048"

config = AutoConfig.from_pretrained(model_path, trust_remote_code=True)

# strict needs to be False since we're initializing new params (the classification head)
model = AutoModelForSequenceClassification.from_pretrained(model_path, config=config, trust_remote_code=True, strict=False)

Troubleshooting Tips

If you encounter any issues while using Nomic-BERT-2048, here are some troubleshooting ideas to assist you:

- Ensure that all dependencies and libraries are correctly installed and updated.

- Check that your environment supports the model configuration; sometimes, outdated packages can cause conflicts.

- For memory-related issues, consider optimizing data batch sizes.

- Don’t hesitate to consult the documentation for detailed solutions and guidance.
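Two of the tips above can be turned into small helpers. The following is a minimal sketch, not part of the model's own tooling: `installed_versions` and `batched` are hypothetical names, and the package list (`transformers`, `torch`) is an assumption about a typical setup.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterator, List, Optional, Sequence


def installed_versions(packages: Sequence[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    found: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


def batched(texts: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `texts` with at most `batch_size` items each."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(texts), batch_size):
        yield list(texts[start:start + batch_size])


# Assumed package names; adjust to whatever your environment actually uses.
print(installed_versions(["transformers", "torch"]))

# Smaller batches lower peak memory at the cost of more forward passes.
for batch in batched(["a [MASK] b.", "c [MASK] d.", "e [MASK] f."], batch_size=2):
    print(len(batch), batch)
```

Each batch yielded by `batched` can be passed to the pipeline in place of the full input list; if memory errors persist, reduce `batch_size` further.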


Conclusion

Nomic-BERT-2048 extends BERT to inputs of up to 2048 tokens, which makes it a useful option for language tasks that involve longer texts. With the tokenizer, configuration, and model loaded as shown in this guide, you can experiment with masked language modeling or fine-tune the model for sequence classification.
