Update 22-12-2021:

Added support for PyTorch Lightning 1.5.6 and cleaned up the code.

A collection of Variational AutoEncoders (VAEs) implemented in PyTorch with a focus on reproducibility. The aim of this project is to provide a quick and simple working example for many of the cool VAE models out there. All the models are trained on the CelebA dataset for consistency and comparison.

The architecture of all the models is kept as similar as possible with the same layers, except for cases where the original paper necessitates a radically different architecture (e.g., VQ VAE uses Residual layers and no Batch-Norm, unlike other models).

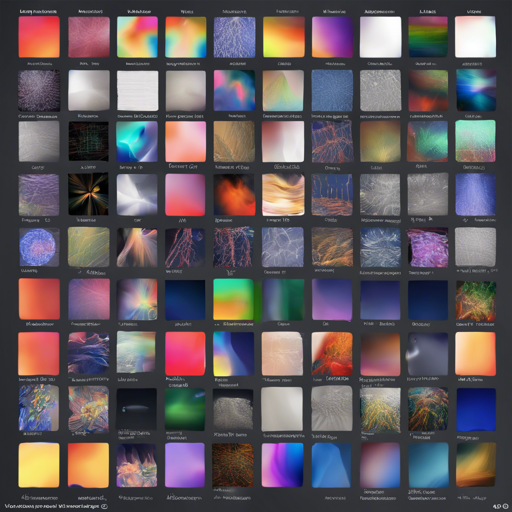

Here are the results of each model.

Requirements

- Python >= 3.5

- PyTorch >= 1.3

- PyTorch Lightning >= 0.6.0 (GitHub Repo)

- CUDA enabled computing device
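The requirements above can be checked quickly before installing anything else. The following is a minimal sketch, not part of the repository: the helper name `check_environment` is hypothetical, and it treats Python 3.5 as a lower bound. It only reports whether the `torch` and `pytorch_lightning` packages can be found, and it asks PyTorch about CUDA only when PyTorch is installed.

```python
import importlib.util
import sys


def check_environment():
    """Report whether the listed requirements appear to be satisfied.

    Hypothetical helper for illustration; it does not compare package versions.
    """
    report = {
        "python_ok": sys.version_info >= (3, 5),
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "lightning_installed": importlib.util.find_spec("pytorch_lightning") is not None,
        "cuda_available": None,  # stays None when PyTorch is not installed
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check still runs without PyTorch

        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(name, "=", value)
```

A `cuda_available` value of `False` means PyTorch is installed but cannot see a CUDA device, which usually points to a driver problem or a CPU-only build.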

Installation

To set up the project on your local machine, follow these steps:

$ git clone https://github.com/AntixK/PyTorch-VAE

$ cd PyTorch-VAE

$ pip install -r requirements.txt

Usage

To run the VAE, use the following commands:

$ cd PyTorch-VAE

$ python run.py -c configs/config-file-name.yaml

Config File Template

    model_params:
      name: "<name of VAE model>"
      in_channels: 3
      latent_dim: .
      # Other parameters required by the model

    data_params:
      data_path: "<path to the celebA dataset>"
      train_batch_size: 64 # Better to have a square number
      val_batch_size: 64
      patch_size: 64 # Models are designed to work for this size
      num_workers: 4

    exp_params:
      manual_seed: 1265
      LR: 0.005
      weight_decay: .
      # Other arguments required for training, like scheduler etc.

    trainer_params:
      gpus: 1
      max_epochs: 100
      gradient_clip_val: 1.5

    logging_params:
      save_dir: logs
      name: "<experiment name>"

View TensorBoard Logs

Run the following command to visualize your training results:

$ cd logs/experiment_name/version_you_want

$ tensorboard --logdir .

Important Note

The default dataset is CelebA; however, issues have been reported with downloading the dataset from Google Drive due to some file structure changes. It is recommended to download the file directly from Google Drive and extract it to the path of your choice. The default path assumed in the config files is Data/celeba/img_align_celeba, but you can change it according to your preference.
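After a manual download, it can help to confirm that the extracted images are where the config expects them. The sketch below is an illustration only: the helper name `count_celeba_images` is hypothetical, and it assumes the extracted folder holds the `.jpg` files directly, as the aligned CelebA archive does.

```python
from pathlib import Path


def count_celeba_images(data_dir):
    """Count .jpg files directly inside data_dir; raise if the folder is missing."""
    folder = Path(data_dir)
    if not folder.is_dir():
        raise FileNotFoundError("dataset folder not found: {}".format(folder))
    return sum(1 for p in folder.iterdir() if p.suffix.lower() == ".jpg")


if __name__ == "__main__":
    # Default path assumed by the config files, per the note above.
    default_dir = "Data/celeba/img_align_celeba"
    try:
        print(count_celeba_images(default_dir), "images found")
    except FileNotFoundError as err:
        print(err)
```

A count of zero usually means the archive was extracted one level too deep or too shallow relative to the configured path.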

Results

| Model | Paper | Reconstruction | Samples |
|---|---|---|---|
| VAE (Code, Config) | Link | | |

Troubleshooting

If you encounter issues during setup or execution, try the following:

- Ensure that your Python version is compatible. The project requires Python 3.5 or later.

- Make sure all dependencies are installed by checking the requirements.txt file.

- If you have issues with CUDA, confirm that your device has CUDA enabled and is set up correctly.

- In case of configuration errors, verify paths in your YAML config file and correct them accordingly.

- If dataset download issues persist, follow the manual download instructions as outlined above.
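As a rough illustration of the configuration check in the list above, the sketch below inspects a config that has already been loaded into a dictionary, for example with PyYAML's `yaml.safe_load`. The helper name `find_config_problems` and the specific checks are assumptions made for illustration and are not part of the repository. The section names come from the config file template.

```python
import os

# Section names taken from the config file template in this README.
REQUIRED_SECTIONS = (
    "model_params",
    "data_params",
    "exp_params",
    "trainer_params",
    "logging_params",
)


def find_config_problems(config):
    """Return a list of readable problems found in a loaded config dict.

    Hypothetical helper: the checks are illustrative, not exhaustive.
    """
    problems = []
    for section in REQUIRED_SECTIONS:
        if not isinstance(config.get(section), dict):
            problems.append("missing or malformed section: {}".format(section))

    data = config.get("data_params") or {}
    data_path = data.get("data_path")
    if not data_path or not os.path.isdir(str(data_path)):
        problems.append("data_path is not an existing directory: {!r}".format(data_path))

    # The template recommends a square number for the training batch size.
    batch = data.get("train_batch_size")
    if isinstance(batch, int) and batch > 0 and round(batch ** 0.5) ** 2 != batch:
        problems.append("train_batch_size {} is not a square number".format(batch))
    return problems
```

An empty list means none of these checks failed. It does not guarantee that the model-specific parameters are valid.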
