Autonomous driving technology is evolving rapidly, with companies vying for dominance in a field that promises to change how we travel. Each advance, however, brings scrutiny and debate. A recent full-page advertisement in the New York Times has sparked considerable discussion of Tesla’s Full Self-Driving (FSD) software, raising questions about the safety and reliability of the technology. This post explores the implications of the controversy and the need for rigorous standards in automated driving.

The Dawn Project’s Bold Claims

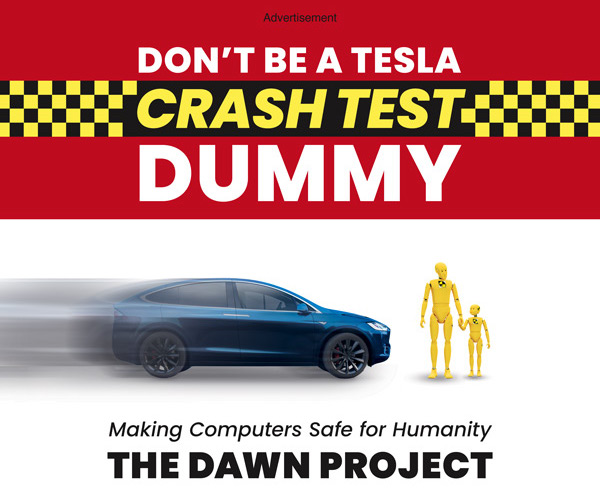

The advertisement was placed by The Dawn Project, a newly formed organization that advocates banning unsafe software in critical systems. It claims Tesla’s FSD is “the worst software ever sold by a Fortune 500 company.” The organization also offered $10,000 to anyone who could name another product with similar issues. The claim gets to the heart of concerns that many have about Tesla’s approach to autonomous driving.

Dan O’Dowd, the founder of The Dawn Project, also leads Green Hills Software, a company specializing in real-time operating systems for safety-critical applications. His dual role inevitably raises questions about potential bias. Nonetheless, his organization analyzed several FSD beta videos and alleged that the software made a critical error roughly once every eight minutes. The figure is sensational and rests on only several videos, but it opens a conversation about the safety protocols surrounding self-driving technology.

Spotlight on Regulation and Accountability

The rising concerns have prompted governmental entities, such as the California Department of Motor Vehicles (DMV), to reconsider their stance on Tesla’s testing practices. Other companies in the sector, such as Waymo, already face strict regulations for their testing procedures. Critics argue that Tesla’s method, which relies on consumers instead of professional test drivers, could create serious public safety risks. With federal regulators beginning to scrutinize Tesla more closely, the question arises: Should there be stricter regulations governing autonomous vehicle testing?

Real-World Impact and Consumer Experiences

Recent testimonials from Tesla owners reveal mixed experiences with the FSD system. Some users praised the improvements delivered in software updates, while others expressed significant safety concerns. Online forums and social media platforms, like the Tesla Motors subreddit, are full of anecdotes of erratic driving behavior, such as hesitation at empty intersections or unsafe lane changes, that raise alarm bells about the software’s reliability.

This dichotomy underscores the importance of consumer feedback in shaping the future of driving technology. With each update, it becomes increasingly crucial for Tesla to not only listen to its users but also to actively address their concerns in a transparent manner.

The Consequences of Inadequate Standards

The central issue is whether Tesla’s FSD should be allowed to operate on public roads without stringent regulation. The Dawn Project’s ad states pointedly, “We did not sign up for our families to be crash test dummies for thousands of Tesla cars being driven on public roads.” This sentiment captures a broader anxiety about autonomous driving: unregulated testing could pose serious risks to drivers and pedestrians alike. It also draws attention to the lack of comprehensive standards for evaluating the safety of software that manages critical driving functions.

Conclusion: The Path Forward for Autonomous Driving

The controversy surrounding Tesla’s Full Self-Driving software highlights the pressing need for enhanced regulation and accountability in the realm of autonomous vehicles. As technology continues to advance at a breakneck pace, stakeholders—including companies, regulators, and consumers—must cooperate to ensure that safety does not become an afterthought in the race for innovation. Clear guidelines and rigorous testing will be essential to fostering public trust in automated systems and ultimately paving the way for a safer driving future.
