In 2018, an autonomous vehicle operated by Uber fatally struck a pedestrian, raising urgent questions about the effectiveness of self-driving technology. In the discussion that followed, Mobileye, a leader in advanced driver assistance systems, demonstrated the potential of its computer vision software by running it on footage from the accident. According to Mobileye’s analysis, the pedestrian could have been detected a full second before impact, a finding that ignited debate about the failures of Uber’s system and the future of autonomous driving safety.

The Evidence of Failure

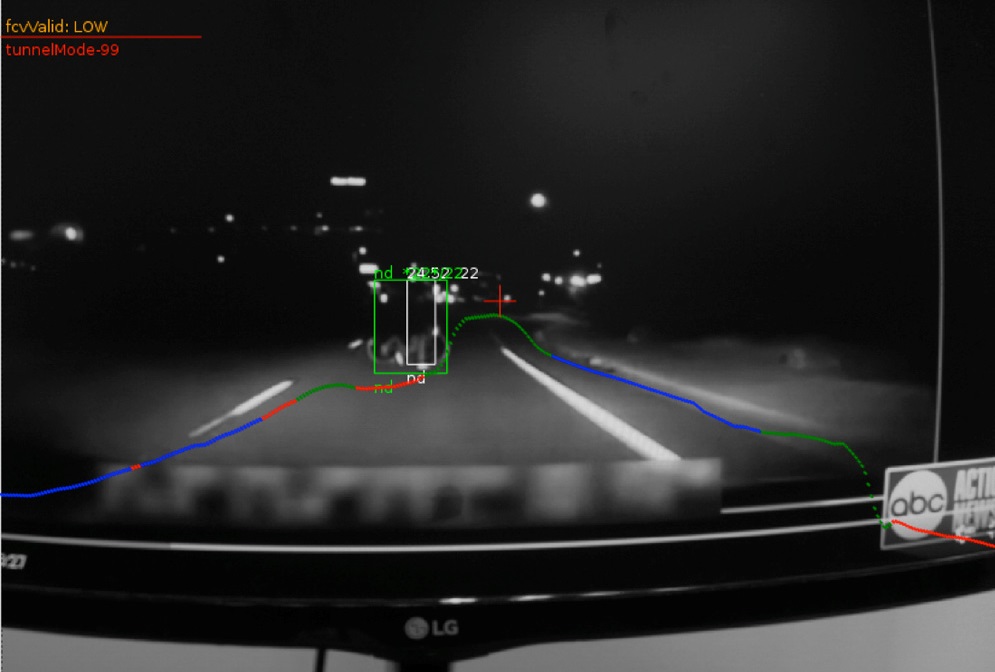

Mobileye’s CEO Amnon Shashua showcased how their computer vision technology effectively identified the pedestrian, Elaine Herzberg, and her bike from the degraded footage. This revelation served not as a boast about superiority, but as a sobering reminder of the responsibilities that come with entrusting machines with our safety.

- Inadequate Response: The analysis pointed to one glaring issue: the Uber system did not apply the brakes until after the collision, missing the critical window in which the tragedy might have been prevented.

- Technology at Hand: Many advanced self-driving vehicles carry an array of sensors, including radar and lidar, yet in this case those layers of technology failed to recognize an imminent hazard in time.

- Learning from Mistakes: As the industry moves forward, its reliance on computer vision algorithms makes continuous improvement, and learning from prior failures in the AI domain, essential.
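To put the one-second detection window in perspective, the short calculation below estimates how much speed a vehicle could shed if it braked during that second. It is a rough sketch under stated assumptions, not a reconstruction of the incident: the initial speed, the constant deceleration, and the system latency are all hypothetical illustrative values, and the function names are invented for this example.

```python
"""Rough sketch: what one second of earlier detection could buy.

All numeric values are illustrative assumptions, not figures from the incident.
"""


def speed_at_impact(initial_speed_mps, decel_mps2, warning_s, latency_s=0.0):
    """Speed left after braking at constant deceleration for the usable warning time.

    The usable braking time is the warning time minus any detection-to-brake
    latency; neither it nor the resulting speed may go below zero.
    """
    braking_time_s = max(0.0, warning_s - latency_s)
    return max(0.0, initial_speed_mps - decel_mps2 * braking_time_s)


def impact_energy_fraction(initial_speed_mps, impact_speed_mps):
    """Fraction of the original kinetic energy remaining (energy scales with speed squared)."""
    return (impact_speed_mps / initial_speed_mps) ** 2


if __name__ == "__main__":
    initial = 17.0  # assumed speed in m/s (roughly 38 mph)
    decel = 7.0     # assumed hard-braking deceleration in m/s^2
    for latency in (0.0, 0.5):  # assumed detection-to-brake delay in seconds
        v = speed_at_impact(initial, decel, warning_s=1.0, latency_s=latency)
        frac = impact_energy_fraction(initial, v)
        print(f"latency={latency:.1f}s  impact speed={v:.1f} m/s  energy fraction={frac:.2f}")
```

Under these assumed values, a full second of braking with no latency reduces the speed from 17 m/s to 10 m/s, leaving roughly 35% of the original kinetic energy. Half a second of latency cuts the benefit to 13.5 m/s, which is why detection alone is not enough without a fast path from detection to braking.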

The Technical Hurdles Ahead

While Mobileye’s demonstration showed that its software can detect obstacles even in grainy video, a production system must operate in real time and under varying conditions. Many argue that such after-the-fact showcases only scratch the surface of what is required:

- Real-World Data: Self-driving systems must be rigorously tested with diverse real-world scenarios to enhance their reliability, far beyond simulated environments or post-incident analyses.

- Human-like Decision Making: The transition from recognizing an object to taking appropriate safety measures requires a leap in AI reasoning capabilities, mimicking human reflexes in split-second decisions.

- Collaborative Efforts: The collaboration between companies, regulators, and researchers is vital in establishing a framework that prioritizes safety in AI development and deployment.

A Call to Action for the Industry

This incident is a stark reminder that the road to fully autonomous vehicles is riddled with challenges. Manufacturers must focus not only on technological advances but also on thorough safety protocols, testing under real-world conditions, and transparent communication about system capabilities and limitations.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Conclusion

The analysis provided by Mobileye paints a poignant picture of both the potential and pitfalls of AI in autonomous vehicles. It underscores the necessity for robust sensor systems, real-time decision-making algorithms, and a commitment to learning from past incidents. As we advance in this complex field, it is imperative that we foster collaboration among stakeholders and prioritize safety to build public trust in self-driving technology. For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.