As robotics continues to evolve, researchers are pushing the boundaries of what machines can perceive. Traditionally, robots have relied heavily on vision and touch to interact with their environment. Work at Carnegie Mellon University (CMU), however, adds a new dimension to robotic perception: sound. By studying the sounds objects make when a robot handles them, researchers aim to improve how robots interpret the world around them.

Robotics Meets Acoustics

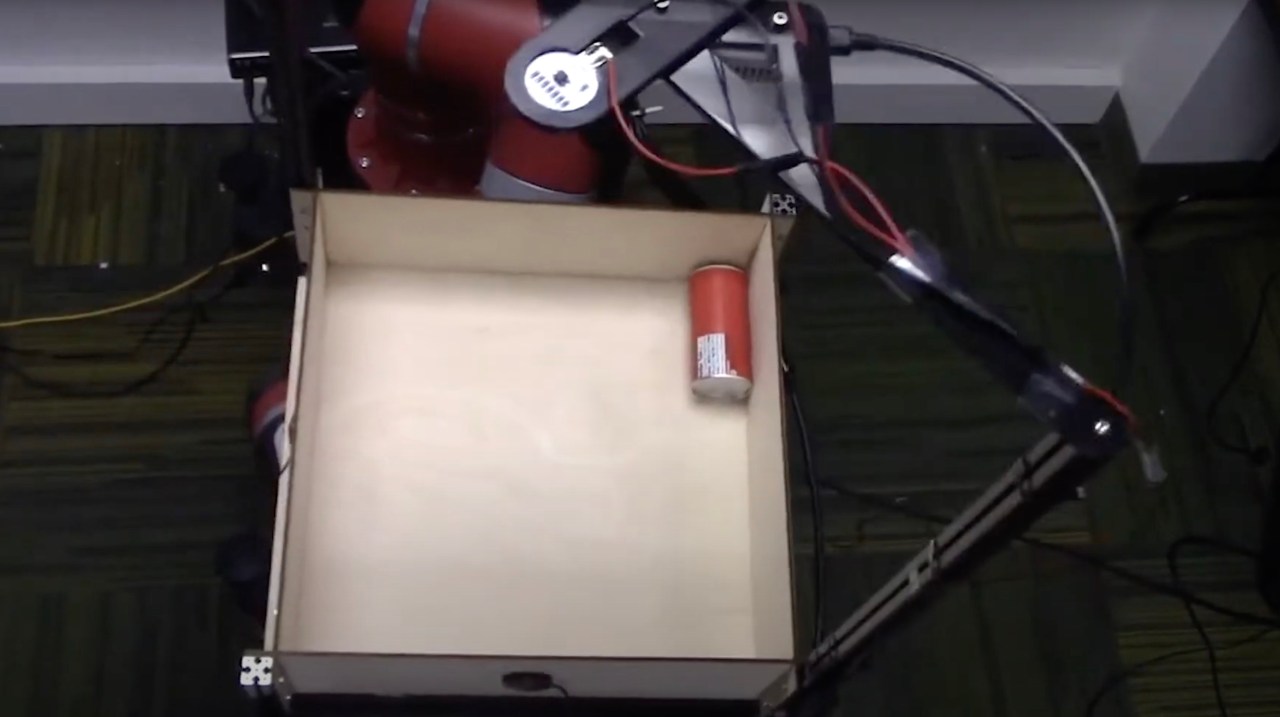

At the center of this research is an experiment built around Rethink Robotics’ Sawyer robot. The setup, dubbed “Tilt-Bot,” attaches a tray to the robot’s arm. As the robot tilts the tray, an object inside slides and strikes the walls, and the resulting sounds are recorded. The challenge was to identify objects from the sounds they produce during these interactions. Tilt-Bot handled 60 different objects, ranging from everyday items such as tennis balls and apples to tools such as screwdrivers and wrenches.

After recording 15,000 interactions, the system identified objects correctly 76% of the time. Notably, it could pick up on subtle differences in sound. For instance, it could tell a metal screwdriver from a wrench, which suggests that the sound of an interaction carries detailed information about the object that produced it.

Learning from Failures

Oliver Kroemer, an assistant professor at CMU, commented on the implications of the findings. He noted that when the robot did fail to identify an object, its failures were often predictable and understandable. For example, it struggled to tell apart nearly identical objects, such as a red block and a green block, while it could distinguish objects that differ more substantially, such as a block and a cup. This predictability suggests that the robot’s errors follow sensible patterns, which makes its limitations easier to anticipate. That is an important consideration in developing reliable AI systems.

Future Potential: Sound as a Sensing Tool

What does this mean for the future of robotics? Researchers believe that adding auditory processing to a robot’s sensors could be transformative. One idea is for robots to carry a “cane” and tap objects with it, inferring their properties from the sound of the impact. Such a capability could make robots more effective at tasks that require understanding material properties and object interactions in dynamic settings.

Implications for Robotics in Everyday Life

The potential applications for sound-enhanced robots are broad. Such technology could change how robots interact with people and navigate around obstacles in real-world environments. Household robots might use sound to recognize fragile items and handle them with care. In manufacturing, robots could flag faulty components by the sounds those components make during operation, strengthening quality control.

Conclusion: A Symphony of Innovation

The exploration of sound for robotic perception is a bold step forward for artificial intelligence. By combining auditory input with existing sensory capabilities, researchers at Carnegie Mellon University are paving the way for smarter, more intuitive machines. These advances are more than a technical curiosity: they could reshape how we interact with robots as robots become part of daily life.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.