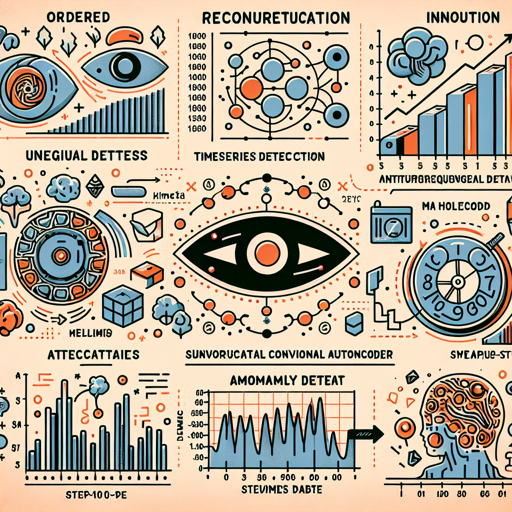

Timeseries anomaly detection is a technique for identifying unusual patterns in sequential data, such as sudden spikes or drops in a metric that otherwise follows a regular cycle. This blog post walks through implementing it with an autoencoder in Keras.

Background and Datasets

This tutorial demonstrates how to use a convolutional autoencoder, trained to reconstruct its input, to detect anomalies in timeseries data. We use the Numenta Anomaly Benchmark (NAB) dataset, which provides artificial timeseries data containing labeled anomalous periods of behavior. The data are ordered, timestamped, single-valued metrics. The underlying idea is that an autoencoder trained on normal behavior reconstructs normal windows well and anomalous windows poorly, so a large reconstruction error marks a likely anomaly.

Getting Started

To implement the model, follow these steps:

- Install necessary libraries like Keras and TensorFlow.

- Download the NAB dataset and prepare your timeseries data.

- Set the training hyperparameters as outlined below.
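Preparing the data mostly means normalizing the series and slicing it into fixed-length windows the convolutional model can consume. The sketch below is a minimal version under stated assumptions: the window length of 288 (one day of 5-minute samples) and the helper names `normalize` and `create_sequences` are illustrative choices, not taken from the original run.

```python
import numpy as np

# Assumed window length: with 5-minute samples, 288 steps cover one day.
TIME_STEPS = 288


def normalize(values, mean=None, std=None):
    """Z-score normalize a 1-D series.

    Pass the training mean/std when normalizing test data so that both
    splits share the same scale.
    """
    values = np.asarray(values, dtype="float32")
    if mean is None:
        mean = values.mean()
    if std is None:
        std = values.std()
    return (values - mean) / std, mean, std


def create_sequences(values, time_steps=TIME_STEPS):
    """Slice a 1-D series into overlapping windows.

    Returns an array of shape (num_windows, time_steps, 1), the
    (batch, steps, channels) layout that Conv1D layers expect.
    """
    values = np.asarray(values, dtype="float32")
    windows = [values[i : i + time_steps] for i in range(len(values) - time_steps + 1)]
    return np.stack(windows)[..., np.newaxis]
```

For example, after reading a NAB CSV with pandas (its `value` column holds the metric), normalize the training values, reuse the returned mean and std for the test values, and pass both results to `create_sequences`.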

Training Hyperparameters

The following hyperparameters were used during training:

- Optimizer:
  - Name: Adam
  - Learning Rate: 0.001
  - Decay: 0.0
  - Beta 1: 0.9
  - Beta 2: 0.999
  - Epsilon: 1e-07
  - AMSGrad: False
- Training Precision: float32
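These settings map directly onto the arguments of `keras.optimizers.Adam`. The sketch below shows one way to wire them into a convolutional autoencoder. The architecture (two strided Conv1D layers mirrored by Conv1DTranspose layers, with dropout) and the mean-squared-error loss are assumptions for illustration; the original post does not list its layers or loss. The `decay` value of 0.0 is omitted because it is a no-op and newer Keras optimizers no longer support that argument.

```python
import keras
from keras import layers

TIME_STEPS = 288  # assumed window length; must match the prepared sequences


def build_autoencoder(time_steps=TIME_STEPS):
    # Encoder: each strided Conv1D halves the sequence length (288 -> 144 -> 72).
    inputs = keras.Input(shape=(time_steps, 1))
    x = layers.Conv1D(32, 7, strides=2, padding="same", activation="relu")(inputs)
    x = layers.Dropout(0.2)(x)
    x = layers.Conv1D(16, 7, strides=2, padding="same", activation="relu")(x)
    # Decoder: transposed convolutions restore the length (72 -> 144 -> 288).
    x = layers.Conv1DTranspose(16, 7, strides=2, padding="same", activation="relu")(x)
    x = layers.Dropout(0.2)(x)
    x = layers.Conv1DTranspose(32, 7, strides=2, padding="same", activation="relu")(x)
    # Single output channel so the reconstruction has the input's shape.
    outputs = layers.Conv1DTranspose(1, 7, padding="same")(x)
    model = keras.Model(inputs, outputs)

    # Adam with the listed hyperparameters (decay=0.0 omitted as a no-op).
    optimizer = keras.optimizers.Adam(
        learning_rate=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-07, amsgrad=False
    )
    # Assumed reconstruction loss. float32 is Keras's default precision,
    # so no dtype policy needs to be set.
    model.compile(optimizer=optimizer, loss="mse")
    return model
```

Because the time dimension is halved twice and then doubled twice, the window length should be divisible by 4 for the output shape to match the input exactly.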

Understanding the Training Metrics

Training is monitored through the loss at each epoch. The training and validation losses recorded for this run were:

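| Epoch | Training loss | Validation loss |
|-------|---------------|-----------------|
| 1     | 0.011         | 0.014           |
| 2     | 0.011         | 0.015           |
| 3     | 0.010         | 0.012           |
| 4     | 0.010         | 0.013           |
| …     | …             | …               |
| 29    | 0.006         | 0.008           |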

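Both losses trend downward over the run (from 0.011 to 0.006 for training and from 0.014 to 0.008 for validation), with small epoch-to-epoch fluctuations in the validation loss. Because the validation loss stays close to the training loss, the model appears to be learning to reconstruct the timeseries rather than memorizing the training windows.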
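Losses like these come from fitting the model with the same windows as both input and target and holding out part of the data for validation. The sketch below assumes a compiled Keras autoencoder and windowed input of shape (windows, time_steps, 1); the 10% validation split, batch size, and EarlyStopping settings are assumptions, not values reported for this run.

```python
import keras


def train_autoencoder(model, x_train, epochs=50, batch_size=128):
    """Fit a compiled autoencoder to reconstruct its own input.

    x_train serves as both input and target. validation_split=0.1 holds out
    the last 10% of windows, which produces the val_loss values. EarlyStopping
    (an assumed setting) halts training once val_loss has not improved for
    5 epochs and restores the best weights seen.
    """
    early_stop = keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=5, mode="min", restore_best_weights=True
    )
    history = model.fit(
        x_train,
        x_train,
        epochs=epochs,
        batch_size=batch_size,
        validation_split=0.1,
        callbacks=[early_stop],
        verbose=0,
    )
    # Maps "loss" and "val_loss" to one value per completed epoch.
    return history.history
```

Printing `history["loss"]` and `history["val_loss"]` side by side gives a table like the one above.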

Model Summary and Visualization

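After training, calling `model.summary()` prints the layer-by-layer architecture and parameter counts. To inspect performance, plot the reconstructed sequences against the originals: regions where the reconstruction diverges sharply from the input are candidate anomalies and are easy to spot visually.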
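Turning that visual comparison into explicit anomaly flags requires a reconstruction-error threshold. The sketch below is one common approach, not necessarily the one used for this run: it computes the mean absolute error (MAE) per window, uses the largest training-window error as the threshold (an assumption; a high percentile is a looser alternative), and maps flagged windows back to individual data points. The function names are hypothetical.

```python
import numpy as np


def reconstruction_mae(x, x_pred):
    """Mean absolute error per window, averaged over time steps and features."""
    return np.mean(np.abs(x_pred - x), axis=(1, 2))


def detect_anomalies(train_x, train_pred, test_x, test_pred):
    """Flag test windows whose error exceeds the worst training error."""
    threshold = reconstruction_mae(train_x, train_pred).max()
    test_mae = reconstruction_mae(test_x, test_pred)
    return test_mae > threshold, threshold


def anomalous_points(window_flags, time_steps):
    """Map window flags (one per window start) back to data points.

    Point i is marked anomalous only if every window covering it
    (starts i - time_steps + 1 .. i) is flagged. Points near either end,
    which are covered by fewer than time_steps windows, are left unflagged.
    """
    window_flags = np.asarray(window_flags, dtype=bool)
    n_points = len(window_flags) + time_steps - 1
    point_flags = np.zeros(n_points, dtype=bool)
    for i in range(time_steps - 1, len(window_flags)):
        point_flags[i] = window_flags[i - time_steps + 1 : i + 1].all()
    return point_flags
```

Requiring every covering window to be flagged is deliberately conservative: it highlights the core of an anomalous region rather than every point that shares a window with it.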

Troubleshooting Tips

While implementing this model, you may encounter a few common issues:

- Resource Limitations: Training a convolutional autoencoder is much faster on a GPU. If none is available locally, consider a cloud GPU instance, or reduce memory use with a smaller batch size or a shorter window.

- Data Quality: Preprocess the data before training by filling or interpolating missing values and normalizing the series, since gaps and unscaled values can interfere with training.

- Overfitting: If the model performs well on training data but poorly on validation data, consider reducing the model complexity, increasing regularization (for example, dropout), or stopping training once the validation loss stops improving.
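For the data-quality tip, linear interpolation is one simple way to fill short gaps before normalizing. The helper below is a hypothetical sketch built on `np.interp`; longer outages may call for dropping the affected span instead, which this function does not handle.

```python
import numpy as np


def fill_missing(values):
    """Linearly interpolate NaN gaps in a 1-D series.

    NaNs before the first or after the last valid sample take the nearest
    valid value, which is np.interp's default edge behaviour.
    """
    values = np.asarray(values, dtype="float64").copy()
    missing = np.isnan(values)
    if missing.all():
        raise ValueError("series has no valid values to interpolate from")
    idx = np.arange(len(values))
    values[missing] = np.interp(idx[missing], idx[~missing], values[~missing])
    return values
```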

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.