Welcome to the fascinating world of Natural Language Processing (NLP), where large models can be distilled into smaller ones that stay effective while running far more efficiently. In this blog, we will explore TinyBERT, a compact yet powerful version of BERT designed for natural language understanding. It is 7.5x smaller and 9.4x faster at inference than BERT-base.

Why TinyBERT?

BERT (Bidirectional Encoder Representations from Transformers) opened new doors in NLP with its impressive performance. However, that performance comes with a hefty model size and computational cost. Enter TinyBERT, a clever distillation of BERT that retains most of its performance while running faster and needing fewer resources.

The Distillation Process

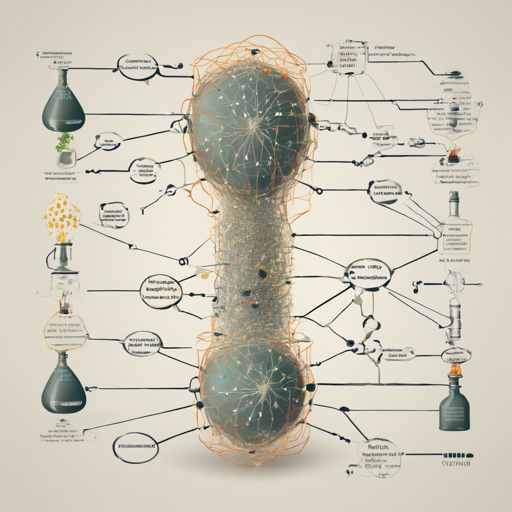

Imagine you have a massive, ancient library of knowledge: this is BERT. You decide to create a concise, easy-to-navigate guide for everyday users: this is TinyBERT. The distillation process is a teacher-student relationship in which the original BERT, used without any fine-tuning, acts as the teacher and imparts its knowledge to TinyBERT through a large-scale text corpus.

- **Teacher Model:** The original BERT-base serves as the guidance source.

- **Learning Data:** A broad text corpus is utilized to help TinyBERT learn from the general domain.

- **Two Stages:** Distillation occurs during both pre-training and task-specific learning, so TinyBERT starts from a solid general-purpose foundation before it is specialized for a task.
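To make the teacher-student idea concrete, here is a minimal, dependency-free sketch of two loss terms commonly used in this kind of distillation: a mean squared error between intermediate values (such as hidden states or attention scores) and a soft cross-entropy between the teacher's and the student's output logits. The function names and the toy numbers are our own assumptions for illustration, not code from the TinyBERT repository. A real setup would use a tensor library and, because the student is narrower than the teacher, would also project the student's hidden states to the teacher's width before comparing them, which this sketch skips.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Numerically stable log-softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    log_total = math.log(sum(math.exp(s - peak) for s in scaled))
    return [s - peak - log_total for s in scaled]


def softmax(logits, temperature=1.0):
    """Softmax probabilities derived from the stable log-softmax."""
    return [math.exp(v) for v in log_softmax(logits, temperature)]


def soft_cross_entropy(student_logits, teacher_logits, temperature=1.0):
    """Cross-entropy of the student's distribution against the teacher's soft targets."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_log_probs = log_softmax(student_logits, temperature)
    return -sum(t * s for t, s in zip(teacher_probs, student_log_probs))


def mse(student_values, teacher_values):
    """Mean squared error between two equally sized lists of values."""
    squared = [(s - t) ** 2 for s, t in zip(student_values, teacher_values)]
    return sum(squared) / len(squared)


# Toy values (hypothetical): one example with three output classes.
teacher_logits = [2.0, 0.5, -1.0]
student_logits = [1.5, 0.7, -0.8]

# Toy intermediate features, assumed to be the same width already.
teacher_hidden = [0.2, -0.4, 0.9]
student_hidden = [0.1, -0.3, 1.0]

prediction_loss = soft_cross_entropy(student_logits, teacher_logits)
hidden_loss = mse(student_hidden, teacher_hidden)
print(f"prediction loss: {prediction_loss:.4f}, hidden loss: {hidden_loss:.4f}")
```

Both terms shrink as the student's values move toward the teacher's, which is the signal the student is trained on during distillation.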

How to Use TinyBERT

Using TinyBERT for your NLP tasks is a breeze. Here’s a simplified approach:

- Clone TinyBERT from Huawei Noah’s model repository (TinyBERT Model Repository).

- Install the necessary libraries (e.g., PyTorch, Transformers) to start leveraging TinyBERT in your projects.

- Load the TinyBERT model and tokenizer in your Python environment, ready for natural language tasks.

- Utilize the model for your specific NLP problem, adjusting parameters as needed for optimal results.
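The loading step above can be sketched with the Hugging Face Transformers library. This is a minimal example under a few assumptions: that the general-distillation checkpoint is available on the Hugging Face Hub under the id `huawei-noah/TinyBERT_General_4L_312D`, that `torch` and `transformers` are installed, and that the first-token vector is a reasonable sentence representation for your use case. The helper name `embed_sentences` is our own.

```python
# Assumed Hub id for the general-distillation checkpoint; swap in a local path if you cloned it.
MODEL_ID = "huawei-noah/TinyBERT_General_4L_312D"


def embed_sentences(sentences, model_id=MODEL_ID):
    """Return one first-token ([CLS]) vector per input sentence."""
    # Imported here so the heavy libraries load only when the function is called.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModel.from_pretrained(model_id)
    model.eval()  # inference mode: disables dropout

    # Pad and truncate so sentences of different lengths fit in one batch.
    batch = tokenizer(sentences, padding=True, truncation=True, return_tensors="pt")

    with torch.no_grad():  # no gradients needed for inference
        outputs = model(**batch)

    # last_hidden_state has shape (batch, sequence_length, hidden_size).
    return outputs.last_hidden_state[:, 0, :]
```

Calling `embed_sentences(["TinyBERT is small and fast."])` should return a tensor with one row per sentence. Keep in mind that a general checkpoint like this one usually still needs task-specific fine-tuning or distillation before it performs well on a downstream task.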

Troubleshooting Tips

If you encounter any issues while working with TinyBERT, here are some troubleshooting ideas:

- **Installation Problems:** Ensure that all required libraries are correctly installed and compatible with your Python version.

- **Performance Issues:** Review your data preprocessing steps and make sure the text input matches what TinyBERT expects, for example that it is tokenized with the tokenizer that ships with the model.

- **Model Load Failures:** Verify the model path and check that you have an active internet connection if loading from a repository.

- Still need help? For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
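For the installation check mentioned in the list above, a quick way to see what is actually installed is to query package metadata from the standard library. This is a small sketch; the default package names `torch` and `transformers` are assumptions based on the libraries mentioned in this post, and the helper name `report_versions` is our own.

```python
from importlib import metadata


def report_versions(packages=("torch", "transformers")):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in report_versions().items():
    print(f"{name}: {version or 'not installed'}")
```

If a package shows up as not installed, or its version is older than the one your code was written against, reinstalling it in the active environment is usually the first thing to try.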

Conclusion

TinyBERT exemplifies the beauty of knowledge distillation in AI, striking a balance between efficiency and performance in NLP tasks. Whether you are handling text classification, sentiment analysis, or another language understanding task, TinyBERT offers a robust solution.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Further Reading

If you’re intrigued by the concepts behind TinyBERT, take a look at the original paper: TinyBERT: Distilling BERT for Natural Language Understanding.

By understanding and utilizing such models, we can embark on a journey of transforming how machines comprehend human language.