Language models play a central role in capturing the nuances of human language. SGPT-125M is a sentence-embedding model designed for sentence similarity tasks. This article explains how to use it, where to find its evaluation results, and how it was trained.

Usage of SGPT-125M

To use SGPT-125M in your own projects, follow the usage instructions in the official repository on GitHub: SGPT GitHub Repository.
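As a rough illustration of sentence-similarity usage, the sketch below ranks candidate sentences against a query by the cosine similarity of their embeddings. The helper name rank_by_similarity is hypothetical. The encode function is passed in so the logic runs without downloading a model. The commented sentence-transformers lines, including the model id, are assumptions to check against the instructions in the SGPT repository.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(
    encode: Callable[[List[str]], Sequence[Vector]],
    query: str,
    candidates: List[str],
) -> List[Tuple[str, float]]:
    """Hypothetical helper: embed the query and candidates in one call,
    then return (candidate, score) pairs sorted from most to least similar."""
    vectors = encode([query] + candidates)
    query_vec, candidate_vecs = vectors[0], vectors[1:]
    scored = [
        (text, cosine_similarity(query_vec, vec))
        for text, vec in zip(candidates, candidate_vecs)
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# With sentence-transformers installed, `encode` can be a loaded model's encode
# method. The model id is an assumption; confirm it in the SGPT repository:
#   from sentence_transformers import SentenceTransformer
#   model = SentenceTransformer("Muennighoff/SGPT-125M-weightedmean-nli-bitfit")
#   rank_by_similarity(model.encode, "A man is eating food.",
#                      ["A man eats a meal.", "A plane is taking off."])
```

Encoding the query and the candidates in a single call keeps them in one batch, which is usually faster than encoding each sentence separately.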

Evaluation Results

The performance of SGPT-125M is reported in the accompanying research paper: Evaluation Paper. It describes how well the model performs across a range of tasks.

Training Overview

SGPT-125M was trained with the following components:

- DataLoader: sentence_transformers.datasets.NoDuplicatesDataLoader.NoDuplicatesDataLoader, with a length of 8807 batches and a batch size of 64.
- Loss Function: sentence_transformers.losses.MultipleNegativesRankingLoss, configured with a scale parameter and cosine similarity as the similarity function.
- Fit Method Parameters:
  - epochs: 1
  - evaluation_steps: 880
  - max_grad_norm: 1
  - optimizer_class: transformers.optimization.AdamW
  - weight_decay: 0.01
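The DataLoader and the loss work as a pair. The pure-Python sketch below is not the sentence-transformers implementation; it only illustrates the idea behind each component. Batches are filled so that no text appears twice, and the loss asks each anchor to score its own positive above every other positive in the batch. The function names are hypothetical. The value scale=20.0 is an assumption (it is the library's default), because the text does not state the value that was used.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Pair = Tuple[str, str]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def no_duplicate_batches(pairs: List[Pair], batch_size: int) -> List[List[Pair]]:
    """Simplified sketch of the no-duplicates idea: greedily fill each batch,
    deferring any pair whose texts already appear in the current batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    pending = list(pairs)
    batches: List[List[Pair]] = []
    while pending:
        batch: List[Pair] = []
        seen = set()
        deferred: List[Pair] = []
        for pair in pending:
            if len(batch) < batch_size and not seen.intersection(pair):
                batch.append(pair)
                seen.update(pair)
            else:
                deferred.append(pair)  # retried in a later batch
        batches.append(batch)
        pending = deferred
    return batches


def multiple_negatives_ranking_loss(
    anchors: List[Vector], positives: List[Vector], scale: float = 20.0
) -> float:
    """Mean cross-entropy in which anchor i must pick positives[i] out of all
    positives in the batch; the other positives act as in-batch negatives."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [scale * cosine_similarity(anchor, p) for p in positives]
        # log-sum-exp with the max subtracted for numerical stability
        peak = max(logits)
        log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_norm - logits[i]
    return total / len(anchors)
```

Because the other positives in a batch serve as negatives, a repeated text in the same batch would be scored as a negative for an anchor it actually matches, which is why this loss is paired with a no-duplicates DataLoader. As for the fit parameters, a DataLoader of 8807 batches trained for one epoch gives 8807 training steps, so evaluation_steps: 880 runs an evaluation roughly every tenth of the epoch.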

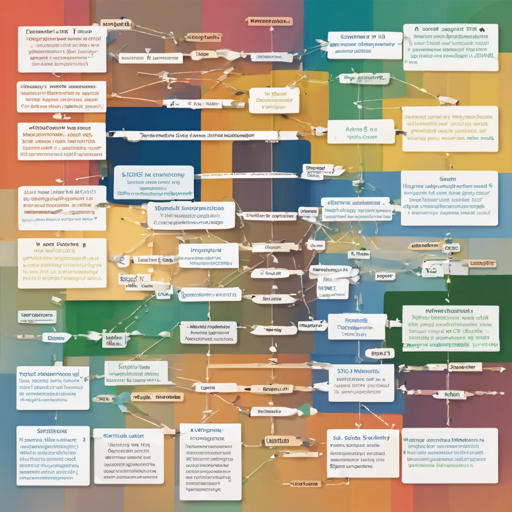

Understanding the Model Architecture

The architecture of SGPT-125M can be pictured as a multi-layered cake: each layer builds on the one beneath it, and together they turn raw text into an embedding that captures its meaning.

Here’s a breakdown of the architecture:

- Transformer Layer: The base layer of the cake. A GPTNeoModel encodes the input token sequence into contextual token embeddings.

- Pooling Mechanism: Acts like the frosting, averaging the per-token embeddings into a single fixed-size vector that represents the whole sentence.

- Dense Layers: These are additional layers of cake, fully connected layers with activation functions that further transform the pooled embedding.
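To make the pooling and dense steps concrete, here is a minimal pure-Python sketch that works on plain lists instead of tensors. It assumes plain (unweighted) mean pooling over non-padding tokens, as described above, and a tanh activation for the dense layer; both are illustrative assumptions, and the function names are hypothetical. The SGPT paper also proposes a position-weighted mean, so check the pooling configuration of the checkpoint you actually use.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def mean_pooling(token_embeddings: Matrix, attention_mask: List[int]) -> List[float]:
    """Average the token vectors marked as real tokens (1) in the attention
    mask, ignoring padding positions (0)."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            for k in range(dim):
                summed[k] += vec[k]
            count += 1
    return [s / max(count, 1) for s in summed]


def dense(
    x: List[float],
    weights: Matrix,
    bias: List[float],
    activation: Callable[[float], float] = math.tanh,  # tanh is an assumption
) -> List[float]:
    """Fully connected layer computing activation(W x + b),
    where weights has shape (out_dim, in_dim)."""
    return [
        activation(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]
```

For example, pooling the token vectors [1.0, 2.0] and [3.0, 4.0] with a padding token masked out yields [2.0, 3.0], which a dense layer then maps to its output dimension.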

Troubleshooting and Helpful Tips

If you run into problems while working with SGPT-125M, here are some troubleshooting ideas for common issues:

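- Problems with the DataLoader: Make sure the dataset is preprocessed correctly, with duplicates removed and each example in the expected structure. Duplicates matter because MultipleNegativesRankingLoss treats the other examples in a batch as negatives.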

- Training Errors: Check that the hyperparameters, such as the batch size and number of epochs, suit your dataset, and adjust them as needed.

- Model Performance: If results fall short of expectations, try revisiting the loss function or adjusting the number of training epochs.

For further insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions.

Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.