The MICCAI 2017 Robotic Instrument Segmentation Sub-Challenge set out to advance robot-assisted surgery by applying deep learning to the semantic segmentation of surgical instruments. In this article, we explore the winning solution for this challenge, the methods behind it, and how to apply them to similar projects.

Overview of the Challenge

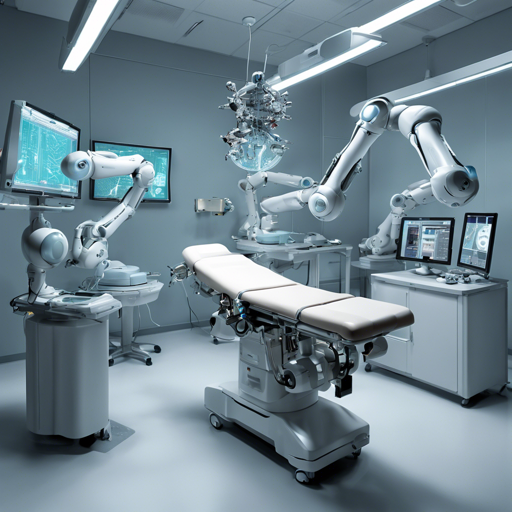

Segmenting robotic instruments is a key step in robot-assisted surgery, because tracking and pose estimation depend on knowing exactly where each instrument sits in the frame. The task is pixel-wise segmentation: in the binary case, every pixel of an image is classified as either instrument or background. The resulting masks support downstream analysis of the surgical video feed.

Our Winning Solution

The winning solution was based on the U-Net architecture and improved on it with two related encoder-decoder networks: LinkNet and TernausNet, the latter being a U-Net variant with a pre-trained VGG encoder. Together, these models delivered strong performance on both binary and multi-class segmentation of robotic instruments.

Methodology Explanation with Analogy

Think of our approach as a chef preparing a detailed gourmet meal. U-Net is the main recipe, providing a solid foundation. To enhance the dish, the chef adds flavors from LinkNet and TernausNet, which act like spices that improve the overall taste and presentation. Each ingredient (network architecture) contributes to the final product, making the model better at distinguishing between different surgical instruments in a video feed.

Data Used

The training data consisted of 225-frame sequences of stereo camera images captured during porcine procedures at high resolution (1920×1080 pixels). Each frame was hand-labeled with the instrument types it shows, which produced the annotated dataset needed to train the models.

Training and Implementation

The models were evaluated with the Jaccard index, also known as Intersection over Union (IoU), which measures the overlap between a predicted mask and the ground-truth mask. Both binary and multi-class segmentation were covered, and each stage was run through a series of Python scripts. Here’s a simplified breakdown of the training steps:

- Data Preprocessing: Preparation of cropped images from the original videos.

- Model Training: Leveraging various neural networks with specified parameters.

- Mask Generation: Producing segmentation masks from input images.

- Evaluation: Assessing model performance through calculated metrics.
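
To make the evaluation step concrete, here is a minimal sketch of the Jaccard index for a pair of binary masks. It is an illustration in NumPy, not the scripts used in the solution; the function name `jaccard_index` and the convention that two empty masks score 1.0 are assumptions.

```python
import numpy as np


def jaccard_index(y_true, y_pred):
    """Jaccard index (IoU) between two binary masks of the same shape."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    intersection = np.logical_and(y_true, y_pred).sum()
    union = np.logical_or(y_true, y_pred).sum()
    # Assumed convention: if neither mask contains an instrument pixel,
    # treat the prediction as a perfect match.
    if union == 0:
        return 1.0
    return float(intersection / union)


# Toy 2x3 masks: 1 = instrument, 0 = background.
truth = np.array([[1, 1, 0],
                  [0, 1, 0]])
pred = np.array([[1, 0, 0],
                 [0, 1, 1]])

# Two pixels agree as instrument, four pixels are instrument in either mask.
print(jaccard_index(truth, pred))  # 0.5
```

For multi-class segmentation, one common approach is to compute this score per class (comparing `mask == class_id` for each class) and average the results.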

Troubleshooting Ideas

If you encounter issues while implementing this solution, consider the following troubleshooting tips:

- Ensure all dependencies are properly installed according to the requirements specified in the README.

- Check the structure of your dataset to ensure it aligns with the expected formats for training and testing.

- Adjust learning rates and batch sizes if you face convergence issues during training.

- Utilize the provided bash scripts for consistent training across multiple folds and configurations.
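
If the idea of training across folds is unfamiliar, the sketch below shows one way to picture it. It is not the provided bash scripts: the helper names, the eight sequence IDs, and the four folds are all hypothetical values chosen for illustration. The point it demonstrates is that whole video sequences, rather than individual frames, are assigned to folds, since neighboring frames of one video are nearly identical and would otherwise leak between training and validation.

```python
def split_folds(sequence_ids, num_folds):
    """Assign whole sequences to folds in round-robin order."""
    folds = [[] for _ in range(num_folds)]
    for i, seq in enumerate(sequence_ids):
        folds[i % num_folds].append(seq)
    return folds


def train_val_split(folds, val_fold):
    """Use one fold for validation and the remaining folds for training."""
    val = list(folds[val_fold])
    train = [seq for i, fold in enumerate(folds) if i != val_fold for seq in fold]
    return train, val


# Hypothetical setup: 8 video sequences, 4 folds.
sequences = list(range(1, 9))
folds = split_folds(sequences, num_folds=4)

for val_fold in range(len(folds)):
    train, val = train_val_split(folds, val_fold)
    print(f"fold {val_fold}: train on {train}, validate on {val}")
```

Running the same training configuration once per fold and averaging the validation scores gives a steadier estimate than a single split.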


Results Achieved

TernausNet produced the best results: in binary segmentation it reached an Intersection over Union (IoU) of 0.836. Multi-class segmentation scores were lower, but still outperformed previous efforts. These findings support the effectiveness of the proposed methods.

Further Steps for Improvement

Enhancements can be made by:

- Incorporating additional data augmentation techniques during training.

- Extending training durations to improve model performance.

- Implementing techniques like cyclic learning rates or test-time augmentation for better prediction accuracy.
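
The last suggestion can be sketched in a few lines. The example below is an illustration under stated assumptions, not part of the original solution: `triangular_lr` is one simple form of a cyclic learning rate (a triangular schedule), `predict_with_flip_tta` is one simple form of test-time augmentation (averaging predictions over a horizontal flip), and `dummy_predict` is a hypothetical stand-in for a trained model.

```python
import numpy as np


def triangular_lr(step, base_lr, max_lr, half_cycle):
    """Triangular cyclic schedule: rise from base_lr to max_lr over
    half_cycle steps, fall back over the next half_cycle steps, repeat."""
    pos = step % (2 * half_cycle)
    frac = pos / half_cycle if pos <= half_cycle else 2 - pos / half_cycle
    return base_lr + (max_lr - base_lr) * frac


def predict_with_flip_tta(predict_fn, image):
    """Average the prediction on the image with the prediction on its
    horizontal mirror, flipped back so both maps line up."""
    direct = predict_fn(image)
    mirrored = predict_fn(image[:, ::-1])[:, ::-1]
    return (direct + mirrored) / 2.0


def dummy_predict(image):
    # Hypothetical stand-in for a model: "probability" 1.0 for bright pixels.
    return (image > 0.5).astype(float)


# Learning rate over one full cycle of 8 steps (hypothetical values).
schedule = [triangular_lr(s, base_lr=1e-4, max_lr=1e-3, half_cycle=4) for s in range(9)]
print(schedule)

# Flip-averaged prediction on a random grayscale frame.
rng = np.random.default_rng(0)
frame = rng.random((4, 6))
probs = predict_with_flip_tta(dummy_predict, frame)
print(probs.shape)
```

In a real pipeline, `predict_fn` would wrap the trained network, and the schedule value would be written into the optimizer before each training step.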

Demo and Utilizing Our Models

To start utilizing our models efficiently, refer to the demo example provided. It serves as a practical way to interact with the implemented methodologies.

Conclusion

The advances made in robotic instrument segmentation during MICCAI 2017 underscore the potential of deep learning in enhancing surgical precision. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.