The world of language models is ever-evolving, and one of the latest buzzwords making waves is “MoEification.” But what does it mean for developers and AI enthusiasts? In this article, we explore how MoEification slices the MLP layers of a traditional dense language model into multiple experts, turning it into a Mixture of Experts (MoE) model. Let’s unravel the details, shall we?

What is MoEification?

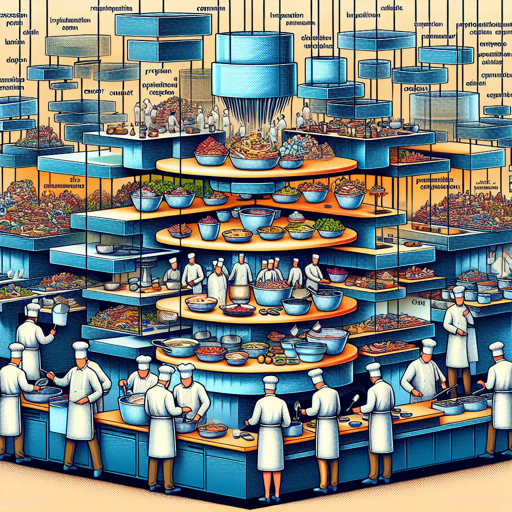

Imagine a busy restaurant kitchen (a layer) where many chefs (experts) each prepare part of every dish (the output). In a language model, MoEification slices each individual MLP layer into parts and lets each part act as an expert that a router can choose to activate. Here’s how it works:

- **Expert Count:** You split each MLP's projections along the intermediate dimension (the MLP's inner neurons) into a chosen number of equal slices, say 8, and each slice becomes an expert.

- **Parameter Adjustment:** Because the router averages the experts' outputs, you scale up the expert parameters (for example, multiplying each expert's down-projection by the number of experts) so that their combined output equals that of the original dense layer. Think of this as adding ingredients in proportion to maintain the taste of the dish.

- **Equal Initialization:** Initializing the router weights to zero gives every expert the same logit, so the softmax assigns each one an equal weight (1/8 with 8 experts) and no expert is favored at the start.
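The three steps fit together in a short identity. As a sketch, assume a simple MLP $y = W_{\text{down}}\,\sigma(W_{\text{up}}x)$ with an element-wise activation $\sigma$, and split its intermediate neurons into $N$ equal slices, where $W_{\text{up}}^{(i)}$ holds the rows of $W_{\text{up}}$ for slice $i$ and $W_{\text{down}}^{(i)}$ the matching columns of $W_{\text{down}}$. Expert $E_i$ is that slice with its down-projection scaled by $N$, and a zero router $W_r = 0$ gives every expert the same weight $g_i$:

$$
\begin{aligned}
E_i(x) &= N\,W_{\text{down}}^{(i)}\,\sigma\!\left(W_{\text{up}}^{(i)}x\right), \qquad g_i = \operatorname{softmax}(W_r x)_i = \frac{1}{N},\\
\sum_{i=1}^{N} g_i\,E_i(x) &= \sum_{i=1}^{N} W_{\text{down}}^{(i)}\,\sigma\!\left(W_{\text{up}}^{(i)}x\right) = W_{\text{down}}\,\sigma(W_{\text{up}}x) = y.
\end{aligned}
$$

The split is exact only because $\sigma$ acts element-wise, so each intermediate neuron depends on nothing but its own row of $W_{\text{up}}$. A gated (SwiGLU-style) MLP splits the same way, provided the gate projection is sliced alongside the up projection.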

The result? When all 8 experts are active, their combined output matches the original dense layer, so the model stays coherent. With fewer active experts (say, 4), part of each MLP's computation is skipped and coherence degrades, like a dish prepared without some of the chefs.
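A toy numerical check makes this concrete. The sketch below assumes a small random ReLU MLP, 8 equal slices, and Mixtral-style renormalization of the router weights over the selected experts; because a zero-initialized router ties every logit, it simply takes the first k experts. The name `moe_out` is hypothetical, and the relative error is only a rough proxy for coherence.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden, inter, num_experts = 16, 64, 8

# Toy dense MLP weights (ReLU activation is an assumption for simplicity)
w_up = rng.standard_normal((hidden, inter))
w_down = rng.standard_normal((inter, hidden))
x = rng.standard_normal(hidden)

h = np.maximum(x @ w_up, 0.0)  # intermediate activations of the dense MLP
dense_out = h @ w_down


def moe_out(k: int) -> np.ndarray:
    """Output with the first k of num_experts equal slices active.

    Tied router logits give each of the k selected experts weight 1/k after
    renormalization; each slice's down-projection is pre-scaled by num_experts.
    """
    slices = np.split(np.arange(inter), num_experts)
    out = np.zeros(hidden)
    for idx in slices[:k]:
        out += (1.0 / k) * (h[idx] @ (num_experts * w_down[idx]))
    return out


for k in (8, 4):
    err = np.linalg.norm(moe_out(k) - dense_out) / np.linalg.norm(dense_out)
    print(f"{k} experts: relative error vs dense = {err:.3f}")
```

With all 8 experts the error is zero up to floating-point rounding. With 4 experts, each active slice is weighted by 8/4 = 2 while the other half of the intermediate neurons contribute nothing, so the output drifts away from the dense layer.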

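Example of MoEification in code, as a minimal NumPy sketch that assumes a plain ReLU MLP and Mixtral-style top-k routing (the class layout is illustrative, not a specific library's API):

```python
import numpy as np

class MoELayer:
    def __init__(self, w_up, w_down, num_experts):
        self.num_experts = num_experts
        # Slice the dense MLP's intermediate dimension into equal expert chunks
        # (the intermediate size must be divisible by num_experts)
        self.w_up = np.split(w_up, num_experts, axis=1)
        # Amplify each down-projection by num_experts so that uniform routing
        # over all experts reproduces the dense layer's output
        self.w_down = [num_experts * w for w in np.split(w_down, num_experts, axis=0)]
        # Zero-initialized router: every expert starts with the same logit
        self.router = np.zeros((w_up.shape[0], num_experts))

    def forward(self, x, top_k):
        logits = x @ self.router
        # Select the top_k experts; a stable sort breaks the initial ties by index
        chosen = np.argsort(-logits, kind="stable")[:top_k]
        # Softmax over the selected experts only (renormalized top-k routing)
        weights = np.exp(logits[chosen] - logits[chosen].max())
        weights /= weights.sum()
        out = np.zeros(self.w_down[0].shape[1])
        for weight, e in zip(weights, chosen):
            out += weight * (np.maximum(x @ self.w_up[e], 0.0) @ self.w_down[e])
        return out
```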

Why Explore Variable Expert Counts?

What interests me is further training such models so that they learn to balance the number of active experts on their own. If that works, we could teach models to spend less computation on simple, predictable tokens and more on complex ones, much as a chef decides which tools each dish needs.
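One possible way to let the expert count vary per token, offered here as an illustrative assumption rather than the approach described above, is top-p (cumulative probability) routing: pick the smallest set of experts whose router probabilities add up to a threshold `p`. The function `top_p_experts` and its `p` parameter are hypothetical names for this sketch.

```python
import numpy as np


def top_p_experts(logits: np.ndarray, p: float = 0.5):
    """Return (expert indices, renormalized weights) for the smallest set of
    experts whose cumulative softmax probability reaches p (hypothetical)."""
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()
    # Sort experts from most to least probable (stable for tied logits)
    order = np.argsort(-probs, kind="stable")
    cumulative = np.cumsum(probs[order])
    # First position where the running total reaches p, plus one; capped at N
    count = min(int(np.searchsorted(cumulative, p)) + 1, len(logits))
    chosen = order[:count]
    weights = probs[chosen] / probs[chosen].sum()
    return chosen, weights


# Zero-initialized router: all 8 experts tie, so p = 0.5 needs 4 of them
print(top_p_experts(np.zeros(8), p=0.5))
# A confident router: one expert already carries most of the probability
print(top_p_experts(np.array([5.0, 0, 0, 0, 0, 0, 0, 0]), p=0.5))
```

Right after MoEification every expert has probability 1/8, so the threshold alone fixes the count. Only once further training sharpens the router can an easy token clear the threshold with fewer experts than a hard one.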

Troubleshooting MoEification

If you’re diving into the MoEification waters and encounter challenges, here are a few troubleshooting tips:

- **Model Coherence Issues:** Some loss of coherence is expected when you reduce the number of active experts. If the output is incoherent even with all experts active, double-check your parameter adjustments, since the full set of experts should reproduce the original layer.

- **Initialization Problems:** Make sure your router weights are actually initialized to zero. Otherwise, the initial routing weights will not be uniform and some experts will be favored from the start, much like an unbalanced recipe.

- **Performance Metrics:** Track output quality (for example, perplexity on held-out text) as you change the number of active experts. You may need to fine-tune how and when experts are used.
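The first two tips can be checked mechanically. This minimal NumPy sketch assumes the router is a `(hidden, num_experts)` weight matrix and that the dense down-projection is stored as an `(intermediate, hidden)` array split into equal contiguous row blocks, each scaled by the number of experts; `router_deviation` and `scaling_is_correct` are hypothetical helper names, not part of any library.

```python
import numpy as np


def router_deviation(router: np.ndarray, inputs: np.ndarray) -> float:
    """Largest gap between the router's softmax weights and the uniform 1/N.

    router: (hidden, num_experts) weights; inputs: (batch, hidden) activations.
    """
    logits = inputs @ router
    logits -= logits.max(axis=1, keepdims=True)  # numerically stable softmax
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)
    return float(np.abs(probs - 1.0 / router.shape[1]).max())


def scaling_is_correct(w_down: np.ndarray, expert_downs: list) -> bool:
    """Check that each expert's down-projection equals num_experts times its
    contiguous row slice of the dense down-projection (assumed layout)."""
    n = len(expert_downs)
    slices = np.split(w_down, n, axis=0)
    return all(np.allclose(e, n * s) for e, s in zip(expert_downs, slices))


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 16))
print(router_deviation(np.zeros((16, 8)), x))  # zero router: perfectly uniform
```

A zero router yields a deviation of exactly 0.0; any positive value means some experts are favored before training even starts.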


A Final Word

At **fxis.ai**, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

So, whether you’re a seasoned developer or a curious learner, MoEification presents a fascinating avenue to create smarter, more efficient language models. Let’s embrace the future of AI together!