XLM-RoBERTa is not just another language model; it’s like a polyglot genius, fluently understanding and processing 100 languages with ease. In this blog, we will guide you on how to leverage the capabilities of XLM-RoBERTa, along with troubleshooting tips for a smooth experience. Let’s dive into the world of multilingual AI!

What is XLM-RoBERTa?

XLM-RoBERTa is a large multilingual model pre-trained on 2.5TB of filtered CommonCrawl data covering 100 languages. It is based on the RoBERTa architecture and learns a bidirectional representation of sentences through the Masked Language Modeling (MLM) objective.

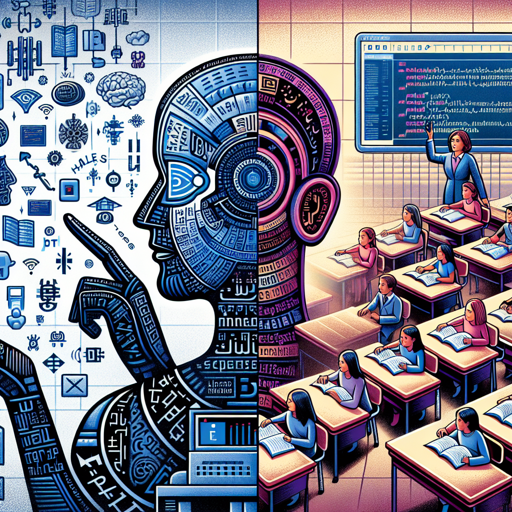

Understanding Masked Language Modeling (MLM): An Analogy

Imagine you’re in a classroom where a teacher gives you a sentence with some words missing, and your task is to fill those gaps. XLM-RoBERTa is trained the same way: it takes a sentence, randomly masks 15% of the words (more precisely, tokens), and has to predict the hidden ones. This is how it learns to use context across many languages:

- The student (XLM-RoBERTa) sees the whole sentence at once, with words on both sides of each gap, rather than reading one word at a time.

- By piecing together the clues left in the sentence, the model builds a mental model (internal representation) of the language.

Thus, it becomes a master of inferring meanings across diverse contexts, enhancing its performance on downstream tasks.
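To make the masking step concrete, here is a minimal sketch in plain Python. It is a simplification, not the actual pre-training code: it works on whitespace-separated words instead of the model's subword tokens, it masks a fixed share of positions, and it always inserts the mask token (real MLM recipes also sometimes keep or swap the selected token). The function name mask_tokens and its parameters are made up for this illustration.

```python
import random

MASK_TOKEN = "<mask>"  # the mask token string used by XLM-RoBERTa


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Hide roughly `mask_prob` of the tokens.

    Returns (masked_tokens, labels). `labels` holds the original token at
    each masked position and None everywhere else.
    """
    if not tokens:
        return [], []
    rng = random.Random(seed)
    # Assumption of this sketch: always mask at least one token so that
    # short sentences still give the "student" something to predict.
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = set(rng.sample(range(len(tokens)), n_to_mask))
    masked = [MASK_TOKEN if i in positions else tok for i, tok in enumerate(tokens)]
    labels = [tok if i in positions else None for i, tok in enumerate(tokens)]
    return masked, labels


tokens = "the quick brown fox jumps over the lazy dog".split()
masked, labels = mask_tokens(tokens, seed=0)
print(masked)  # the sentence the model sees
print(labels)  # the words it has to recover
```

During training, the model is scored only on the positions where labels is not None, which is what pushes it to use the surrounding context.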

How to Use XLM-RoBERTa

Masked Language Modeling

Below is how you can utilize XLM-RoBERTa for masked language modeling with Python:

from transformers import pipeline

unmasker = pipeline('fill-mask', model='xlm-roberta-large')

# The input must contain the model's mask token, <mask>
unmasker("Hello I'm a <mask> model.")

Getting Features of Text

To extract features from a text snippet using PyTorch, you can execute the following code:

from transformers import AutoTokenizer, AutoModelForMaskedLM

tokenizer = AutoTokenizer.from_pretrained('xlm-roberta-large')

model = AutoModelForMaskedLM.from_pretrained("xlm-roberta-large")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

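# output.logits holds one score per vocabulary entry for each input token;
# call model(**encoded_input, output_hidden_states=True) to also get hidden states
output = model(**encoded_input)

Intended Uses & Limitations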

XLM-RoBERTa is primarily designed for tasks such as:

- Sequence classification

- Token classification

- Question answering

However, XLM-RoBERTa is not built for text generation; for that, it’s better to explore models like GPT-2.

Troubleshooting Tips

While working with XLM-RoBERTa, you may encounter some common issues. Here’s how to tackle them:

- Model Not Found: Ensure you have the correct model name and check your internet connectivity.

- Environment Issues: Make sure you have the necessary libraries installed. Use pip install transformers to get started.

- Incompatible Versions: If you face errors, verify that your Transformers library is up-to-date.
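A quick way to check the environment and version points above is to ask Python which package versions are installed. The sketch below uses only the standard library (Python 3.8 or newer); the helper name installed_version is made up for this example, and the package list is an assumption based on the snippets in this post, which use Transformers with PyTorch tensors.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Packages the examples in this post rely on (assumed list for illustration)
for name in ("transformers", "torch"):
    version = installed_version(name)
    print(f"{name}: {version or 'not installed'}")
```

If a package shows up as not installed, install it with pip; if it is installed but errors persist, upgrading with pip install --upgrade transformers is a reasonable next step.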

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.