In the world of natural language processing (NLP), the BERT (Bidirectional Encoder Representations from Transformers) model has revolutionized the way machines understand human language. This blog post aims to provide a friendly guide on how to harness the capabilities of the BERT large model (uncased) for various language-related tasks.

What is BERT?

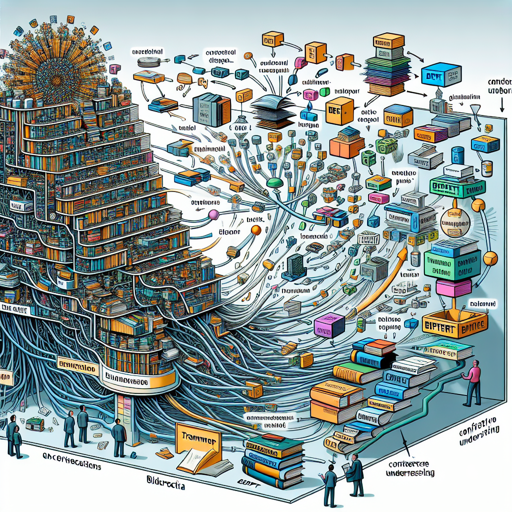

BERT is a transformer model pretrained on a large corpus of English text drawn from Wikipedia and the BookCorpus. Its training is self-supervised: it needs only raw text, with no human labeling. It also reads a sentence in both directions at once, so each word is interpreted using the context on both its left and its right. This sets it apart from RNNs, which read words one after another, and from autoregressive models like GPT, which hide the tokens that come later in the sequence.

Understanding BERT’s Mechanism

Imagine the BERT model as a skilled detective examining sentences. When presented with a case (a sentence with some words masked out), the detective gathers clues from the surrounding words on both sides to deduce what is missing. During pretraining, the detective practices with two objectives:

- Masked Language Modeling (MLM): 15% of the words in each input are randomly hidden, and BERT predicts the missing pieces using the context of the remaining words.

- Next Sentence Prediction (NSP): BERT receives pairs of sentences and predicts whether the second one actually followed the first in the original text, helping it grasp the flow of language.
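The two objectives above can be sketched in plain Python. This is a toy illustration, not BERT's actual preprocessing code: the function names `mask_tokens` and `make_nsp_pair`, the word-level tokens, and the way the random sentence is drawn are assumptions made for the example. The 80/10/10 split between `[MASK]`, a random token, and the unchanged token follows the procedure described for BERT's pretraining.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Return (corrupted_tokens, labels) for a toy MLM example.

    labels[i] holds the original token at positions the model must predict
    and None everywhere else.
    """
    if rng is None:
        rng = random.Random()
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            labels.append(token)
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                corrupted.append(token)              # 10%: leave the token unchanged
        else:
            labels.append(None)
            corrupted.append(token)
    return corrupted, labels


def make_nsp_pair(sentences, index, rng=None):
    """Build one toy NSP example starting from sentences[index].

    Returns (sentence_a, sentence_b, is_next). About half the time sentence_b
    is the true next sentence; otherwise it is some other sentence from the
    list. Assumes at least three sentences and index < len(sentences) - 1.
    """
    if rng is None:
        rng = random.Random()
    sentence_a = sentences[index]
    if rng.random() < 0.5:
        return sentence_a, sentences[index + 1], True
    candidates = [s for i, s in enumerate(sentences) if i not in (index, index + 1)]
    return sentence_a, rng.choice(candidates), False


if __name__ == "__main__":
    words = "the detective examined every clue in the quiet room".split()
    print(mask_tokens(words, vocab=words, rng=random.Random(0)))
```

Passing a seeded `random.Random` makes the corruption reproducible, which is handy when you want to inspect a specific example.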

How to Use BERT in Your Projects

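Here’s a step-by-step guide to getting started with the BERT large model (uncased) in Python, with both PyTorch and TensorFlow. The examples assume the Hugging Face Transformers library is installed, along with whichever of the two frameworks you plan to use.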

Using BERT for Masked Language Modeling

from transformers import pipeline

unmasker = pipeline('fill-mask', model='bert-large-uncased')

result = unmasker("Hello I'm a [MASK] model.")

print(result)

Extracting Features with BERT

To utilize BERT for feature extraction in PyTorch:

from transformers import BertTokenizer, BertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')

model = BertModel.from_pretrained("bert-large-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='pt')

output = model(**encoded_input)

For TensorFlow, the process is similar:

from transformers import BertTokenizer, TFBertModel

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')

model = TFBertModel.from_pretrained("bert-large-uncased")

text = "Replace me by any text you'd like."

encoded_input = tokenizer(text, return_tensors='tf')

output = model(encoded_input)

Troubleshooting Tips

While working with BERT, you might encounter a few bumps along the journey. Here are some troubleshooting ideas:

- Ensure all libraries are up-to-date: Compatibility issues can arise if you’re using an outdated version of the Hugging Face Transformers library. Check for updates regularly, for example with pip install --upgrade transformers.

- Check model and tokenizer names: Make sure the name is spelled exactly (here, bert-large-uncased) and that the tokenizer and the model are loaded from the same checkpoint.

- Memory issues: BERT large is a big model and can consume a lot of RAM. If you encounter memory errors, consider a smaller checkpoint such as bert-base-uncased, or reduce the batch size.
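To make the memory advice concrete, here is a rough sketch. It assumes roughly 336 million parameters for bert-large-uncased (the figure listed on its model card) and 4 bytes per parameter (float32). Activations and framework overhead come on top of that, so treat the result as a lower bound. The helper names `weight_memory_gib`, `batches`, and `embed_in_batches` are made up for this example and are not part of the Transformers library.

```python
def weight_memory_gib(num_params, bytes_per_param=4):
    """Approximate memory needed just to hold the weights, in GiB."""
    return num_params * bytes_per_param / 1024**3


def batches(items, batch_size):
    """Yield consecutive slices of `items` with at most `batch_size` elements."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_in_batches(texts, batch_size=8, model_name="bert-large-uncased"):
    """Run BERT over `texts` a few at a time; return one [CLS] vector per text."""
    # Imported lazily so the helpers above work without torch/transformers installed.
    import torch
    from transformers import BertModel, BertTokenizer

    tokenizer = BertTokenizer.from_pretrained(model_name)
    model = BertModel.from_pretrained(model_name)
    model.eval()

    vectors = []
    for batch in batches(texts, batch_size):
        # Pad to the longest text in the batch and cut off anything past 512 tokens.
        encoded = tokenizer(batch, padding=True, truncation=True,
                            max_length=512, return_tensors="pt")
        with torch.no_grad():  # inference only: do not keep gradient buffers
            output = model(**encoded)
        # last_hidden_state has shape (batch, sequence_length, hidden_size);
        # position 0 is the [CLS] token.
        vectors.extend(output.last_hidden_state[:, 0, :])
    return vectors
```

Under these assumptions, `weight_memory_gib(336_000_000)` comes out to about 1.25 GiB for the weights alone. Lowering `batch_size` in `embed_in_batches` trades speed for a smaller peak memory footprint.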


Limitations and Bias

Despite its remarkable capabilities, BERT is not without limitations. Because it learns from large datasets, it can propagate biases present in that data. For instance, it may associate specific roles with genders when making predictions.

Always be aware of this potential skew when interpreting results from BERT or any fine-tuned model.
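One way to look for this skew is to run the same fill-mask prompt with only the gendered word changed and compare the top predictions. The sketch below assumes the fill-mask pipeline returns a list of dictionaries with `token_str` and `score` keys. The helper names and the prompt template are illustrative choices, not part of the library.

```python
def top_tokens(predictions, k=5):
    """Return the k highest-scoring predicted tokens from fill-mask output."""
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    return [p["token_str"].strip() for p in ranked[:k]]


def compare_prompts(unmasker, template, subjects, k=5):
    """Fill the same template for each subject and collect the top tokens."""
    return {
        subject: top_tokens(unmasker(template.format(subject=subject)), k)
        for subject in subjects
    }


def run_probe():
    """Download the model and print the top fills for two contrasting prompts."""
    # Imported lazily so the helpers above can be used without transformers.
    from transformers import pipeline

    unmasker = pipeline("fill-mask", model="bert-large-uncased")
    results = compare_prompts(
        unmasker,
        template="The {subject} worked as a [MASK].",
        subjects=["man", "woman"],
    )
    for subject, tokens in results.items():
        print(subject, tokens)
```

Calling `run_probe()` prints one list of predicted occupations per subject. Large differences between the lists hint at associations the model picked up from its training data, though a single prompt is only anecdotal evidence.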

Conclusion

Embarking on your journey with the BERT model can be both exciting and rewarding. As you dive deeper, keep in mind the power of context and relationships within language. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.