In artificial intelligence (AI) and machine learning, computational power is king. As organizations increasingly rely on data-driven models, the need for scalable, efficient processing has never been greater. Google is stepping up to the plate with its second- and third-generation Cloud TPU Pods, machines built to train large models in minutes rather than hours. Let’s explore what makes these Pods a formidable tool in the AI landscape.

What Are TPU Pods?

Cloud TPU Pods are clusters of Tensor Processing Units (TPUs), Google’s custom chips for accelerating machine learning workloads, linked together into a single supercomputer-class system. The latest v3 Pods combine over 1,000 TPUs and offer up to 100 petaFLOPS of processing power. On raw FLOPS, that would place them among the top-ranking supercomputers in the world, with an important caveat: TPUs operate at lower numerical precision than the benchmarks used to rank supercomputers, so the figures are not directly comparable.
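To put those headline numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes exactly 1,000 TPUs and exactly 100 petaFLOPS, which rounds the article's "over 1,000" and "up to 100" figures, so the result is an order-of-magnitude estimate rather than an official per-chip specification.

```python
# Rough per-TPU throughput implied by the Pod-level figures quoted above.
# Both inputs are rounded assumptions, not official specifications.
PETA = 10**15
TERA = 10**12

pod_flops = 100 * PETA   # "up to 100 petaFLOPS"
tpus_per_pod = 1_000     # "over 1,000 custom TPUs", rounded for illustration

per_tpu_teraflops = pod_flops / tpus_per_pod / TERA
print(f"Roughly {per_tpu_teraflops:.0f} teraFLOPS per TPU")
```

Because the real Pod holds more than 1,000 TPUs and the 100 petaFLOPS figure is a peak, treat the output as a ballpark only.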

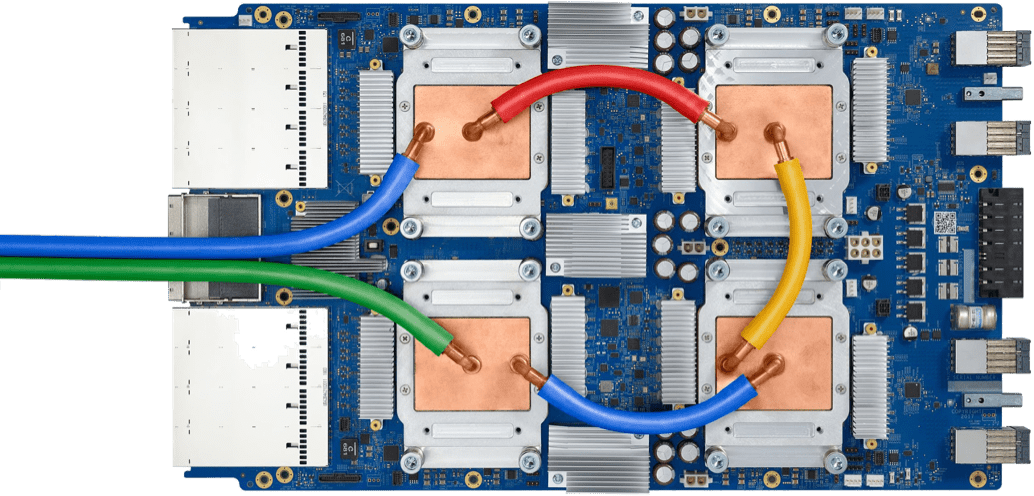

Liquid Cooling and Efficient Performance

One of the standout features of the v3 TPU Pods is their liquid-cooled design, which lets the hardware sustain high performance while keeping energy use in check. Liquid cooling is critical for removing the heat generated during intensive computations, so the chips can run at full speed without overheating, a common concern with machinery this powerful. The message from Google is clear: it’s not just about getting more power; it’s about getting that power sustainably and efficiently.

Scalability: Pods vs. Slices

Flexibility is key for developers looking to integrate TPU Pods into their workflow. Google has acknowledged this by allowing users to rent “slices” of a TPU Pod rather than the entire Pod. This lets developers match resources to the job, particularly when working on smaller projects or experimenting with new models. Whether you need full Pod capabilities or just a fraction of that power, Google provides options for a range of computational needs.

Speed Factor: Training Models in Minutes

Consider model training speed: a standard ResNet-50 image classification model can be trained on the ImageNet dataset in just two minutes on a full TPU v3 Pod. For comparison, a v2 Pod, though still impressive, requires 11.3 minutes with 265 TPUs. On a single TPU, training time jumps dramatically to 302 minutes. The gap highlights the productivity gains of the newer generation of TPUs, which let developers prototype and iterate far faster.
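The relative speedups implied by these timings can be worked out with a few lines of Python. This is a minimal sketch that uses only the three training times quoted above; the dictionary labels and the `speedup` helper are illustrative names, and the "v3 Pod" label assumes the two-minute figure refers to a full v3 Pod.

```python
# Training times in minutes for ResNet-50 on ImageNet, as quoted in the text.
TRAINING_MINUTES = {
    "single TPU": 302.0,
    "v2 Pod (265 TPUs)": 11.3,
    "v3 Pod": 2.0,
}


def speedup(baseline_minutes: float, minutes: float) -> float:
    """Return how many times faster a run is than the baseline run."""
    return baseline_minutes / minutes


baseline = TRAINING_MINUTES["single TPU"]
speedups = {
    name: speedup(baseline, minutes)
    for name, minutes in TRAINING_MINUTES.items()
}

for name, factor in speedups.items():
    print(f"{name}: {TRAINING_MINUTES[name]:g} min, "
          f"{factor:.1f}x the speed of a single TPU")
```

On these figures, the v2 Pod configuration finishes about 27 times faster than a single TPU, and the v3 Pod about 151 times faster.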

Use Cases: When and Why to Use TPU Pods

- Rapid Prototyping: If you’re looking to quickly develop and test new machine learning models, TPU Pods enable swift iterations, allowing you to explore a multitude of ideas effectively.

- Large Datasets: Organizations with massive labeled datasets can use the Pods not just to train their models but to retrain and refine them repeatedly, which can lead to higher accuracy.

- Deadline-Driven Projects: When time is of the essence, such as for time-sensitive research or product launches, using TPU Pods can drastically shorten the model training cycle.

Conclusion: Embracing the Future of AI

Google’s Cloud TPU Pods are more than a technological advancement; they’re a game-changer for developers and organizations navigating the complexities of AI and machine learning. With their powerful capabilities, flexible scalability, and exceptional speed, it’s clear why they represent a crucial asset for anyone serious about leveraging AI in today’s data-driven environment.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.
