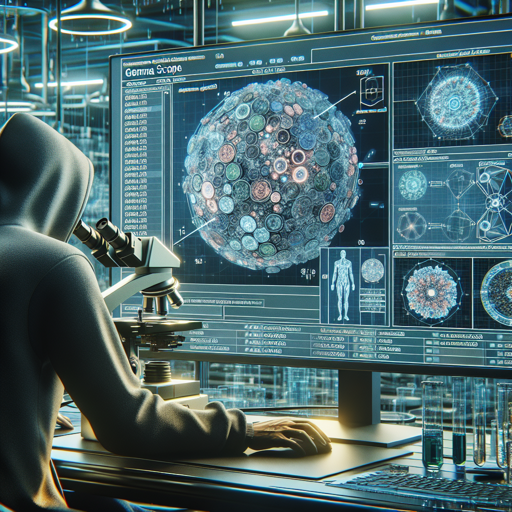

This guide introduces Gemma Scope, a suite of sparse autoencoders for inspecting the internal activations of Gemma 2 language models. Much as a microscope lets a biologist examine individual cells, Gemma Scope lets you examine what a model's internal activations represent rather than only observing its outputs.

What is Gemma Scope?

Gemma Scope is an open suite of sparse autoencoders (SAEs) trained on the Gemma 2 9B and 2B models. Just as a microscope lets biologists see the finer details of life, Gemma Scope lets researchers break a model's activations down into more interpretable concepts. See the Gemma Scope landing page for details on the whole suite.

Understanding `gemma-scope-2b-pt-att`

Each release in the suite is named after what it was trained on. The name `gemma-scope-2b-pt-att` breaks down as follows:

- `gemma-scope-`: The Gemma Scope suite described above.

- `2b-pt-`: These SAEs were trained on the Gemma v2 2B base model.

- `att`: These SAEs were trained on the attention layer outputs, taken before the final linear projection.
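The naming scheme above can be read mechanically. The following is a minimal sketch of such a parser; `parse_scope_name`, `ScopeName`, and `SITES` are hypothetical helper names, not part of any Gemma Scope tooling, and the `res` and `mlp` entries are assumptions drawn from sibling releases that this article does not cover.

```python
from dataclasses import dataclass

# Site suffixes and what they refer to. Only "att" is described in this
# article; "res" and "mlp" are assumptions based on sibling releases.
SITES = {
    "att": "attention layer outputs, before the final linear projection",
    "res": "residual stream (assumed, not covered here)",
    "mlp": "MLP outputs (assumed, not covered here)",
}


@dataclass(frozen=True)
class ScopeName:
    suite: str       # always "gemma-scope"
    model_size: str  # e.g. "2b"
    variant: str     # e.g. "pt" for the base model
    site: str        # e.g. "att"


def parse_scope_name(name: str) -> ScopeName:
    """Split a release name such as 'gemma-scope-2b-pt-att' into its parts."""
    prefix = "gemma-scope-"
    if not name.startswith(prefix):
        raise ValueError(f"not a Gemma Scope release name: {name!r}")
    parts = name[len(prefix):].split("-")
    if len(parts) != 3:
        raise ValueError(f"expected <size>-<variant>-<site>, got {name!r}")
    size, variant, site = parts
    if site not in SITES:
        raise ValueError(f"unknown site suffix: {site!r}")
    return ScopeName("gemma-scope", size, variant, site)


info = parse_scope_name("gemma-scope-2b-pt-att")
print(info.model_size, info.variant, "->", SITES[info.site])
```

Rejecting unknown suffixes early is a deliberate choice here: pairing an SAE with activations from the wrong site is an easy mistake to make and a hard one to notice later.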

How to Use Gemma Scope

To effectively employ Gemma Scope in your work, follow these steps:

- Installation: Ensure that you have the necessary dependencies installed to run the Gemma Scope suite.

- Select the Autoencoder: Choose the appropriate SAE for your needs, such as `gemma-scope-2b-pt-att`.

- Run the Analysis: Collect the model's activations at the site your chosen SAE was trained on (for `att`, the attention layer outputs) and pass them through the SAE to decompose them into sparse features.

- Interpret Results: Analyze the output to understand the underlying concepts represented by the internal model activations.
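The "run" and "interpret" steps above can be sketched as a toy forward pass through a sparse autoencoder. This is an illustration under stated assumptions, not the official loading code. It uses a JumpReLU activation (a ReLU with a learned per-feature threshold), which is the activation the Gemma Scope release describes. The weights are tiny made-up values rather than the released parameters, and the names (`encode`, `decode`, `top_features`, `W_ENC`, and so on) are chosen here for illustration. Plain Python lists keep the sketch dependency-free; real use would rely on an array library and weights loaded from the released checkpoints.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[i][j]


def affine(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Compute x @ weight + bias, where weight has shape (len(x), len(bias))."""
    if len(weight) != len(x):
        raise ValueError(f"expected {len(weight)} inputs, got {len(x)}")
    return [
        sum(x[i] * weight[i][j] for i in range(len(x))) + bias[j]
        for j in range(len(bias))
    ]


def encode(x: Vector, w_enc: Matrix, b_enc: Vector, threshold: Vector) -> Vector:
    """JumpReLU encoder: keep a pre-activation only if it exceeds its threshold."""
    pre = affine(x, w_enc, b_enc)
    return [max(p, 0.0) if p > t else 0.0 for p, t in zip(pre, threshold)]


def decode(latents: Vector, w_dec: Matrix, b_dec: Vector) -> Vector:
    """Reconstruct the activation vector from the sparse latents."""
    return affine(latents, w_dec, b_dec)


def top_features(latents: Vector, k: int) -> List[Tuple[int, float]]:
    """Return up to k (feature index, activation) pairs, strongest first."""
    active = [(i, a) for i, a in enumerate(latents) if a > 0.0]
    return sorted(active, key=lambda pair: pair[1], reverse=True)[:k]


# Toy parameters: a 2-dimensional activation and 3 SAE features (made up).
W_ENC = [[1.0, 0.0, 1.0],
         [0.0, 1.0, 1.0]]
B_ENC = [0.0, 0.0, 0.0]
THRESHOLD = [0.5, 0.5, 2.5]
W_DEC = [[1.0, 0.0],
         [0.0, 1.0],
         [0.5, 0.5]]
B_DEC = [0.0, 0.0]

activation = [2.0, 0.25]  # stands in for one attention-output vector
latents = encode(activation, W_ENC, B_ENC, THRESHOLD)
reconstruction = decode(latents, W_DEC, B_DEC)

print(latents)                   # [2.0, 0.0, 0.0]
print(reconstruction)            # [2.0, 0.0]
print(top_features(latents, 2))  # [(0, 2.0)]
```

Only feature 0 clears its threshold in this toy case, so the reconstruction keeps the dominant component of the input and drops the small one. Interpreting a real SAE follows the same pattern at scale: find the few features that fire on an input, then look at which inputs make each of them fire.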

Troubleshooting

If you encounter any issues while working with Gemma Scope, here are a few troubleshooting tips:

- Installation Issues: Ensure all dependencies are correctly installed. Consider checking for version compatibility.

- Model Output Not as Expected: Check that you selected the right SAE, and confirm that the activations you feed it come from the model and site it was trained on.

- Performance Lag: If the analysis takes too long, check that your hardware (memory and, where applicable, GPU) is adequate for the model and SAE you are running.
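When the output looks wrong, one quick diagnostic is to measure how well the SAE reconstructs the activations you gave it. The sketch below computes the fraction of variance unexplained (FVU), a common reconstruction metric. The function names are hypothetical, and the rule of thumb in the comments is an assumption rather than a documented threshold.

```python
from typing import List, Sequence

Vector = List[float]


def check_width(activation: Sequence[float], expected_width: int) -> None:
    """Fail fast if an activation vector does not match the SAE input width."""
    if len(activation) != expected_width:
        raise ValueError(
            f"activation has width {len(activation)}, "
            f"but the SAE expects {expected_width}"
        )


def fraction_variance_unexplained(
    originals: List[Vector], reconstructions: List[Vector]
) -> float:
    """Squared reconstruction error divided by total variance around the mean.

    0.0 means perfect reconstruction; 1.0 is no better than always predicting
    the mean activation. Values near or above 1.0 suggest (as a rule of thumb,
    not a documented threshold) that the SAE and activations are mismatched.
    """
    if not originals or len(originals) != len(reconstructions):
        raise ValueError("need two non-empty batches of the same size")
    n, d = len(originals), len(originals[0])
    mean = [sum(v[j] for v in originals) / n for j in range(d)]
    residual = sum(
        (o - r) ** 2
        for vo, vr in zip(originals, reconstructions)
        for o, r in zip(vo, vr)
    )
    total = sum((o - m) ** 2 for vo in originals for o, m in zip(vo, mean))
    if total == 0.0:
        raise ValueError("activations have zero variance; FVU is undefined")
    return residual / total


batch = [[1.0, 0.0], [3.0, 0.0]]
print(fraction_variance_unexplained(batch, batch))                     # 0.0
print(fraction_variance_unexplained(batch, [[2.0, 0.0], [2.0, 0.0]]))  # 1.0
```

A width mismatch caught by `check_width` usually points to the wrong model size, while a high FVU with matching widths more often points to the wrong layer or site.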


Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.