Video classification has advanced quickly in recent years, and VideoMAE is one of the notable pre-trained models in this area. This post walks through how to use the large pre-trained VideoMAE checkpoint and includes troubleshooting tips for common problems.

What is VideoMAE?

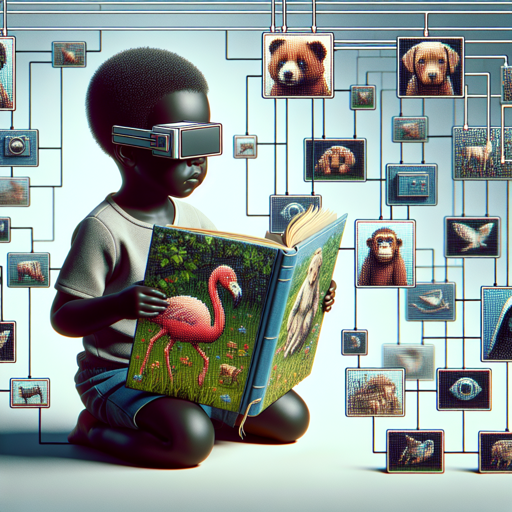

VideoMAE, short for Video Masked Autoencoders, extends the principles of Masked Autoencoders (MAE) to video content. Imagine teaching a child how to recognize animals using a picture book. Instead of showing them the whole image, you cover parts of it and ask them to identify the hidden animals based solely on the features they can see. This is akin to how VideoMAE learns: it observes videos in pieces, learns to reconstruct the missing segments, and builds a robust internal representation of the video content.
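
To make the covering-up step concrete, here is a minimal sketch of one way to build such a mask in plain Python. It assumes a "tube" pattern, where the same spatial patches are hidden in every temporal slot, and a mask ratio of 0.9. Both are illustrative assumptions rather than settings taken from this post, as are the function name `tube_mask` and the defaults of 196 patches per frame and 8 temporal slots.

```python
import random


def tube_mask(patches_per_frame=196, temporal_slots=8, mask_ratio=0.9, seed=0):
    """Return a flat list of booleans (True = hidden), one per space-time patch.

    All defaults are assumptions for illustration: 196 patches per frame,
    8 temporal slots, and 90% of the patches hidden.
    """
    num_masked = int(mask_ratio * patches_per_frame)

    # Pick which spatial positions to hide.
    rng = random.Random(seed)
    hidden = set(rng.sample(range(patches_per_frame), num_masked))
    frame_mask = [position in hidden for position in range(patches_per_frame)]

    # Repeat the same spatial pattern in every temporal slot (the "tube").
    return frame_mask * temporal_slots


mask = tube_mask()
print(f"{sum(mask)} of {len(mask)} patches are hidden")
```

To pass a mask like this to a PyTorch model, it would need to be converted to a boolean tensor with a batch dimension, for example with `torch.tensor(mask).unsqueeze(0)`.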

Features and Applications

VideoMAE has the following key capabilities:

- Predicts pixel values for masked patches of video sequences.

- Can be fine-tuned for various downstream tasks, such as video classification.

- Utilizes a transformer architecture similar to Vision Transformers (ViT).
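
As a rough illustration of the classification use case, the sketch below wraps a fine-tuned checkpoint in a helper function. The helper name `classify_video` is hypothetical, and the default checkpoint `MCG-NJU/videomae-base-finetuned-kinetics` is an assumption (a base-sized model fine-tuned on Kinetics-400, not the large pre-trained model covered in this post). Treat it as a starting point rather than a reference implementation.

```python
def classify_video(frames, checkpoint="MCG-NJU/videomae-base-finetuned-kinetics"):
    """Return the predicted label for a list of 16 frames, each shaped (3, H, W)."""
    # Imported inside the function so the heavy libraries load only when it is called.
    import torch
    from transformers import VideoMAEForVideoClassification, VideoMAEImageProcessor

    processor = VideoMAEImageProcessor.from_pretrained(checkpoint)
    model = VideoMAEForVideoClassification.from_pretrained(checkpoint)
    model.eval()

    # The processor resizes and normalizes the frames and adds a batch dimension.
    inputs = processor(frames, return_tensors="pt")
    with torch.no_grad():
        logits = model(**inputs).logits  # shape: (1, num_labels)

    predicted_id = logits.argmax(-1).item()
    return model.config.id2label[predicted_id]
```

Calling `classify_video(list(np.random.randn(16, 3, 224, 224)))` downloads the checkpoint on first use and returns one of its class labels. With random noise as input, the label itself is meaningless.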

How to Use VideoMAE

The following example loads the pre-trained model and predicts pixel values for randomly masked patches of a video:

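```python
from transformers import VideoMAEImageProcessor, VideoMAEForPreTraining
import numpy as np
import torch

num_frames = 16
# Dummy video: a list of 16 random frames, each shaped (3, 224, 224)
video = list(np.random.randn(num_frames, 3, 224, 224))

processor = VideoMAEImageProcessor.from_pretrained("MCG-NJU/videomae-large")
model = VideoMAEForPreTraining.from_pretrained("MCG-NJU/videomae-large")

# Resulting tensor has shape (batch_size, num_frames, channels, height, width)
pixel_values = processor(video, return_tensors="pt").pixel_values

# Each token spans tubelet_size consecutive frames, so the temporal
# dimension of the sequence is num_frames // tubelet_size
num_patches_per_frame = (model.config.image_size // model.config.patch_size) ** 2
seq_length = (num_frames // model.config.tubelet_size) * num_patches_per_frame
# Random boolean mask: True marks a patch to hide and reconstruct
bool_masked_pos = torch.randint(0, 2, (1, seq_length)).bool()

outputs = model(pixel_values, bool_masked_pos=bool_masked_pos)
loss = outputs.loss  # reconstruction loss on the masked patches
```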

Understanding the Code

Returning to the picture-book analogy, the code breaks down into the following steps:

- Import Libraries: Like gathering the materials needed to teach, you start by importing the necessary libraries.

- Create a Random Video: You simulate the video by generating random data, akin to showing the child multiple varied pictures.

- Processor and Model Setup: Here, you prepare the environment and tools necessary for learning, similar to laying out the picture book.

- Pixel Values Processing: The processor converts the raw frames into a normalized tensor the model can consume, much as you would prepare the pictures before showing them to the child.

- Masked Positions: Randomly picking parts of the video to hide is like covering parts of the images for the child to guess.

- Model Output: Finally, the model processes the video and returns a loss, which measures how closely it reconstructed the hidden portions. A lower loss means a better reconstruction.
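
The length of the mask follows from simple arithmetic: the number of patches per frame multiplied by the number of temporal slots, where each slot groups `tubelet_size` consecutive frames. The sketch below works through it with values assumed to match the `videomae-large` configuration (224-pixel frames, 16-pixel patches, tubelet size 2). Check `model.config` for the actual values; the function name `videomae_seq_length` is hypothetical.

```python
def videomae_seq_length(num_frames, image_size, patch_size, tubelet_size):
    """Number of space-time tokens the model sees for one video."""
    patches_per_frame = (image_size // patch_size) ** 2  # spatial grid, e.g. 14 x 14
    temporal_slots = num_frames // tubelet_size          # frames grouped into tubelets
    return temporal_slots * patches_per_frame


# Assumed configuration values; verify them against model.config.
print(videomae_seq_length(num_frames=16, image_size=224, patch_size=16, tubelet_size=2))
```

With these assumed values the result is 8 × 196 = 1568, so `bool_masked_pos` needs shape `(1, 1568)` for a single video.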

Troubleshooting Tips

If you encounter issues while using VideoMAE, consider the following:

- Ensure all library versions are up to date.

- Check that the input video is structured correctly: the example above passes 16 frames, each shaped (3, 224, 224), and the mask length must equal the model's sequence length.

- Consult the model's documentation if results are not as expected.
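
For the first tip, a quick way to see what is installed is to query package metadata from the standard library. This is a small sketch; the helper name `installed_versions` and the choice of packages to check are assumptions based on the imports used in this post.

```python
from importlib import metadata


def installed_versions(packages=("transformers", "torch", "numpy")):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions().items():
    print(f"{name}: {version or 'not installed'}")
```

Including this output when reporting a problem makes it easier for others to reproduce it.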


Conclusion

VideoMAE brings masked-autoencoder pre-training to video. By starting from the pre-trained model and fine-tuning it for tasks such as video classification, you can add strong video understanding capabilities to your projects.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.