Introduction

The QCPG++ Dataset gives researchers and developers a way to study diversity in text generation. This post walks through the components of the dataset, the text diversity metrics it relies on, and the results obtained from the training process.

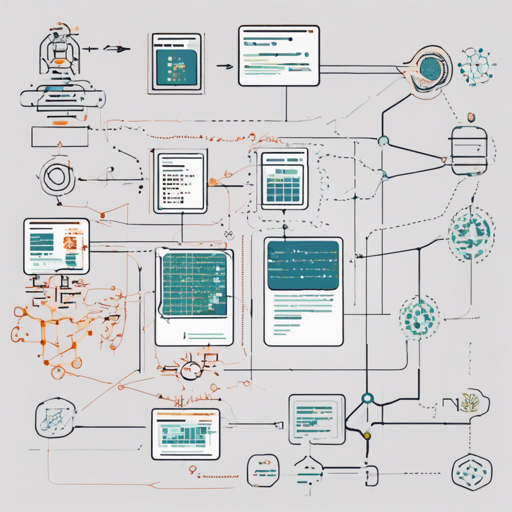

Understanding the QCPG++ Dataset

The QCPG++ Dataset is built on MSCOCO and aims to improve the quality of text generation through diversity metrics. Imagine you're a chef tasked with making the perfect dish. Your ingredients are the data points in QCPG++, and your tasting along the way corresponds to the metrics you measure. The dish is the generated text, and the diversity metrics check that it is varied rather than monotonous.

Text Diversity Metrics

To craft compelling and varied text, several dimensions of diversity are measured. The key metrics include:

- Semantic Similarity: Measured with the BLEURT score, this metric estimates how close a generated text is in meaning to its reference text.

- Syntactic Diversity: Evaluated through the edit distance between constituency parse trees, this metric captures how much the sentence structure differs.

- Lexical Diversity: Assessed using character-level edit distance, this metric captures how much the surface wording differs.

- Phonological Diversity: Looks into the rhythmic diversity within the generated text.

- Morphological Diversity: Measured by the edit distance between POS (part-of-speech) tag sequences, this metric captures how the pattern of word classes differs between texts.
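
Several of the metrics above are edit distances applied to different kinds of sequences. As a minimal sketch, and not the dataset's actual implementation, the snippet below computes a Levenshtein distance over characters (lexical) and over POS tags (morphological). The `normalized_distance` helper, the normalization by the longer length, the example sentences, and the hand-written POS tags are all assumptions made for illustration.

```python
from typing import Sequence


def edit_distance(a: Sequence, b: Sequence) -> int:
    """Levenshtein distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def normalized_distance(a: Sequence, b: Sequence) -> float:
    """Edit distance scaled to [0, 1] by the longer length (an assumed normalization)."""
    longest = max(len(a), len(b))
    return edit_distance(a, b) / longest if longest else 0.0


source = "a man rides a horse"
paraphrase = "a horse is ridden by a man"

# Lexical diversity: character-level edit distance between the two strings.
lexical = normalized_distance(source, paraphrase)

# Morphological diversity: edit distance over POS tag sequences.
# These tags are written by hand for illustration; a real pipeline would use a tagger.
source_pos = ["DT", "NN", "VBZ", "DT", "NN"]
paraphrase_pos = ["DT", "NN", "VBZ", "VBN", "IN", "DT", "NN"]
morphological = normalized_distance(source_pos, paraphrase_pos)

print(f"lexical={lexical:.3f} morphological={morphological:.3f}")
```

The same `edit_distance` function works on any sequence, so the only thing that changes between dimensions is how the text is turned into tokens.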

Results of the QCPG++ Dataset Training

Training on the dataset produced the following results:

- Train Loss: 1.4309

- Dev Loss: 1.765

- Dev BLEU: 11.7859

These results offer insight into how well the model is performing. The Train Loss reflects how well the model fits the training data, while the Dev Loss indicates its performance on held-out validation data. The Dev BLEU score is a popular metric for evaluating the quality of generated text against reference texts, with higher values indicating better performance.
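
To give a feel for what a BLEU score measures, here is a simplified sentence-level sketch on a 0-100 scale. It assumes whitespace tokenization, a single reference, and no smoothing; the function name `simple_bleu` is hypothetical. Reported scores are normally computed at corpus level with an established tool, so this is for intuition only and will not reproduce the Dev BLEU above.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate: str, reference: str, max_n: int = 4) -> float:
    """Simplified sentence-level BLEU on a 0-100 scale (single reference, no smoothing)."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives a score of 0
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty: penalize candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    # Geometric mean of the n-gram precisions, scaled to 0-100.
    return 100.0 * bp * math.exp(sum(log_precisions) / max_n)


reference = "a man rides a horse"
print(simple_bleu("a man rides a horse", reference))        # exact match
print(simple_bleu("a man rides a horse today", reference))  # partial overlap
print(simple_bleu("dogs bark loudly today", reference))     # no overlap
```

Because the score is a geometric mean of n-gram precisions, a paraphrase that rewords the reference heavily can score low on BLEU while still preserving the meaning, which is one reason to read it alongside the diversity metrics.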

Troubleshooting Insights

As with any technical project, you may encounter some hurdles along the way. Here are a few troubleshooting tips to ensure smooth sailing with your implementation:

- If the train loss is high relative to the dev loss, the model may be underfitting, so consider adjusting your learning rate. A learning rate of 1e-4 is a reasonable starting point, but experimenting with different values can help.

- When the BLEU score does not improve, ensure your training set is sufficiently diverse. Inadequate variety in the input can significantly hinder performance.

- To assess the metrics accurately, make sure your implementations of the BLEURT score and the parse tree algorithms are correct. Errors here can lead to misleading results.

- For persistent issues, ask in AI developer communities or online forums.
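
One lightweight way to catch a faulty metric implementation is to test properties that any edit distance must satisfy. The sketch below is an assumption about how such a check could look, not part of the QCPG++ tooling: `check_distance_properties` and `char_edit_distance` are hypothetical names, and the checks apply to edit-distance-style metrics only, not to a learned score such as BLEURT.

```python
from functools import lru_cache
from itertools import combinations
from typing import Callable, List, Sequence


def check_distance_properties(metric: Callable[[str, str], float],
                              samples: Sequence[str]) -> List[str]:
    """Return readable descriptions of violations; an empty list means all checks passed."""
    problems = []
    for s in samples:
        if metric(s, s) != 0:
            problems.append(f"identity failed for {s!r}")
    for a, b in combinations(samples, 2):
        if metric(a, b) < 0:
            problems.append(f"negative distance for {a!r}, {b!r}")
        if metric(a, b) != metric(b, a):
            problems.append(f"asymmetric for {a!r}, {b!r}")
    return problems


def char_edit_distance(a: str, b: str) -> int:
    """Character-level Levenshtein distance, written recursively with memoization."""
    @lru_cache(maxsize=None)
    def dist(i: int, j: int) -> int:
        if i == len(a):
            return len(b) - j
        if j == len(b):
            return len(a) - i
        if a[i] == b[j]:
            return dist(i + 1, j + 1)
        return 1 + min(dist(i + 1, j), dist(i, j + 1), dist(i + 1, j + 1))
    return dist(0, 0)


samples = ["a man rides a horse", "a horse is ridden by a man", ""]

# A correct implementation should report no problems.
print(check_distance_properties(char_edit_distance, samples))

# A deliberately broken "distance" to show what a failure report looks like.
broken = lambda a, b: len(a) - len(b)
print(check_distance_properties(broken, samples))
```

Checks like these do not prove an implementation is right, but they are cheap and catch common mistakes such as swapped arguments or a wrong base case.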


Conclusion

The QCPG++ Dataset offers a useful toolkit for improving text generation by measuring several dimensions of diversity. Just as a skilled chef uses diverse ingredients to create exceptional dishes, researchers can use these metrics to refine their models and improve their outputs. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies in artificial intelligence so that our clients benefit from the latest technological innovations.